AI Governance in Healthcare: Best Practices

Post Summary

AI governance in healthcare refers to the frameworks, policies, and practices that ensure the ethical, safe, and compliant use of AI technologies in healthcare settings.

It ensures patient safety, protects data privacy, mitigates risks, and builds trust in AI systems while complying with regulations like HIPAA and GDPR.

Key components include data governance, risk management, ethical oversight, compliance with regulations, and continuous monitoring of AI systems.

Challenges include managing data quality, addressing algorithmic bias, ensuring transparency, and navigating complex regulatory landscapes.

Best practices include establishing governance committees, using frameworks like PPTO, conducting regular audits, and fostering collaboration between stakeholders.

Organizations can scale governance by centralizing oversight, automating compliance processes, and leveraging partnerships with industry and academic entities.

AI governance in healthcare ensures that AI systems are safe, ethical, and compliant with regulations. It integrates into existing workflows to prioritize patient safety, legal compliance, and continuous system improvement. Key points include:

- Core Goals: Protect patient safety, meet regulatory requirements (e.g., HIPAA and FDA rules), and improve system performance.

- Ethical Principles: Transparency, accountability, and human oversight to prevent bias and ensure reliable decision-making.

- Regulatory Compliance: Adherence to U.S. laws like HIPAA, HITECH, and FDA rules, along with risk assessments and lifecycle monitoring.

- Governance Structures: Multi-disciplinary committees and frameworks like PPTO (People, Process, Technology, Operations) for structured oversight.

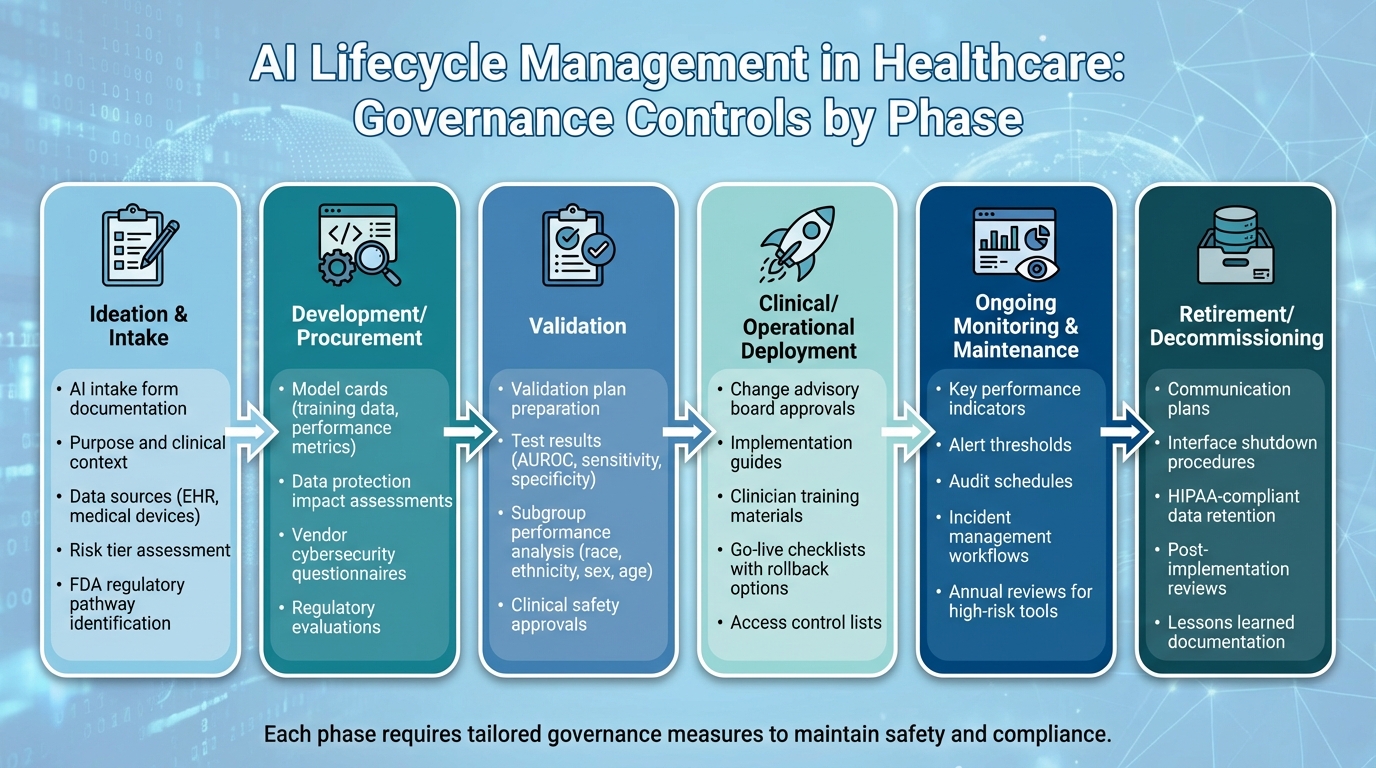

- Lifecycle Management: Tailored controls for each AI phase - development, deployment, monitoring, and decommissioning.

- Cybersecurity: Address risks like data breaches, ransomware, and model vulnerabilities, especially with third-party vendors.

Core Principles and Regulatory Requirements for AI Governance

Ethical Principles for AI in Healthcare

Effective AI governance in healthcare hinges on key values like transparency, fairness, accountability, and human oversight. Transparency means AI systems should clearly explain how they arrive at decisions. For example, diagnostic algorithms must clarify how they interpret patient data so clinicians can confidently rely on their outputs. Fairness focuses on minimizing biases, ensuring AI models trained on diverse datasets don’t inadvertently disadvantage rural or minority populations. Accountability involves assigning responsibility, often through governance committees tasked with managing AI-related risks. Finally, human oversight ensures clinicians maintain control over critical decisions.

For instance, some healthcare organizations mandate that physicians review AI-generated clinical insights before these are integrated into electronic health records, ensuring that human judgment remains central [9]. To further support these principles, many organizations establish multi-disciplinary AI governance committees. These groups, often including ethicists and patient representatives, review AI projects for potential biases and ensure alignment with organizational values.

These ethical principles form the foundation for navigating the complex regulatory environment surrounding AI in healthcare.

Understanding U.S. Regulatory Requirements

Building on these ethical standards, healthcare AI must also adhere to critical U.S. regulatory frameworks. For instance, the HIPAA Security Rule requires regular risk assessments to protect patient data, while the FDA requires premarket review and ongoing postmarket monitoring for AI-based diagnostic tools regulated as medical devices, with the strictest requirements reserved for high-risk devices. The FDA’s Total Product Lifecycle approach is designed to keep AI systems safe and effective throughout their use, from initial validation to long-term monitoring after deployment.

Additional federal requirements, such as civil rights laws and the HHS AI Strategy’s annual reporting guidelines, further shape the regulatory landscape. Together, these frameworks push healthcare organizations to balance innovation with rigorous safety, transparency, and compliance standards.

Turning Principles into Policies and Risk Frameworks

To translate ethical principles into actionable policies, healthcare organizations rely on multi-disciplinary committees to develop frameworks for explainability, bias audits, and clearly defined roles. These policies often include centralized AI inventories, pre-deployment reviews, and post-deployment monitoring to meet HIPAA and FDA requirements.

The People-Process-Technology-Operations (PPTO) framework provides a structured way to identify organizational gaps. Through stakeholder interviews and mapping governance needs onto clinical workflows, this approach ensures comprehensive oversight. For organizations working with third-party AI vendors or cloud-based solutions, platforms like Censinet RiskOps™ simplify vendor risk assessments. These tools strengthen data protection, support continuous monitoring, and ensure compliance for systems managing patient data, clinical applications, and medical devices.

Building Governance Structures for AI in Healthcare

Creating Multi-Disciplinary AI Governance Committees

To effectively govern AI in healthcare, organizations need committees that bring together diverse expertise. These committees should include clinicians, IT specialists, ethicists, legal advisors, patient representatives, and data scientists. This diverse team ensures that AI-related decisions account for clinical realities, technical possibilities, ethical considerations, and patient needs.

These governance groups are responsible for setting policies, ensuring ethical compliance, evaluating alignment with organizational values, and facilitating communication between clinical, IT, and leadership teams [2].

Striking the right balance between structured protocols and agile decision-making is essential, and clear structures come first: in September 2025, the Joint Commission and the Coalition for Health AI released guidance outlining seven elements for responsible AI use, starting with the need for clear governance structures and accountability [6]. Depending on an organization’s culture, decision-making can rely on voting or consensus mechanisms. The goal is to maintain rigorous oversight without creating delays that hinder progress.

Patient involvement is a cornerstone of effective governance. Including patient perspectives - through Patient Family Advisory Councils or patient advisors on committees - ensures that AI tools align with the real-world priorities of the communities they serve. This approach promotes transparency and builds trust [2].

This committee-driven strategy lays the foundation for implementing the People-Process-Technology-Operations (PPTO) Framework.

Using the People-Process-Technology-Operations (PPTO) Framework

The PPTO Framework provides a structured way to organize AI governance by focusing on four key areas: People (skills and roles), Process (standardized workflows), Technology (monitoring tools), and Operations (ongoing execution). This framework helps healthcare systems identify gaps and establish comprehensive oversight.

For instance, a Canadian hospital system applied the PPTO Framework through stakeholder interviews and workshops. This effort led to the creation of centralized algorithm inventories, lifecycle management policies, and a dedicated AI governance committee [2]. The framework proved flexible enough to adapt to the organization’s maturity level, translating broad principles into actionable steps.

Start by creating centralized AI inventories and standardizing workflows. Develop clear documentation and training materials, and share these resources through familiar channels like staff meetings or internal portals [2]. This approach ensures that the framework is accessible and actionable for all team members.
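A centralized AI inventory can start as a simple registry keyed by a stable tool ID. The sketch below is a minimal, hypothetical illustration: the `AITool` and `AIInventory` names and the fields (`owner`, `phase`, `risk_tier`) are assumptions, not part of the PPTO Framework or any cited policy.

```python
from dataclasses import dataclass, field


@dataclass
class AITool:
    # Hypothetical inventory record; field names are illustrative assumptions.
    tool_id: str
    name: str
    owner: str      # accountable person or team
    phase: str      # e.g. "validation", "deployment", "monitoring"
    risk_tier: str  # e.g. "high", "medium", "low"


@dataclass
class AIInventory:
    tools: dict[str, AITool] = field(default_factory=dict)

    def register(self, tool: AITool) -> None:
        # Reject duplicate IDs so each tool has exactly one inventory entry.
        if tool.tool_id in self.tools:
            raise ValueError(f"duplicate tool_id: {tool.tool_id}")
        self.tools[tool.tool_id] = tool

    def by_risk_tier(self, tier: str) -> list[AITool]:
        # Tools in the given tier, sorted by ID for stable committee reports.
        return sorted(
            (t for t in self.tools.values() if t.risk_tier == tier),
            key=lambda t: t.tool_id,
        )


inventory = AIInventory()
inventory.register(AITool("AI-002", "Sepsis alert", "Critical Care", "monitoring", "high"))
inventory.register(AITool("AI-001", "Scheduling optimizer", "Operations", "deployment", "low"))
inventory.register(AITool("AI-003", "Imaging triage", "Radiology", "validation", "high"))
high_risk = [t.tool_id for t in inventory.by_risk_tier("high")]
```

Rejecting duplicate IDs keeps one authoritative entry per tool, which makes the inventory easier to export for committee meetings or internal portals.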

By implementing the PPTO Framework, healthcare organizations can link AI governance with their existing quality and risk management systems.

Connecting AI Governance with Existing Systems

AI governance is most effective when integrated into established clinical governance, quality improvement, and patient safety structures. Aligning AI oversight with existing workflows - such as digital health initiatives, risk management, and quality improvement - enhances overall accountability.

"Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare."

- Matt Christensen, Sr. Director GRC at Intermountain Health [1].

Governance structures must address a wide range of risks, including those related to vendors, patient data, medical devices, and supply chains. Platforms like Censinet RiskOps™ facilitate this integration by streamlining vendor risk assessments and fostering collaboration between healthcare organizations and their third-party partners. For example, Terry Grogan, CISO at Tower Health, noted that using Censinet RiskOps™ reduced the number of full-time employees (FTEs) required for risk assessments from five to two, while simultaneously increasing the volume of assessments completed [1].

Successful integration also depends on effective change management. Early staff training and stakeholder engagement are critical for building support across departments. The American Heart Association’s network of nearly 3,000 hospitals - including over 500 rural and critical access facilities - illustrates how scalable governance can operate across diverse settings [4]. By embedding AI governance into familiar systems and ensuring adequate support, healthcare organizations can make oversight a part of everyday operations, driving continuous improvement throughout the AI lifecycle.

Managing AI Throughout Its Lifecycle in Healthcare

[Figure: AI Lifecycle Management in Healthcare: 6 Key Phases and Governance Controls]

Governance Controls for Each AI Lifecycle Phase

AI systems in healthcare progress through six key phases: ideation and intake, development/procurement, validation, clinical/operational deployment, ongoing monitoring and maintenance, and retirement/decommissioning. Each step demands tailored governance measures to maintain safety and compliance [2][5].

In the ideation and intake phase, organizations rely on an AI intake form to document critical details like the tool’s intended purpose, clinical context, affected workflows, data sources (e.g., EHR or medical devices), and risk tier. This form helps governance committees decide if the tool will directly influence diagnosis or treatment and identify any necessary FDA regulatory pathways early on [2][5][7].
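The intake step can be sketched as a structured form plus a triage rule. This is a hypothetical illustration: the `IntakeForm` fields follow the details listed above, but the tiering rule (direct influence on diagnosis or treatment means high risk, PHI use means medium) is an assumption, and any real tiering should come from the committee's own policy and FDA guidance.

```python
from dataclasses import dataclass


@dataclass
class IntakeForm:
    # Fields mirror the intake details described above; names are illustrative.
    intended_purpose: str
    clinical_context: str
    affected_workflows: list[str]
    data_sources: list[str]  # e.g. ["EHR", "medical device"]
    influences_diagnosis_or_treatment: bool
    uses_phi: bool


def triage(form: IntakeForm) -> dict:
    """Assign a hypothetical risk tier and flag whether FDA pathway review is needed."""
    if form.influences_diagnosis_or_treatment:
        tier = "high"
    elif form.uses_phi:
        tier = "medium"
    else:
        tier = "low"
    return {
        "risk_tier": tier,
        # Starts an early regulatory review; this flag is not a legal determination.
        "needs_fda_pathway_review": form.influences_diagnosis_or_treatment,
    }


imaging = IntakeForm(
    intended_purpose="Flag possible pneumothorax on chest X-rays",
    clinical_context="Emergency department",
    affected_workflows=["radiology read queue"],
    data_sources=["PACS", "EHR"],
    influences_diagnosis_or_treatment=True,
    uses_phi=True,
)
scheduler = IntakeForm(
    intended_purpose="Optimize operating room block scheduling",
    clinical_context="Perioperative operations",
    affected_workflows=["OR scheduling"],
    data_sources=["scheduling system"],
    influences_diagnosis_or_treatment=False,
    uses_phi=False,
)
imaging_result = triage(imaging)
scheduler_result = triage(scheduler)
```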

During development/procurement, essential documentation includes model cards detailing training data and performance metrics, data protection impact assessments, vendor cybersecurity questionnaires, and regulatory evaluations. For validation, organizations must prepare a thorough validation plan. This includes test results (e.g., AUROC, sensitivity, specificity), subgroup performance data broken down by race, ethnicity, sex, and age, and clinical safety approvals from relevant specialists [4][5][8].
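Subgroup performance reporting boils down to computing the same metric separately for each demographic group and comparing the results. The dependency-free sketch below computes sensitivity and specificity per subgroup from binary labels and predictions; the function names and toy data are hypothetical. A real validation plan would also report confidence intervals, since small subgroups produce noisy estimates.

```python
from collections import defaultdict


def subgroup_metrics(y_true, y_pred, groups):
    """Sensitivity and specificity per subgroup from binary labels and predictions."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        if truth == 1:
            c["tp" if pred == 1 else "fn"] += 1
        else:
            c["fp" if pred == 1 else "tn"] += 1
    report = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        report[group] = {
            # None means the subgroup has no cases of that class, so the metric is undefined.
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
            "n": positives + negatives,
        }
    return report


def max_gap(report, metric):
    """Largest difference in a metric between any two subgroups, ignoring undefined values."""
    values = [m[metric] for m in report.values() if m[metric] is not None]
    return max(values) - min(values)


# Toy data for illustration only.
y_true = [1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
groups = ["F", "F", "F", "F", "M", "M", "M", "M"]
report = subgroup_metrics(y_true, y_pred, groups)
```

A large `max_gap` does not by itself prove bias, but it tells the committee where to look before approving deployment.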

Deployment controls focus on ensuring smooth integration, including change advisory board approvals, implementation guides, clinician training materials, go-live checklists with rollback options, and access control lists. Once deployed, monitoring involves setting up key performance indicators, alert thresholds, audit schedules, and incident management workflows. When decommissioning, organizations follow a structured process, including communication plans, interface shutdown procedures, HIPAA-compliant data retention decisions, and post-implementation reviews to document outcomes and lessons learned [2][3]. These governance measures support comprehensive risk evaluations before and after deployment.
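One way to enforce these controls is to treat the six phases as a gated sequence: a tool can only move forward once the artifacts required to exit its current phase exist. The sketch below is hypothetical; the artifact names are shorthand for the documents described above, and the exact mapping of artifacts to gates is an assumption each organization would set for itself.

```python
PHASES = ["intake", "development", "validation", "deployment", "monitoring", "decommissioning"]

# Hypothetical exit requirements per phase; names abbreviate the documents above.
EXIT_REQUIREMENTS = {
    "intake": {"intake_form"},
    "development": {"model_card", "data_protection_impact_assessment",
                    "vendor_security_questionnaire", "regulatory_evaluation"},
    "validation": {"validation_plan", "test_results", "subgroup_performance",
                   "clinical_safety_approval"},
    "deployment": {"change_advisory_board_approval", "go_live_checklist", "rollback_plan",
                   "access_control_list", "monitoring_kpis", "alert_thresholds"},
    "monitoring": {"communication_plan", "interface_shutdown_procedure",
                   "data_retention_decision"},
}


def next_phase(current: str, artifacts: set[str]) -> str:
    """Return the next phase, or raise if required artifacts are missing."""
    idx = PHASES.index(current)  # raises ValueError for an unknown phase
    if idx == len(PHASES) - 1:
        raise ValueError("decommissioning is the final phase")
    missing = EXIT_REQUIREMENTS[current] - artifacts
    if missing:
        raise ValueError(f"cannot leave {current}; missing: {sorted(missing)}")
    return PHASES[idx + 1]
```

Raising with a sorted list of missing artifacts gives reviewers a concrete checklist instead of a bare rejection.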

Pre-Deployment and Post-Deployment Monitoring

Before deployment, organizations conduct multi-layered validation processes to assess technical performance, bias, and fairness. Technical validation uses a held-out test set reflecting the local patient population to report discrimination metrics and calibration scores [3][4]. Demographic bias analyses are performed, and mitigation strategies are applied if disparities in performance are detected [4][5].
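The discrimination and calibration checks can be illustrated without any libraries. The sketch below computes AUROC as the probability that a randomly chosen positive case scores higher than a randomly chosen negative one (ties count half), the Brier score (which reflects both discrimination and calibration), and calibration-in-the-large (observed event rate minus mean predicted risk). The function names and data are hypothetical; the pairwise AUROC loop is quadratic and only suitable for small illustrative sets.

```python
def auroc(y_true, scores):
    """Probability a random positive outranks a random negative (ties count half)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def brier_score(y_true, probs):
    """Mean squared difference between predicted probability and outcome (lower is better)."""
    return sum((p - y) ** 2 for y, p in zip(y_true, probs)) / len(y_true)


def calibration_in_the_large(y_true, probs):
    """Observed event rate minus mean predicted risk; near 0 suggests no overall over/under-prediction."""
    return sum(y_true) / len(y_true) - sum(probs) / len(probs)


# Toy held-out data for illustration only.
y = [0, 0, 1, 1]
p = [0.1, 0.4, 0.35, 0.8]
```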

For vendor-provided models, local validation is critical to account for variations in practice patterns, devices, and patient demographics. Clinical and usability testing - via simulations or pilot studies - evaluates factors like cognitive load, alert fatigue, and whether clinicians can override AI recommendations while understanding its limitations [4][5][8].

After deployment, ongoing monitoring relies on automated dashboards and statistical controls to track key metrics, such as input data and prediction drift. Any major changes trigger investigations or even model rollbacks [3][4][5]. Regular reassessments, especially for high-risk models, ensure performance remains consistent across demographic groups as clinical practices and populations evolve [4][5].
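The post does not name a specific drift statistic; the population stability index (PSI) is one commonly used option and is swapped in here for illustration. The sketch compares the binned distribution of a recent sample (an input feature or the model's predicted scores) against a baseline. The 0.1 and 0.25 thresholds are widely used rules of thumb, assumed here rather than mandated by any regulation.

```python
import bisect
import math


def bin_proportions(values, edges, eps=1e-6):
    """Share of values per bin; out-of-range values are clamped into the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        idx = min(max(idx, 0), len(counts) - 1)  # clamp to the first or last bin
        counts[idx] += 1
    # eps keeps empty bins from producing log(0) or division by zero.
    return [max(c / len(values), eps) for c in counts]


def psi(baseline, recent, edges):
    """Population stability index; larger values mean a larger distribution shift."""
    b = bin_proportions(baseline, edges)
    r = bin_proportions(recent, edges)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))


def drift_action(score, warn=0.1, act=0.25):
    """Map a PSI value to a hypothetical monitoring action."""
    if score >= act:
        return "investigate_or_rollback"
    if score >= warn:
        return "review"
    return "ok"


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline = [0.1, 0.3, 0.6, 0.9] * 25   # evenly spread predicted scores
shifted = [0.9] * 80 + [0.1] * 20      # scores piling up in the top bin
```

In practice the baseline would come from the validation period, and the resulting actions would feed the investigation and rollback workflow described above.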

AI-related safety incidents are integrated into existing patient safety systems, with governance committees analyzing trends and root causes [2][4]. Clinician feedback mechanisms, such as EHR feedback buttons and surveys, provide an avenue for reporting concerns, overrides, or workarounds, helping refine and improve the AI systems over time [2][5]. Together, these strategies ensure AI tools operate safely and effectively throughout their lifecycle.
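Clinician feedback becomes actionable when it is aggregated. The sketch below assumes a hypothetical event log of `(tool_id, action)` pairs captured from EHR feedback and computes each tool's override rate. The 30% review threshold is an illustrative assumption: a high override rate is a signal for committee review, not proof that a model is faulty.

```python
from collections import Counter


def override_rates(events):
    """Share of AI recommendations overridden, per tool.

    Each event is a (tool_id, action) pair where action is "accepted" or "overridden".
    """
    shown = Counter()
    overridden = Counter()
    for tool_id, action in events:
        shown[tool_id] += 1
        if action == "overridden":
            overridden[tool_id] += 1
    return {tool: overridden[tool] / shown[tool] for tool in shown}


def flag_for_review(rates, threshold=0.3):
    """Tools whose override rate exceeds a hypothetical committee threshold."""
    return sorted(tool for tool, rate in rates.items() if rate > threshold)


# Hypothetical feedback log for illustration only.
events = (
    [("sepsis-alert", "overridden")] * 4
    + [("sepsis-alert", "accepted")] * 6
    + [("imaging-triage", "overridden")]
    + [("imaging-triage", "accepted")] * 9
)
rates = override_rates(events)
```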

Managing Continuous Improvement

Continuous improvement hinges on regular reviews and structured feedback. Building on their monitoring and governance processes, healthcare organizations conduct formal reviews to adapt AI systems to evolving clinical needs. High-risk AI tools undergo detailed annual reviews to evaluate updated performance metrics, bias findings, safety incidents, and user feedback [4][5]. Major updates - like retraining, algorithm adjustments, or new features - are treated as new versions, requiring thorough impact analysis and governance approval [5].
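The rule that major updates become new versions can be encoded directly in release tooling. In this hypothetical sketch, retraining, algorithm changes, and new features bump the major version and require governance approval; treating every other change as a minor bump without approval is an assumption for illustration, and many organizations would still route minor changes through a lighter review.

```python
# Change types treated as major updates, per the policy described above.
MAJOR_CHANGES = {"retraining", "algorithm_change", "new_feature"}


def next_version(current: str, change_type: str) -> tuple[str, bool]:
    """Return (new_version, needs_governance_approval) for a MAJOR.MINOR version string."""
    major, minor = (int(part) for part in current.split("."))
    if change_type in MAJOR_CHANGES:
        # A major change starts a new version that needs impact analysis and approval.
        return f"{major + 1}.0", True
    # Assumed policy: other changes (e.g. wording fixes) bump the minor version only.
    return f"{major}.{minor + 1}", False
```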

AI monitoring is increasingly integrated into broader risk management frameworks. Tools like Censinet RiskOps™ enable third-party assessments for AI systems, particularly those handling patient data or clinical applications, ensuring continuous risk reduction across vendors, medical devices, and supply chains [1].

A notable example of the commitment to improvement comes from the American Heart Association, which has allocated $12 million for research in 2025 to test AI delivery strategies across nearly 3,000 hospitals [4]. By embedding feedback loops into daily workflows, healthcare organizations can ensure AI systems evolve responsibly while meeting regulatory and patient safety standards.


Cybersecurity and Third-Party Risk Management for Healthcare AI

AI-Specific Cybersecurity Risks in Healthcare

As AI systems become more integral to U.S. healthcare, they also introduce new cybersecurity challenges. These systems often handle large volumes of protected health information (PHI), connect with electronic health records and medical devices, and rely on intricate data pipelines and cloud infrastructure. This combination creates a wider attack surface, making them prime targets for ransomware and data breaches [2].

AI systems face unique threats that go beyond traditional cybersecurity concerns. For instance, techniques like model inversion and membership inference attacks can extract sensitive PHI from trained models, even when the data has been de-identified. Data poisoning attacks can corrupt training datasets, subtly influencing clinical decisions, while adversarial examples might cause AI systems to misclassify critical diagnostic images. Additionally, when clinicians use generative AI or large language models, risks like prompt injection and data leakage could expose sensitive information. Ransomware attacks on AI-driven workflows further jeopardize patient safety and disrupt care delivery. Tackling these risks is essential to ensure the reliability and secure evolution of AI in healthcare.

Best Practices for Third-Party AI Risk Management

Since many healthcare AI tools are provided by third-party vendors, thorough vendor assessments are crucial. Organizations should require vendors to complete detailed security questionnaires covering key areas like PHI handling, encryption methods, access controls, data segregation, and AI model update protocols. To verify a vendor's security measures, organizations should request independent evidence such as SOC 2 Type II reports, ISO 27001 certifications, penetration testing results, and HIPAA compliance attestations.

Business Associate Agreements (BAAs) provide a framework for managing PHI and ensuring security. These agreements should clearly define PHI ownership, restrict secondary use of data, establish security expectations, outline incident reporting timelines, and include requirements for model explainability and change management.
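Tracking which evidence items and BAA clauses are still outstanding for each vendor is a simple set comparison. The required sets below are a hypothetical organizational policy built from the items named above; in practice, organizations often accept alternatives (for example, either a SOC 2 Type II report or an ISO 27001 certification).

```python
# Hypothetical policy: evidence and BAA clauses this organization requires.
REQUIRED_EVIDENCE = {
    "soc2_type2_report",
    "iso27001_certificate",
    "penetration_test_results",
    "hipaa_attestation",
}
REQUIRED_BAA_CLAUSES = {
    "phi_ownership",
    "secondary_use_restrictions",
    "security_expectations",
    "incident_reporting_timeline",
    "model_explainability",
    "change_management",
}


def vendor_gaps(evidence: set[str], baa_clauses: set[str]) -> dict[str, list[str]]:
    """List the evidence items and BAA clauses a vendor has not yet provided."""
    return {
        "missing_evidence": sorted(REQUIRED_EVIDENCE - evidence),
        "missing_baa_clauses": sorted(REQUIRED_BAA_CLAUSES - baa_clauses),
    }


gaps = vendor_gaps(
    evidence={"soc2_type2_report", "hipaa_attestation"},
    baa_clauses={"phi_ownership", "secondary_use_restrictions",
                 "security_expectations", "incident_reporting_timeline"},
)
```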

Healthcare organizations should also schedule regular reviews of AI tools, conducting annual evaluations for high-risk systems and biennial reviews for those deemed lower-risk. Ongoing monitoring of vendor incident reports, system updates, and new integrations ensures timely responses to emerging risks. These measures lay the groundwork for more efficient risk management, especially when supported by specialized platforms.
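The annual and biennial cadence translates into a due-date calculation. This minimal sketch assumes two tiers named `"high"` and `"lower"` (hypothetical labels) and handles reviews that fell on February 29 by moving the next due date to February 28 in non-leap years.

```python
from datetime import date

# Review interval in years: annual for high-risk tools, biennial for lower-risk tools.
REVIEW_INTERVAL_YEARS = {"high": 1, "lower": 2}


def add_years(d: date, years: int) -> date:
    """Add whole years, mapping Feb 29 to Feb 28 when the target year is not a leap year."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def next_review(last_review: date, risk_tier: str) -> date:
    return add_years(last_review, REVIEW_INTERVAL_YEARS[risk_tier])


def overdue(last_review: date, risk_tier: str, today: date) -> bool:
    return today > next_review(last_review, risk_tier)
```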

Using Platforms for Risk Management

Manual risk assessments are complex, and managing third-party AI risks with spreadsheets quickly becomes unmanageable as the number of vendors and tools grows. Purpose-built platforms streamline the process by automating risk scoring and centralizing documentation.

For example, Censinet RiskOps™ enables healthcare organizations to standardize their risk workflows. It provides tailored security questionnaires, automates evidence collection, and tracks remediation efforts across teams like security, compliance, supply chain, and clinical operations. Acting as a cloud-based risk exchange, the platform connects healthcare providers with a network of over 50,000 vendors, allowing for secure sharing of cybersecurity and risk data. This centralized approach simplifies audits and regulatory reviews, while continuous monitoring flags issues like expiring certifications or unresolved risks. This helps AI governance committees focus their oversight on tools with the highest clinical impact and PHI exposure.

Terry Grogan, CISO of Tower Health, highlights the efficiency gained through such platforms:

"Censinet RiskOps allowed 3 FTEs to go back to their real jobs! Now we do a lot more risk assessments with only 2 FTEs required."

- Terry Grogan, CISO, Tower Health
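The flagging and prioritization that such platforms provide can be illustrated generically. The sketch below is not how Censinet RiskOps™ works internally; it is a hypothetical example that flags certifications expiring within a 60-day window and ranks tools by clinical impact multiplied by PHI exposure, where both 1 to 3 scales and the multiplicative score are assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class VendorTool:
    name: str
    clinical_impact: int  # hypothetical scale: 1 (low) to 3 (high)
    phi_exposure: int     # hypothetical scale: 1 (minimal) to 3 (extensive)
    cert_expiry: date     # expiry of the vendor's most recent certification


def expiring_soon(tools, today: date, window_days: int = 60):
    """Names of tools whose certification expires within the window or has already expired."""
    cutoff = today + timedelta(days=window_days)
    return sorted(t.name for t in tools if t.cert_expiry <= cutoff)


def review_priority(tools):
    """Order tools by clinical impact x PHI exposure, highest first (ties broken by name)."""
    ranked = sorted(tools, key=lambda t: (-(t.clinical_impact * t.phi_exposure), t.name))
    return [t.name for t in ranked]


tools = [
    VendorTool("Chatbot", 1, 2, date(2026, 1, 10)),
    VendorTool("Imaging triage", 3, 3, date(2025, 7, 1)),
    VendorTool("Sepsis alert", 3, 2, date(2025, 12, 31)),
]
```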

Conclusion

AI governance in healthcare plays a crucial role in ensuring patient safety, adhering to regulations, and driving responsible progress. As AI systems take on tasks like managing protected health information, supporting clinical decisions, and working alongside medical devices, healthcare organizations must adopt governance strategies that address ethics, regulations, and cybersecurity at every stage of AI development and deployment. This thoughtful approach not only safeguards patients but also encourages ongoing improvements in how care is delivered.

To make this work, structured frameworks are key to managing AI effectively throughout its lifecycle. Frameworks such as PPTO help turn ethical and regulatory principles into actionable governance strategies. Multi-disciplinary governance committees - which unite clinical, technical, compliance, and security experts - ensure thorough oversight, from evaluating AI systems before deployment to monitoring them afterward. The American Heart Association's pledge of over $12 million in 2025 research funding to explore AI delivery strategies across nearly 3,000 hospitals, including more than 500 rural and critical access facilities, highlights the growing national commitment to implementing AI in a safe and evidence-based way [4].

On top of that, secure operations demand robust cybersecurity measures. Automated tools are essential for managing cybersecurity and third-party risks. Platforms like Censinet RiskOps™ simplify vendor evaluations and enable continuous monitoring, providing the focused oversight needed to maintain secure and reliable AI systems.

FAQs

What are the core ethical principles for governing AI in healthcare?

In healthcare, the ethical use of AI starts with patient safety and the privacy and confidentiality of patient data. These priorities ensure that AI tools put patients' well-being first while safeguarding sensitive information.

For AI systems to be trusted, they need to be transparent and easy to understand. It's also critical that they operate without bias or discrimination, promoting fairness in every decision they support.

Equally important are accountability and human oversight. Healthcare providers and organizations must take ownership of the results generated by AI, keep clinicians in control of critical decisions, and monitor AI systems continuously to uphold ethical practices and regulatory compliance.

What is the PPTO framework, and how does it improve AI governance in healthcare?

The PPTO framework structures AI governance in healthcare around four areas: People (skills and roles), Process (standardized workflows), Technology (monitoring tools), and Operations (ongoing execution). Organizing oversight this way helps ensure that AI technologies are deployed securely, stay compliant with regulations, and adapt over time to meet the changing demands of healthcare organizations.

By concentrating on these essential areas, the PPTO framework helps minimize risks, safeguard sensitive patient information, and uphold the reliability of clinical applications and medical devices throughout the AI system's lifecycle. It’s a practical way to boost operational efficiency while prioritizing patient safety in an industry that’s constantly evolving.

What are the main cybersecurity risks of using AI in healthcare?

AI systems in healthcare come with their own set of cybersecurity challenges, which, if not addressed, could jeopardize patient safety and compromise sensitive information. Some of the most pressing risks include data breaches that reveal private patient records, unauthorized access to protected health information, and weaknesses in AI infrastructure that hackers might exploit. On top of that, issues like manipulation or bias in AI algorithms can skew decision-making, potentially leading to incorrect diagnoses or treatment plans.

To tackle these challenges, healthcare organizations need to adopt strong security protocols, keep a constant watch for cyber threats, and adhere to industry regulations. These steps are crucial for safeguarding both patient trust and the systems that support their care.


Key Points:

What is AI governance in healthcare?

Definition: AI governance in healthcare refers to the structured frameworks, policies, and practices that ensure the ethical, safe, and compliant use of AI technologies in healthcare settings. It encompasses data governance, risk management, and ethical oversight to align AI systems with patient safety and regulatory standards.

Why is AI governance important in healthcare?

Importance:

- Ensures patient safety by mitigating risks associated with AI systems.

- Protects sensitive patient data and ensures compliance with regulations like HIPAA and GDPR.

- Builds trust in AI technologies by promoting transparency and accountability.

- Addresses ethical concerns, such as algorithmic bias and equitable access to AI-enabled care.

What are the key components of AI governance in healthcare?

Key Components:

- Data Governance: Ensures high-quality, well-governed data fuels AI models while respecting data rights and ownership.

- Risk Management: Identifies and mitigates risks associated with AI systems, including cybersecurity vulnerabilities and algorithmic errors.

- Ethical Oversight: Establishes frameworks to address bias, fairness, and equity in AI applications.

- Regulatory Compliance: Aligns AI systems with healthcare regulations like HIPAA, GDPR, and FDA guidelines.

- Continuous Monitoring: Regularly audits AI systems to ensure ongoing safety, performance, and compliance.

What challenges exist in implementing AI governance in healthcare?

Challenges:

- Data Quality Issues: Ensuring datasets are diverse, accurate, and representative to avoid bias.

- Algorithmic Bias: Addressing disparities in AI performance across different patient populations.

- Transparency: Explaining AI decision-making processes to stakeholders, including patients and providers.

- Regulatory Complexity: Navigating varying state, national, and international regulations.

What are the best practices for AI governance in healthcare?

Best Practices:

- Establish governance committees with diverse stakeholders, including clinicians, data scientists, and ethicists.

- Use frameworks like PPTO (People, Process, Technology, Operations) to operationalize governance in a practical, comprehensive way.

- Conduct regular audits to evaluate AI performance, risks, and compliance.

- Foster collaboration between government, industry, and academic entities to accelerate AI validation and adoption.

- Implement monitoring dashboards to track AI system performance and detect anomalies in real-time.

How can healthcare organizations scale AI governance effectively?

Scaling Strategies:

- Centralize governance oversight to ensure consistency across multiple AI applications.

- Automate compliance processes, such as risk assessments and documentation, to reduce manual effort.

- Leverage partnerships with industry organizations like the Coalition for Health AI (CHAI) and academic institutions to develop scalable governance frameworks.

- Use platforms that enable governance scalability, allowing organizations to manage multiple AI systems without multiplying governance efforts.