AI Model Security Testing: Key Steps for HDOs

Post Summary

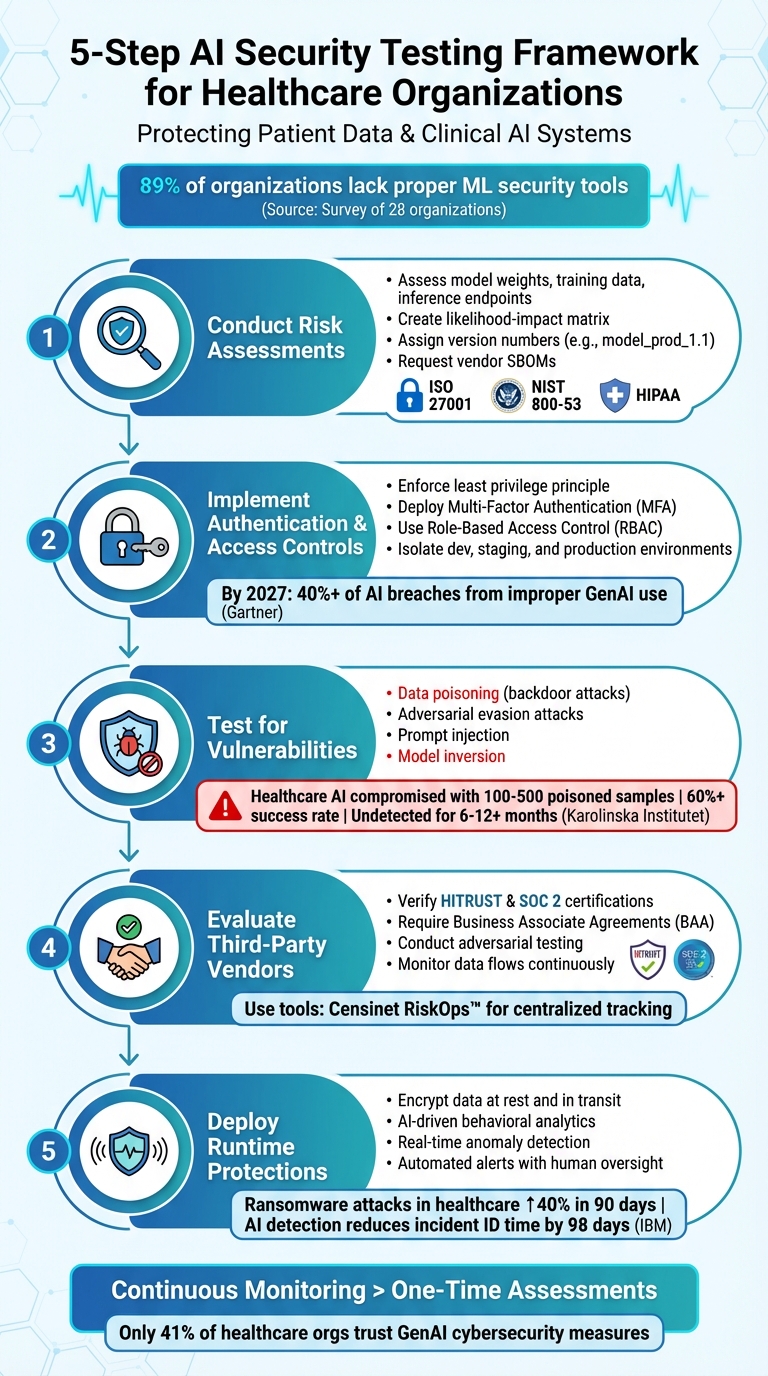

Healthcare Delivery Organizations (HDOs) increasingly rely on AI for critical tasks like diagnostics and patient care, but these systems come with unique security risks. Unlike traditional IT systems, AI is vulnerable to attacks like data poisoning, adversarial inputs, and model inversion, which can compromise patient safety and data privacy.

Here’s what you need to know to secure AI in healthcare:

- AI-specific threats: Data poisoning can introduce backdoors with as little as 0.025% of corrupted samples, leading to systematic errors in clinical decisions.

- Regulatory challenges: Laws like HIPAA and GDPR can unintentionally hinder anomaly detection, complicating efforts to safeguard AI systems.

- Supply chain risks: Third-party AI models can expose multiple institutions to attacks, requiring rigorous vendor evaluations for third-party AI.

- Testing and validation: Regular adversarial testing and runtime protections are critical to identifying vulnerabilities and maintaining secure operations.

5-Step AI Security Testing Framework for Healthcare Organizations

AI's Role in Healthcare Cybersecurity

sbb-itb-535baee

Step 1: Conduct Risk Assessments for AI Models

When it comes to securing AI systems, a traditional network or access risk assessment simply won’t cut it. Instead, an AI-specific Security Risk Analysis (SRA) must cover the entire lifecycle - starting from data collection and training to deployment and monitoring. Why? Because AI models face unique threats that can jeopardize sensitive patient data and even influence clinical decisions.

This is especially critical in healthcare. A survey of 28 organizations, including Fortune 500 companies and government entities, revealed that 89% (25 out of 28) lacked proper tools to secure their machine learning systems [4]. This gap leaves healthcare systems vulnerable to attacks that traditional security frameworks often miss.

"Failing to adequately secure AI systems increases risk to not only the AI systems addressed in this assessment, but more generally to the entire information technology and compliance environment." - Microsoft [4]

Let’s break down the key components of an effective AI model risk assessment.

What to Include in an AI Model Risk Assessment

AI systems come with their own set of vulnerabilities. Your risk assessment should focus on these unique aspects, starting with potential attack surfaces like model weights, decision logic, training data pipelines, and inference endpoints. These areas can be targeted through methods such as:

- Model poisoning: Inserting malicious backdoors into training data.

- Prompt injection: Exploiting security gaps via harmful input.

- Adversarial examples: Altering inputs to manipulate model outputs.

Data integrity is the cornerstone of AI security. Use cryptographic hashes to uniquely identify datasets and ensure they haven’t been tampered with. This is especially important to guard against poisoning attacks. Additionally, keep a close watch on data sources, requiring management approval for any new ones.

To prioritize risks, create a likelihood-impact matrix. For healthcare AI, risks should be classified as "Critical" if they involve HIPAA-regulated data, applications with potential physical harm, or systems supporting essential health services. This approach ensures that resources are focused on the most pressing threats, protecting both clinical decisions and patient safety.

Set clear risk tolerance thresholds to maintain reliability. For instance, you might decide that no personally identifiable information (PII) should appear in model outputs, or that any drop in accuracy beyond 3% triggers an immediate rollback. These benchmarks provide measurable standards for monitoring and maintaining clinical performance.

Version control is another must-have. Assign distinct version numbers (e.g., "model_prod_1.1") each time a model is retrained. This makes it easier to trace the source of any issues and investigate incidents. It also helps track data sources and configurations across different iterations of the model.

Don’t overlook supply chain risks. Request Software Bills of Materials (SBOMs) from vendors to identify vulnerabilities in pre-trained models or libraries. Maintain an AI asset register that tracks model architecture, training data sources, and versioning to prevent unauthorized models from slipping through the cracks.

Finally, align all these technical measures with healthcare-specific regulatory requirements.

Meeting Healthcare Regulatory Requirements

To meet healthcare standards, map your AI risk assessments to established frameworks like ISO 27001, NIST 800-53, NIST AI Risk Management Framework (RMF), ISO/IEC 42001, or HIPAA. These frameworks ensure comprehensive risk management throughout the AI lifecycle, which is essential under HIPAA and emerging regulations like the EU AI Act.

For AI systems handling Protected Health Information (PHI), ensure they are isolated from research environments. Map out data flows to prevent unauthorized exposure of PII or PHI. This separation is critical because a compromised AI system in healthcare could lead to misdiagnoses or the exposure of sensitive medical records.

"A compromised AI system in healthcare could affect patient diagnoses, while poisoned financial models might enable fraud or create regulatory violations." - SentinelOne [5]

Network segregation adds another protective layer. Use gateway devices to filter traffic between domains and block unauthorized access to machine learning infrastructure. Additionally, restrict AI research or development accounts from accessing production databases to avoid cross-contamination and data leaks.

After deployment, establish quarterly review cycles and set up automated alerts to detect model drift or emerging vulnerabilities. This continuous monitoring aligns with HIPAA’s requirement for ongoing risk analysis and helps identify signs of poisoning or performance degradation.

If your organization uses Censinet RiskOps™, its AI risk dashboard can centralize all AI-related policies, risks, and tasks. Findings and action items can be routed to the appropriate stakeholders, like members of the AI governance committee, ensuring timely responses and accountability. This centralized system not only streamlines risk management but also strengthens your overall AI security framework.

Lastly, document all administrative controls tied to AI and information security. Regularly reviewing model training code and design reduces risks to availability, integrity, and confidentiality. These documented policies not only enforce consistent security practices but also provide evidence of compliance during audits. This level of diligence is crucial for maintaining trust and meeting regulatory expectations.

Step 2: Implement Authentication and Access Controls

After evaluating your AI model's risks, the next step is to manage who can access your AI systems and what they’re allowed to do. Authentication and access controls act as the first defense against breaches or unauthorized access. Gartner predicts that by 2027, over 40% of AI-related data breaches will result from the improper use of generative AI across borders [9]. In industries like healthcare, where 71% of organizations are expected to use generative AI in at least one business function by 2025 [9], these safeguards are absolutely critical.

Both the UK National Cyber Security Centre (NCSC) and the US Cybersecurity and Infrastructure Security Agency (CISA) stress the importance of ongoing security measures:

"Security must be a core requirement, not just in the development phase, but throughout the life cycle of the system" [6].

Your authentication strategy needs to account for every phase: design, development, deployment, and operation [7]. A weak link in any one of these areas could compromise the entire system. Below, we explore specific ways to implement access controls effectively.

Access Control Methods

Start by enforcing the principle of least privilege. This means granting users only the permissions they need for their specific role. For instance, a data scientist working on model training should only have access to designated storage buckets and specific model registries [8].

"Users must have only the minimum permissions that are necessary to let them perform their role activities" [8].

Multi-Factor Authentication (MFA) is another must-have. With phishing schemes like "Tycoon 2FA" targeting healthcare credentials [6], MFA provides an extra layer of security by requiring users to verify their identity through multiple methods before accessing sensitive systems or data.

Role-Based Access Control (RBAC) is also essential. This system assigns permissions based on job responsibilities - whether someone is a clinician, data engineer, or compliance officer. For example, RBAC can restrict access to data lakes, feature stores, or model hyperparameters based on the user's role [8].

To take this further, use context-aware policies. These evaluate authentication requests based on factors like user identity, IP address, or device attributes at the time of access [8]. Conditional IAM policies can require specific request origins or trusted build triggers before allowing a service account to execute a pipeline. These extra layers of security go beyond what standard RBAC can provide.

For MLOps pipelines, use service accounts that are limited to interacting with pre-approved resources during training and model registration [8]. Add Just-in-Time (JIT) elevation to grant temporary access privileges, reducing the risk of prolonged exposure or unauthorized actions [8].

Finally, digitally sign artifacts like packages and containers to ensure only verified code reaches production environments [8]. Logging access details also helps with compliance and tracking.

Isolating AI Model Environments

In addition to strong authentication, isolating your AI environments ensures that a breach in one area doesn’t affect critical systems. Keeping development, staging, and production environments separate is key. Experimental code or untested models should never interact with live healthcare operations [7][10].

At the cloud account level, dedicate specific accounts for processing Protected Health Information (PHI). Strictly enforce policies to ensure that health data doesn’t enter development or testing accounts [12]. As RAND points out:

"AI security is not static - risks evolve throughout the lifecycle of your system, and adversaries target different components at each phase" [7].

Use tools like virtual networks (VNETs), private links, and network segmentation to isolate AI system communications from broader clinical networks. Secure file systems that store sensitive health data using security groups and operating system-level permissions [11][12].

Container and pipeline hardening can further reduce vulnerabilities. Use hardened containers, such as distroless images, and reproducible environments like virtualenv to isolate training and inference jobs [10]. Protect inference APIs with authentication, rate limiting, and input validation [7][11].

Don’t forget about orphaned deployments - outdated models left in production without updated controls. Regularly scan for and remove these assets [10]. Instead of storing or hardcoding credentials, use managed identities for secure authentication between AI components [11]. These isolation strategies ensure that even if one environment is compromised, the breach won’t spread to systems handling live patient data.

Step 3: Test AI Models for Vulnerabilities

Once you've secured access and isolated environments, the next critical step is testing your AI models for weaknesses. Adversarial testing mimics real-world attacks, helping you uncover vulnerabilities before they can be exploited.

The risks are especially pronounced in healthcare. Research from Karolinska Institutet reveals that healthcare AI systems can be compromised with as few as 100 to 500 poisoned samples, achieving success rates of 60% or more. Alarmingly, such breaches can go undetected for 6 to 12 months - or even longer [2][3]. Dr. Farhad Abtahi of Karolinska Institutet highlights:

"Attack success depends on the absolute number of poisoned samples rather than their proportion of the training corpus, a finding that fundamentally challenges assumptions that larger datasets provide inherent protection" [2].

Traditional security tools often fail to address AI-specific threats like backdoored models or adversarial examples, emphasizing the need for testing methods tailored to AI systems.

Testing Methods for AI Models

When testing, prioritize these common attack vectors:

- Data Poisoning (Backdoor Attacks): Injecting malicious samples into training data creates backdoors, causing models to misbehave under specific triggers. For instance, in breast cancer detection models, a 10% label-flipping attack reduced diagnostic accuracy from over 97% to 89.47% [13].

- Adversarial Evasion Attacks: Subtle changes to inputs, such as medical images, during inference can drastically lower accuracy - dropping it from 97.36% to 61.40% in some cases [13].

- Prompt Injection: For Large Language Models used in clinical tasks, attackers can craft manipulative instructions (e.g., "ignore previous instructions") to bypass safeguards, potentially leading to unauthorized actions or exposure of sensitive data [14][15].

- Membership Inference and Model Inversion: These attacks can identify if a specific patient's data was used in training or even reconstruct sensitive details. Mitigate these risks with strict API rate limits and rounding confidence values [16].

- Supply Chain Vulnerabilities: Using third-party foundation models introduces systemic risks. A single vendor breach could affect 50 to 200 healthcare institutions [1].

Testing should occur in isolated environments that replicate production conditions without live patient data. Use "shadow models" to monitor responses in real-world scenarios without disrupting clinical operations [10]. Testing through system APIs ensures that safeguards like input filtering and rate limiting are properly evaluated [14].

Leverage tools like ART or MITRE's Armory to simulate large-scale attacks. Since AI models are inherently non-deterministic, run tests multiple times with consistent configurations for reliable results [14].

The insights gained from these tests are the foundation for creating effective security protocols.

Using Test Results to Create Security Protocols

Testing is only valuable if vulnerabilities are addressed promptly. Document findings in a risk register and prioritize them using a likelihood-impact matrix tailored to healthcare contexts and regulations [5]. For example, if your radiology AI is vulnerable to adversarial perturbations, techniques like input denoising and feature squeezing can help reduce the attack surface [16].

Adopt a layered defense strategy by combining technical controls with governance measures. Use an iterative approach - test, fix, and validate vulnerabilities. For prompt injection risks, implement input sanitization, structured prompt templates, and output moderation [16]. To counter data poisoning, deploy anomaly sensors to monitor training data for irregularities [16]. As Kush Varshney from IBM Research puts it:

"If we don't have that trust in those models, we can't really get the benefit of that AI in enterprises" [17].

Pair attack inputs with automated detection systems, such as tools for identifying sensitive data patterns or API-level filtering, to ensure scalability. When working with sensitive patient data, techniques like differential privacy or neuron dropout can mitigate membership inference risks [16][10]. Keep detailed logs of AI system behaviors and decisions to support accountability and regulatory compliance [17].

For third-party AI vendors, request a Software Bill of Materials (SBOM) to validate the integrity of pre-trained models and dependencies [5].

Platforms like Censinet RiskOps™ can simplify these processes by integrating robust testing into a broader risk management framework designed for healthcare organizations. Incorporate these protocols into a continuous cycle of security improvement.

Step 4: Evaluate Third-Party AI Vendors

Once you've tested your internal AI models for vulnerabilities, it's time to turn your attention outward. Third-party vendors play a significant role in your AI ecosystem, and their security practices can directly impact your organization.

Every time you integrate a third-party AI API, you increase the potential exposure of Protected Health Information (PHI). Standard tools often fail to catch risks like prompt injection or data leakage, making a thorough evaluation of these vendors absolutely essential. Without proper vetting, these systems can introduce unauthorized use of patient data or compliance issues, leaving your organization exposed to legal and financial repercussions. Relying on annual vendor questionnaires alone isn't enough to keep up with the fast-changing threat landscape.

How to Assess Vendor Risk

Start by requesting HITRUST and SOC 2 certifications from any vendor you work with. HITRUST certification ensures that the vendor adheres to healthcare-specific security controls aligned with HIPAA, while SOC 2 certification covers broader trust criteria like security, availability, confidentiality, and privacy. These certifications provide concrete evidence of the vendor's security measures.

Additionally, make sure that every vendor handling PHI signs a Business Associate Agreement (BAA). This legally binds them to HIPAA standards and should clearly outline their responsibilities, including breach notification procedures and the principle of using only the minimum necessary data. To further protect your data, include contractual clauses that explicitly prohibit vendors from using your healthcare data to train or enhance their AI models without your written consent. For more specialized applications, like precision medicine or genomics, carefully map out data flows to identify risks of re-identification and ensure ethical data management.

Don't stop at paperwork. Conduct adversarial testing to uncover vulnerabilities in the vendor's systems. This includes testing for jailbreak scenarios, prompt manipulation, and bias across different patient demographics. Review their audit logs for any unusual activity. For example, a surgical robotics company partnered with HIPAA Vault to secure cloud-based AI video processing, achieving faster incident response times by leveraging AI-enhanced threat detection and automated alerts. This kind of hands-on testing can reveal how well a vendor's systems perform in real-world conditions.

By taking these steps, you'll not only protect PHI but also strengthen your overall compliance and security posture. Once the initial evaluation is complete, keep monitoring vendors to ensure their practices remain up to par.

Monitoring Vendors and Tracking Compliance

A one-time assessment only provides a snapshot of a vendor's security posture. Over time, their practices and systems may change, which is why continuous monitoring is essential. Real-time oversight allows you to track data flows, model behavior, and adherence to policies. Key metrics to monitor include authentication status, rate limiting, error responses, hallucination rates, and unusual patterns in usage or latency. AI-driven Security Information and Event Management (SIEM) systems can flag suspicious activity, track blocked PHI instances, and maintain audit trails that comply with HIPAA's continuous monitoring requirements.

Platforms like Censinet RiskOps™ make this process more manageable by centralizing risk assessments, compliance tracking, and anomaly detection. Designed specifically for healthcare, this tool automates the collection of audit evidence, monitors vendor certifications, and generates immutable logs that support HIPAA audits and Joint Commission reviews. By replacing outdated, manual assessments with continuous, AI-powered monitoring, you can scale oversight across your entire vendor ecosystem.

Establish baseline performance profiles for each vendor's AI systems and investigate any significant deviations that might signal a problem. A hybrid approach, combining automated alerts with human oversight, ensures that ethical standards are upheld. Security experts can review flagged issues to provide the necessary judgment and context, allowing for better decision-making in sensitive healthcare environments. Balancing automation with human expertise is key to maintaining trust and ensuring patient safety.

Step 5: Deploy Runtime Protections and Ongoing Validation

To secure your AI models during operation, it's essential to implement runtime protections and continuous validation. Runtime protections work in real-time to safeguard AI models as they process sensitive patient data. Meanwhile, ongoing validation ensures these protections stay effective as new threats emerge. Together, these measures provide an active defense layer that complements earlier risk assessments and access controls, offering real-time security.

Healthcare organizations are navigating an increasingly hostile threat environment. For instance, ransomware attacks in healthcare have surged by 40% in just the past 90 days, underscoring the need for strong runtime defenses that can identify and respond to threats as they arise [18]. Combining technical safeguards with constant monitoring is critical to adapting to these threats without compromising patient care.

Security Measures for AI Models

To protect AI systems, encrypt data both at rest and in transit, and sanitize all inputs to prevent malicious payloads from infiltrating systems. At runtime, this approach blocks attacks like adversarial examples designed to manipulate diagnoses or predictions. Techniques such as boundary testing can also help identify manipulated medical images [20].

Regularly patch AI frameworks and infrastructure to address vulnerabilities, as gaps in GenAI controls remain a significant concern [19]. Use version control systems with rollback capabilities, and test all patches in isolated environments before deployment. Additionally, cryptographic verification of training data sources can help prevent poisoning attacks, while segregating training environments from production systems adds another layer of security.

Static measures alone aren’t enough. Continuous oversight is crucial to detect and address emerging threats effectively.

Real-Time Monitoring and Risk Management

Real-time monitoring is key to detecting security breaches as they occur. AI-driven behavioral analytics can establish baseline access patterns and flag anomalies, such as unusual activity within electronic health records (EHRs), which could indicate a breach. Research from IBM shows that AI-enhanced detection can reduce the time to identify incidents by 98 days, enabling faster responses to potential threats [18]. Statistical process control can also track AI performance and detect when outputs deviate from expected patterns, signaling potential manipulation or system degradation.

For example, a leading surgical robotics company integrated AI-driven security into its cloud-based video processing system for medical data. This approach led to a 70% reduction in incident response times, thanks to real-time threat detection [18]. By using machine learning models and automated alerts, healthcare organizations can build scalable, HIPAA-compliant infrastructures that enhance security.

Platforms like Censinet RiskOps™ further simplify these efforts. By automating risk assessments and streamlining third-party vendor risks, this tool enables collaborative risk management for patient data and clinical AI applications. It also provides cybersecurity benchmarking and continuous vendor compliance monitoring, shifting the focus from one-time assessments to continuous, AI-powered risk management.

To ensure ongoing security, establish baseline performance profiles for each AI model and investigate any significant deviations. Employ ensemble models that compare outputs from multiple systems to detect discrepancies, which can signal manipulation. For high-stakes decisions, incorporate human oversight and maintain audit trails to comply with HIPAA regulations. This balanced approach integrates both technical safeguards and human judgment, ensuring patient safety and maintaining trust in AI systems. It also closes the security loop, ensuring earlier protections remain effective during live operations.

Conclusion: Creating a Secure AI Framework for HDOs

Main Points to Remember

Protecting AI models in healthcare demands a layered strategy that blends technical measures with ongoing oversight. Start by conducting AI risk assessments to identify weak points and ensure compliance with regulations like HIPAA and FDA Section 524B. Strengthen security through robust authentication protocols, regular vulnerability testing, and thorough evaluation of third-party vendors. Interestingly, only 41% of healthcare respondents currently trust GenAI cybersecurity measures, highlighting the need for improvement [19].

Switching from one-time assessments to continuous monitoring is a game-changer for managing AI security in healthcare. Tools like real-time behavioral analytics and automated alerts can detect threats faster, while human oversight remains essential for ethical decisions in critical clinical situations. This balanced approach combines automation with clinical expertise, maintaining patient trust and meeting Joint Commission standards. However, AI security isn’t static - it requires constant evolution to address new risks.

Preparing for Future AI Security Threats

While today’s safeguards address current vulnerabilities, tomorrow’s threats require forward-thinking solutions. Emerging challenges include quantum computing’s potential to disrupt encryption, risks tied to edge computing and IoMT devices, and increasingly advanced adversarial AI techniques. To stay ahead, healthcare organizations should start preparing now by planning for post-quantum cryptography, mapping data flows for technologies like genomic medicine, and creating incident response protocols tailored to healthcare-specific risks.

Platforms like Censinet RiskOps™ offer tools to tackle these future challenges. By automating risk assessments, tracking vendor compliance in real-time, and managing risks tied to PHI and clinical AI applications, the platform helps healthcare organizations shift from manual, periodic reviews to continuous, AI-driven risk management. This not only saves time but also strengthens security. By centralizing risk management, healthcare providers can focus on patient care while remaining vigilant in protecting sensitive medical data in an increasingly complex digital landscape.

FAQs

How can we tell if our AI model is leaking PHI?

To determine whether your AI model is exposing PHI (Protected Health Information), you need to focus on rigorous security assessments. Start by evaluating safeguards such as encryption protocols and access control mechanisms. These measures are essential for keeping sensitive data secure.

Additionally, adversarial testing plays a critical role. This involves simulating potential attacks to identify weak spots that could lead to data breaches. By uncovering vulnerabilities early, you can address them before they become real threats.

Regular testing is key. It helps ensure your model aligns with data protection standards and reduces the risk of exposing sensitive patient information.

What’s the fastest way to test a clinical AI model for poisoning or adversarial attacks?

The fastest way to evaluate a clinical AI model for potential poisoning or adversarial attacks is by simulating harmful behaviors through security testing techniques. This involves methods like model performance validation, adversarial input testing, and penetration testing. These strategies are specifically aimed at pinpointing weaknesses quickly while maintaining the model's integrity and ability to withstand threats.

What should we require from an AI vendor before sharing any PHI?

Before disclosing any Protected Health Information (PHI), it's critical to take several precautions with your AI vendor. First, ensure they provide signed Business Associate Agreements (BAAs), which legally bind them to protect sensitive data. Next, verify their security measures and confirm they are compliant with HIPAA regulations. It's also wise to have their AI tools validated through external reviews to assess their reliability and safety. Lastly, make sure they commit to continuous risk monitoring to address any emerging vulnerabilities. These actions are essential for protecting sensitive information and meeting healthcare regulatory standards.