AI-Powered Risk Prediction: Healthcare Use Cases

Post Summary

AI is transforming healthcare cybersecurity by predicting risks like data breaches, ransomware, and device vulnerabilities. Hospitals and healthcare providers face unique challenges due to their reliance on electronic health records (EHRs), connected devices, and vast amounts of sensitive patient data. AI-driven models analyze patterns in real-time data - like EHR logs, network activity, and device telemetry - to detect potential threats early and prevent major disruptions.

Key highlights:

- Healthcare Cyber Risks: High-value patient data, outdated systems, and increased attack surfaces make healthcare a prime target for cyberattacks.

- AI Applications: AI identifies suspicious EHR access, predicts ransomware, and flags vulnerable medical devices.

- Techniques Used: Machine learning methods - like supervised and unsupervised learning - process diverse data sources to improve risk detection accuracy.

- Benefits: Faster threat detection, fewer false alerts, and stronger support for compliance with regulations like HIPAA and the HITECH Act.

- Tools in Action: Platforms like Censinet RiskOps™ streamline vendor risk management and assess vulnerabilities across healthcare systems.

AI is reshaping how healthcare organizations protect patient data and ensure operational safety by integrating predictive insights into cybersecurity workflows.

How AI Works in Healthcare Cyber Risk Prediction

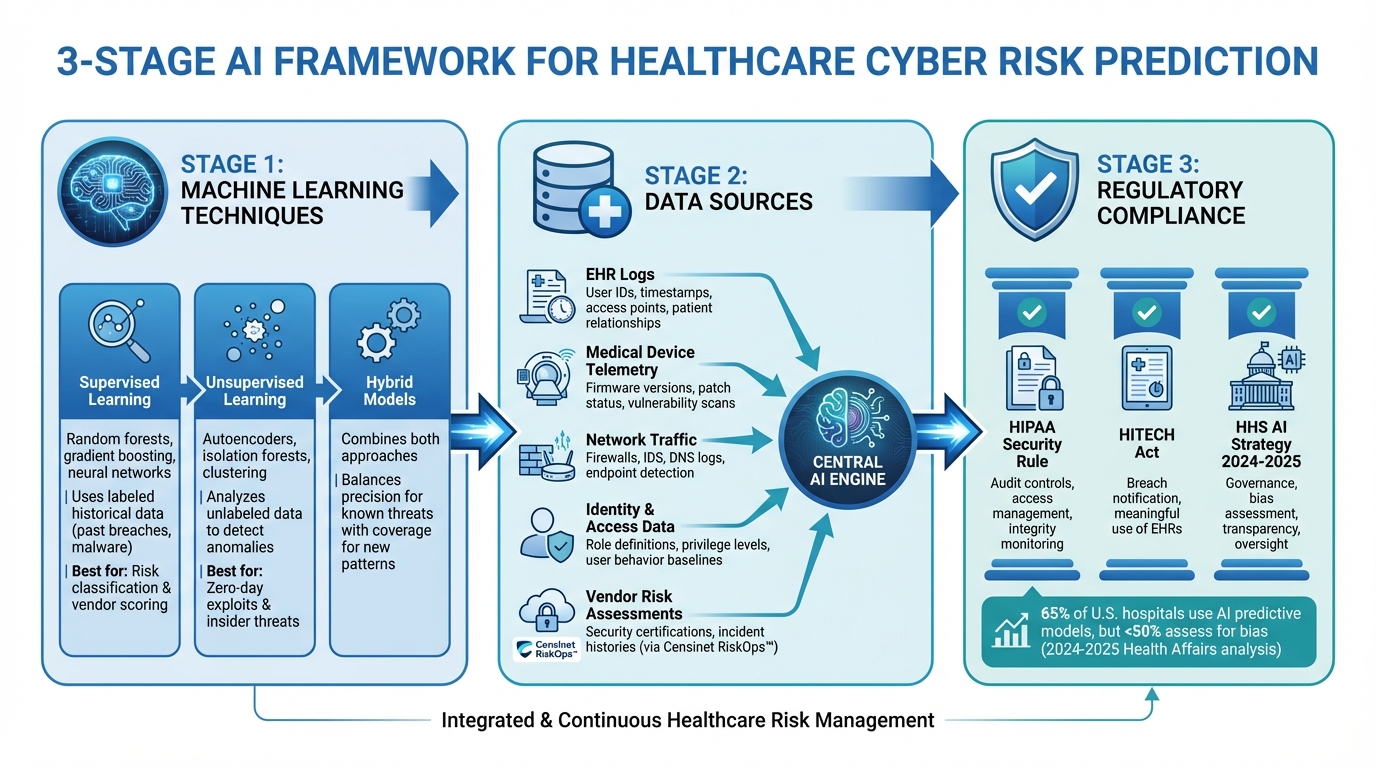

How AI Predicts Healthcare Cyber Risks: 3-Stage Framework

Machine Learning Techniques for Risk Prediction

Healthcare organizations rely on three core approaches: supervised learning, unsupervised anomaly detection, and hybrid models. Supervised learning methods - like random forests, gradient boosting, and neural networks - use labeled historical data, such as past breaches, malware incidents, or unauthorized access cases, to identify and classify risks. These models excel at assigning risk levels and supporting vendor risk scoring based on known patterns.[3]

Unsupervised methods, on the other hand, operate without labeled datasets. Tools like autoencoders, isolation forests, and clustering algorithms analyze large sets of unlabeled data - such as network flows, device telemetry, and user behavior - to determine what constitutes "normal" activity. Deviations from this baseline are flagged as potentially suspicious, making this approach especially useful for identifying zero-day exploits or insider threats where examples are sparse.[3]
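Here is a minimal sketch of the unsupervised idea. It learns a "normal" baseline from unlabeled history and scores each new observation by its distance from that baseline. A simple z-score stands in for the isolation forests or autoencoders mentioned above. The daily access counts and the 3.0 threshold are hypothetical values chosen for illustration.

```python
from statistics import mean, stdev


def fit_baseline(history: list[float]) -> tuple[float, float]:
    """Learn 'normal' from unlabeled history: mean and sample standard deviation."""
    return mean(history), stdev(history)


def anomaly_score(value: float, baseline: tuple[float, float]) -> float:
    """Absolute z-score: how many standard deviations `value` sits from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly constant history: any change at all is maximally unusual.
        return 0.0 if value == mu else float("inf")
    return abs(value - mu) / sigma


# Hypothetical daily counts of patient records opened by one user (no labels needed).
history = [22, 25, 19, 24, 21, 23, 20, 26, 22, 24]
baseline = fit_baseline(history)

THRESHOLD = 3.0  # assumption: flag values more than 3 standard deviations from normal
for today in (23, 140):
    score = anomaly_score(today, baseline)
    print(today, round(score, 1), "FLAG" if score > THRESHOLD else "ok")
```

A z-score assumes roughly unimodal behavior. Richer methods are used in practice partly because real activity is seldom that simple.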

Hybrid models combine the strengths of both approaches. For instance, unsupervised techniques can detect unusual behavior, which is then fed into supervised models trained on past investigation outcomes. Another common setup integrates rule-based systems with machine learning to fine-tune risk thresholds. This layered approach balances precision on known threats with broad coverage of new attack patterns, helping security teams focus on the most critical risks. How well any of these methods performs depends on the breadth and quality of the data sources feeding them.
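The layered setup can be sketched in a few lines. The sketch starts from alerts an anomaly detector has already raised and works in three steps:

- Each alert is bucketed by its anomaly score.
- A supervised step estimates, for each bucket, the share of past alerts that investigators confirmed as real incidents. Laplace smoothing keeps sparse buckets away from 0 and 1.
- A rule layer escalates certain events regardless of score.

The supervised step is a frequency-table learner, not a random forest. The bucket cut points, the probability thresholds, and the `restricted_record` rule are hypothetical choices made for illustration.

```python
from collections import defaultdict


def bucket(score: float) -> int:
    """Coarse anomaly-score bucket (assumed cut points): 0 = <4, 1 = 4-6, 2 = >=6."""
    return 0 if score < 4 else 1 if score < 6 else 2


def fit_outcome_rates(past_alerts: list[tuple[float, bool]]) -> dict[int, float]:
    """Supervised step: estimate P(confirmed incident | bucket) from labeled past
    investigations. Laplace smoothing keeps sparse buckets away from 0 and 1."""
    counts = defaultdict(lambda: [0, 0])  # bucket -> [confirmed, total]
    for score, confirmed in past_alerts:
        c = counts[bucket(score)]
        c[0] += int(confirmed)
        c[1] += 1
    return {b: (counts[b][0] + 1) / (counts[b][1] + 2) for b in range(3)}


def triage(score: float, restricted_record: bool, rates: dict[int, float]) -> str:
    """Rule layer first; otherwise the learned outcome rate picks the queue."""
    if restricted_record:  # hypothetical rule: restricted records always escalate
        return "escalate"
    p = rates[bucket(score)]
    if p >= 0.7:
        return "escalate"
    if p >= 0.3:
        return "analyst_review"
    return "log_only"


# Hypothetical past alerts: (anomaly score, confirmed by investigators?)
past = [(3.2, False), (3.5, False), (3.9, False), (4.1, False), (4.8, True),
        (5.5, False), (6.3, True), (7.0, True), (8.2, True), (9.4, True)]
rates = fit_outcome_rates(past)
print(triage(3.3, False, rates), triage(5.0, False, rates), triage(8.0, False, rates))
```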

Data Sources Used for Risk Prediction

The success of cyber risk prediction in healthcare hinges on consolidating data from multiple sources across the organization. EHR logs are a crucial resource. They capture details like user IDs, timestamps, access points, the user's treatment relationship to the patient, and the types of data accessed, all of which help identify unauthorized access attempts. Medical device telemetry provides information on firmware versions, patch status, communication behavior, and vulnerability scan results, helping prioritize devices by risk and clinical importance.[3]

Other key data streams include real-time network traffic from firewalls, intrusion detection systems, DNS logs, and endpoint detection tools, which help models predict threats like ransomware and malware. Identity and access management data - such as role definitions, privilege levels, shift schedules, and user behavior baselines - enhances the detection of insider risks. Additionally, vendor and third-party risk assessments supply structured data, including security certifications and incident histories. Tools like Censinet RiskOps™ centralize these assessments, creating a standardized dataset for AI-driven vendor risk scoring and benchmarking tailored to healthcare's needs.

Regulatory and Ethical Requirements

Implementing these advanced AI techniques requires compliance with strict regulatory and ethical standards. The HIPAA Security Rule mandates safeguards for protecting electronic PHI, including audit controls, access management, and integrity monitoring - areas where AI can significantly improve compliance efforts.[3][5] The HITECH Act further enforces breach notification requirements and incentivizes the meaningful use of EHRs, which generate the data needed for AI systems.

The U.S. Department of Health and Human Services' 2024–2025 AI strategy positions AI as a critical tool for advancing healthcare while emphasizing governance to ensure safety, privacy, and security.[4] This includes guidelines for assessing bias, ensuring transparency, and maintaining oversight throughout an AI system's lifecycle. Healthcare organizations must avoid unfair outcomes, such as biased risk scoring of clinicians or departments, by maintaining algorithmic transparency and fairness.[7][5]

A 2024–2025 analysis published in Health Affairs revealed that while 65% of U.S. hospitals use AI-assisted predictive models, fewer than half formally assess these models for bias.[7] This highlights the urgent need for rigorous governance practices, including model validation, monitoring, and documentation, to ensure AI tools enhance security without sacrificing fairness or accountability.

AI Use Cases for Cyber Risk Prediction in Healthcare

Detecting Unauthorized EHR Access

AI-powered user and entity behavior analytics (UEBA) play a critical role in monitoring how healthcare professionals - clinicians, staff, and administrators - interact with electronic health records (EHRs). These systems establish personalized behavior patterns for each user, tracking details like which patient records they access, the times and locations of access, and the devices they typically use. When someone deviates from these established norms - say, by accessing records during unusual hours or from an unexpected location - the AI flags the activity for immediate review by privacy and security teams.

What sets these systems apart is their use of contextual data to reduce false alarms. For instance, they link access events to scheduled appointments, active orders, or documented consults, which lets them distinguish legitimate emergency access from inappropriate snooping. They also weigh shift schedules, department assignments, and on-call status, so these models can more reliably separate insider threats and unauthorized access from routine clinical work. Healthcare organizations using such tools report catching privacy violations early, before they escalate into major data breaches. This supports HIPAA compliance and strengthens patient trust.
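A stripped-down version of this contextual check might look like the following. It is a rule-style sketch, not a trained UEBA model. The baseline values (usual hours, usual workstations) are assumed to come from a behavioral profile built elsewhere. The `care_team` mapping stands in for the appointments, orders, and consults pulled from the EHR. All class and field names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class UserProfile:
    user_id: str
    usual_hours: range          # learned from history, e.g. range(7, 19) = 07:00-18:59
    usual_workstations: set[str]
    on_call: bool = False       # from the on-call / shift schedule


@dataclass
class AccessEvent:
    user_id: str
    patient_id: str
    workstation: str
    timestamp: datetime


def review_reasons(event: AccessEvent, profile: UserProfile,
                   care_team: dict[str, set[str]]) -> list[str]:
    """Return reasons to route an EHR access to privacy review (empty = no flag)."""
    # Context first: a documented care relationship explains the access.
    if event.user_id in care_team.get(event.patient_id, set()):
        return []
    reasons = []
    if event.timestamp.hour not in profile.usual_hours and not profile.on_call:
        reasons.append("off-hours access with no care relationship")
    if event.workstation not in profile.usual_workstations:
        reasons.append("unfamiliar workstation with no care relationship")
    return reasons
```

This sketch does not flag an in-hours access from a familiar workstation, even when no care relationship exists. Real deployments layer on more signals than these two.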

Predicting Ransomware and Malware Attacks

AI is increasingly being used to predict and mitigate ransomware and malware threats by analyzing network traffic, endpoint behavior, and email activity for early warning signs. These models establish baselines for normal system behavior - such as typical file access patterns, process creation activities, and communication flows across networks. Any deviations, like sudden increases in lateral movement, unusual SMB or RDP activity, rapid file changes, or attempts to disable backup systems, are flagged as potential threats. Often, these warning signs appear hours or even days before ransomware fully deploys, giving security teams a critical window to isolate affected systems and protect operations.
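The sketch below illustrates two of the early-warning signals named above:

- Bursts of file changes, caught by a sliding-window rate monitor.
- Attempts to disable backups, caught by a command-line pattern check.

The baseline rate, the window, and the 10x multiplier are assumed values. The two patterns (`vssadmin delete shadows`, `wbadmin delete catalog`) are commonly cited examples of backup tampering on Windows, not a complete detection set.

```python
import re
from collections import deque


class FileChangeRateMonitor:
    """Flags a host when file modifications inside a sliding time window exceed
    `multiplier` times its learned baseline rate (all parameters are assumptions)."""

    def __init__(self, baseline_per_min: float, window_s: float = 60.0,
                 multiplier: float = 10.0):
        self.window_s = window_s
        self.limit = baseline_per_min * (window_s / 60.0) * multiplier
        self.events: deque[float] = deque()

    def observe(self, ts: float) -> bool:
        """Record one modification at time `ts` (seconds); True if over the limit."""
        self.events.append(ts)
        # Drop events that have fallen out of the window.
        while self.events and self.events[0] <= ts - self.window_s:
            self.events.popleft()
        return len(self.events) > self.limit


# Commonly cited Windows commands for deleting shadow copies / backup catalogs.
BACKUP_TAMPERING = [
    re.compile(r"vssadmin(\.exe)?\s+delete\s+shadows", re.IGNORECASE),
    re.compile(r"wbadmin(\.exe)?\s+delete\s+catalog", re.IGNORECASE),
]


def is_backup_tampering(cmdline: str) -> bool:
    """True if a process command line matches a known backup-tampering pattern."""
    return any(p.search(cmdline) for p in BACKUP_TAMPERING)
```

In practice, signals like these would be correlated with network indicators such as unusual SMB or RDP traffic before raising a high-priority alert.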

By combining data from multiple sources - network traffic, email patterns, and endpoint activity - AI systems can quickly identify and respond to anomalies such as phishing attempts or abnormal file modifications. For U.S. hospitals, where system downtime can directly impact patient care, this predictive capability is crucial. It enables incident response teams to act swiftly, preventing ransomware from spreading to vital systems like EHRs, imaging platforms, or medication dispensing tools.

Identifying Medical Device Security Vulnerabilities

The growing number of connected medical devices - ranging from infusion pumps and ventilators to imaging systems and patient monitors - has expanded the attack surface in healthcare. AI helps organizations manage this risk by automatically discovering, classifying, and monitoring these devices. It identifies details like manufacturer information, firmware versions, and known vulnerabilities (CVEs), while also mapping each device's normal communication behavior. When a device starts exhibiting unusual traffic patterns, connects to unexpected IP addresses, or runs outdated firmware with critical vulnerabilities, the AI system generates a prioritized alert.

These systems go a step further by calculating risk scores that combine exploitability metrics with clinical impact. They consider factors like the device's clinical importance, the severity of the patient conditions it supports, and its exposure on the network. This focuses mitigation efforts on the vulnerabilities that pose the greatest risk to patient safety. Continuous device monitoring serves both patient safety and regulatory requirements. For example, platforms like Censinet RiskOps™ centralize AI-detected vulnerabilities alongside third-party risk assessments. This helps organizations build coordinated remediation plans that protect patient care and align with FDA cybersecurity guidance.
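Below is a minimal sketch of a score that multiplies exploitability by clinical impact and network exposure. Several parts of it are illustrative assumptions, not a standard formula:

- The multiplicative form.
- The category weights.
- The 1.5x boost for vulnerabilities known to be exploited in the wild (for example, those listed in CISA's Known Exploited Vulnerabilities catalog).

```python
from dataclasses import dataclass

# Hypothetical weights: potential harm from a compromise, and how reachable the device is.
CLINICAL_IMPACT = {"life_support": 1.0, "therapy": 0.8, "diagnostic": 0.5, "administrative": 0.2}
EXPOSURE = {"internet": 1.0, "flat_network": 0.7, "segmented_vlan": 0.4, "isolated": 0.1}


@dataclass
class Device:
    name: str
    max_cvss: float        # highest CVSS base score among open CVEs (0-10)
    known_exploited: bool  # any open CVE known to be exploited in the wild
    clinical_role: str     # key into CLINICAL_IMPACT
    network_zone: str      # key into EXPOSURE


def device_risk(d: Device) -> float:
    """0-100 score: exploitability x clinical impact x exposure."""
    exploitability = d.max_cvss / 10.0
    if d.known_exploited:
        exploitability = min(1.0, exploitability * 1.5)
    return round(100 * exploitability * CLINICAL_IMPACT[d.clinical_role]
                 * EXPOSURE[d.network_zone], 1)


fleet = [
    Device("infusion pump", 9.8, True, "therapy", "flat_network"),
    Device("patient monitor", 6.5, False, "life_support", "segmented_vlan"),
    Device("registration kiosk", 9.8, True, "administrative", "segmented_vlan"),
]
for d in sorted(fleet, key=device_risk, reverse=True):
    print(f"{device_risk(d):5.1f}  {d.name}")
```

Multiplying rather than adding means a critical CVE on a segmented administrative device still ranks below a weaker flaw on a life-support monitor. This reflects the goal of spending effort where patient safety is at stake.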

These examples highlight how AI transforms complex data into actionable security insights, making it easier for healthcare organizations to integrate these insights into their broader risk management strategies.

Building and Operating AI Risk Prediction Systems

Data Integration and Feature Engineering

The foundation of effective AI risk prediction systems lies in unified data pipelines. These pipelines bring together diverse sources like EHR data (via HL7 v2/FHIR), access logs, network activity, device performance metrics, vulnerability scans, and asset inventories. All this information flows into a centralized SIEM, enabling near real-time threat detection through streaming architectures.[3][6]

Feature engineering plays a critical role by turning raw data into actionable risk signals. For example, features such as the share of a user's EHR accesses that fall outside their usual hours, or a sudden spike in failed logins, can indicate potential threats.[3][6] Adding context, such as department assignments, user roles, device importance, or PHI sensitivity, helps distinguish legitimate emergency actions from suspicious behavior. However, fragmented EHR systems and outdated devices often generate incomplete or noisy logs. Data quality controls, such as rejecting records with missing fields or unparseable timestamps, are therefore essential to keep the input data accurate and reliable.[3][9] This strong data foundation is key to building precise risk prediction models across healthcare systems.
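The sketch below makes this concrete. It turns raw log events into per-user features and applies a basic data-quality gate that drops records with missing fields or unparseable timestamps. The event dictionary layout and the fixed 07:00-19:00 "usual hours" window are hypothetical simplifications. A real pipeline would use each user's learned schedule and the organization's actual log schema.

```python
from collections import defaultdict
from datetime import datetime


def build_features(events: list[dict], usual_start: int = 7, usual_end: int = 19):
    """Aggregate raw events into per-user features; returns (features, dropped_count)."""
    agg = defaultdict(lambda: {"access": 0, "off_hours": 0, "failed": 0, "patients": set()})
    dropped = 0
    for e in events:
        # Data-quality gate: validate every field before touching the aggregates.
        try:
            user, kind = e["user"], e["type"]
            ts = datetime.fromisoformat(e["ts"])
            patient = e["patient"] if kind == "ehr_access" else None
        except (KeyError, ValueError):
            dropped += 1
            continue
        a = agg[user]
        if kind == "ehr_access":
            a["access"] += 1
            a["patients"].add(patient)
            if not (usual_start <= ts.hour < usual_end):
                a["off_hours"] += 1
        elif kind == "login_failed":
            a["failed"] += 1
    features = {
        user: {
            "ehr_accesses": a["access"],
            "off_hours_ratio": a["off_hours"] / a["access"] if a["access"] else 0.0,
            "distinct_patients": len(a["patients"]),
            "failed_logins": a["failed"],
        }
        for user, a in agg.items()
    }
    return features, dropped
```

Counting dropped records, rather than discarding them silently, also gives teams a simple signal when an upstream log source degrades.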

Model Training and Evaluation

Training AI models for risk prediction requires historical incident data, such as confirmed cases of unauthorized EHR access, past ransomware attacks, or validated device vulnerabilities. These historical events are paired with data from the hours or weeks leading up to the incidents.[3][6] True security breaches are rare compared with routine activity, so organizations rebalance the training data. Common approaches are undersampling benign activity or giving the rare incident class a larger weight during training. Many also rely on anomaly detection, which models typical behavior and flags deviations as potential risks.[3]
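The two rebalancing strategies can be sketched as follows. The weight formula `n_samples / (n_classes * count_c)` is the same heuristic scikit-learn uses for `class_weight="balanced"`. The 5-to-1 benign-to-incident ratio for undersampling is an arbitrary illustrative choice.

```python
import random
from collections import Counter


def balanced_class_weights(labels: list[int]) -> dict[int, float]:
    """weight_c = n_samples / (n_classes * count_c): rare classes get larger weights."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}


def undersample(rows: list, labels: list[int], ratio: int = 5, seed: int = 0):
    """Keep every incident (label 1) plus at most `ratio` benign rows per incident."""
    rng = random.Random(seed)  # seeded so the training set is reproducible
    pos = [r for r, y in zip(rows, labels) if y == 1]
    neg = [r for r, y in zip(rows, labels) if y == 0]
    kept = rng.sample(neg, min(len(neg), ratio * len(pos)))
    return pos + kept, [1] * len(pos) + [0] * len(kept)


# Hypothetical: 10 confirmed incidents among 1,000 labeled windows.
labels = [0] * 990 + [1] * 10
print(balanced_class_weights(labels))  # incident weight is 99x the benign weight
rows, y = undersample(list(range(1000)), labels)
print(len(rows), sum(y))
```

Rebalancing should be applied only to the training split, never to the evaluation data. Otherwise reported metrics will not reflect real-world base rates.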

Choosing the right models is equally important. Gradient-boosted trees and random forests are particularly effective for tabular data like access logs or device inventories. These models handle diverse features well and provide interpretable outputs, such as feature importance scores and SHAP values.[3][5] For time-series data, like patterns in user behavior or device communications, sequence models can capture complex trends while still offering insights into temporal risk factors.[2][3]

Evaluation metrics go beyond overall accuracy. Precision (how many flagged events are genuinely risky), recall or sensitivity (how many true threats are detected), and ROC-AUC or PR-AUC scores help assess performance. Because real incidents are rare, PR-AUC is usually the more informative of the two. Operational measures, such as the number of false alerts per analyst per day, are crucial for minimizing alert fatigue.[3][6] Healthcare systems have seen improved detection rates and fewer false alerts when transitioning from rule-based systems to AI-driven models.[6] Temporal validation, which trains on older data and tests on newer data, checks that models stay reliable as conditions change. Continuous monitoring is also needed to catch performance drift as hospital operations and threat patterns evolve.[3][7][5] Once validated, these models can be connected to security workflows, bridging the gap between detection and response.
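Here is a minimal sketch of temporal validation and the alert-centric metrics described above. It computes precision, recall, and false alerts per analyst per day from binary predictions. The record layout, dates, and staffing numbers are hypothetical. ROC-AUC and PR-AUC are left out; libraries such as scikit-learn provide them.

```python
from datetime import date


def temporal_split(records: list[dict], cutoff: date):
    """Train on everything before `cutoff`, test on everything from `cutoff` on.
    No shuffling, so the model is always evaluated on 'future' data."""
    train = [r for r in records if r["day"] < cutoff]
    test = [r for r in records if r["day"] >= cutoff]
    return train, test


def alert_metrics(y_true: list[int], y_pred: list[int], days: int, analysts: int) -> dict:
    """Precision, recall, and analyst-facing false-alert load from binary labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,  # flagged events that were real
        "recall": tp / (tp + fn) if tp + fn else 0.0,     # real threats that were flagged
        "false_alerts_per_analyst_day": fp / (days * analysts),
    }


records = [{"day": date(2024, m, 1)} for m in range(1, 13)]
train, test = temporal_split(records, cutoff=date(2024, 10, 1))
print(len(train), len(test))  # 9 months to train, 3 to test
print(alert_metrics([1, 0, 0, 1, 0, 0, 0, 1], [1, 1, 0, 0, 0, 1, 0, 1], days=1, analysts=2))
```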

Integrating AI with Security Operations

Once models are thoroughly evaluated, their outputs can be integrated directly into Security Operations Center (SOC) workflows. Through SIEM or SOAR platforms, AI-generated alerts arrive enriched with risk scores, contributing factors, and recommended next steps, such as locking accounts, isolating devices, or requiring additional authentication.[6][9] High-confidence alerts can automatically open incidents or trigger containment actions. Lower-confidence alerts go to human analysts along with detailed explanations of the risks involved.[6]
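The routing logic can be sketched as a small function that turns a scored alert into an enriched payload for a SIEM or SOAR playbook. The thresholds, action strings, and payload fields are hypothetical. The sketch does not call any real SIEM or SOAR API.

```python
from dataclasses import dataclass, field

# Hypothetical recommended containment step per entity type.
RECOMMENDED_ACTION = {
    "account": "lock account or require step-up MFA",
    "device": "isolate device from the network",
}


@dataclass
class Alert:
    entity: str
    entity_type: str            # "account" or "device"
    risk: float                 # model-estimated probability of a real incident, 0-1
    factors: list[str] = field(default_factory=list)  # top contributing features


def route(alert: Alert, auto: float = 0.9, review: float = 0.5) -> dict:
    """Build an enriched payload and choose a queue based on model confidence."""
    payload = {
        "entity": alert.entity,
        "risk": alert.risk,
        "factors": alert.factors,
        "recommended_action": RECOMMENDED_ACTION[alert.entity_type],
    }
    if alert.risk >= auto:
        payload["queue"] = "auto_contain"    # open incident and trigger containment
    elif alert.risk >= review:
        payload["queue"] = "analyst_review"  # a human decides, with factors attached
    else:
        payload["queue"] = "log_only"
    return payload


print(route(Alert("jdoe", "account", 0.62, ["off-hours access", "new workstation"])))
```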

Given the direct impact of cyber events on patient care, AI alerts must also align with clinical workflows and escalation protocols.[6][8] Initially, these alerts can operate in shadow mode, running alongside traditional rules and manual investigations. This allows healthcare systems to validate their reliability and fine-tune workflows before enabling automated controls.[3][9][5] Tools like Censinet RiskOps™ demonstrate how AI can centralize vulnerability detection, third-party risk assessments, and device inventories. By doing so, they help healthcare organizations coordinate remediation efforts that safeguard patient care while meeting regulatory requirements.[1]
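Shadow mode can be sketched as running the model next to the existing rules and recording, without acting on, where the two agree and disagree. The rule, the score field, and the 0.8 threshold are hypothetical. The disagreement list is what analysts would review before enabling automated controls.

```python
from collections import Counter
from typing import Callable


def shadow_compare(events: list[dict], rule_fn: Callable[[dict], bool],
                   model_fn: Callable[[dict], float], threshold: float = 0.8):
    """Rules still drive real alerts; model verdicts are only tallied for review."""
    labels = {(True, True): "both", (True, False): "rule_only",
              (False, True): "model_only", (False, False): "neither"}
    tally: Counter = Counter()
    disagreements = []
    for e in events:
        rule_hit = rule_fn(e)
        model_hit = model_fn(e) >= threshold
        outcome = labels[(rule_hit, model_hit)]
        tally[outcome] += 1
        if rule_hit != model_hit:
            disagreements.append((outcome, e))
    return tally, disagreements


events = [
    {"user": "a", "failed_logins": 7, "score": 0.91},
    {"user": "b", "failed_logins": 6, "score": 0.20},
    {"user": "c", "failed_logins": 0, "score": 0.85},
    {"user": "d", "failed_logins": 1, "score": 0.05},
]
tally, diffs = shadow_compare(
    events,
    rule_fn=lambda e: e["failed_logins"] >= 5,  # hypothetical legacy rule
    model_fn=lambda e: e["score"],              # stand-in for a model's risk score
)
print(dict(tally))
```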


How Censinet RiskOps™ Powers AI-Driven Risk Management

Censinet RiskOps™ for Automated Risk Assessments

Censinet RiskOps™ simplifies vendor and enterprise risk management by combining all relevant data into a cloud-based platform. Using AI, it classifies vendors, links them to critical systems, and prioritizes assessments based on factors like the volume of protected health information (PHI), integration depth, and past incidents. Tailored questionnaires align with U.S. healthcare standards - such as HIPAA, HITECH, HHS 405(d) HICP practices, and NIST CSF - and highlight control gaps by comparing responses to an up-to-date baseline.

This approach replaces outdated spreadsheet workflows, cutting assessment times from weeks to just days. Censinet AI™ leverages natural language processing to analyze documents like security questionnaires, SOC 2 reports, business associate agreements, and policy documents, automatically extracting control evidence. From there, machine learning and rules-based scoring convert this evidence into consistent cyber and compliance risk scores. The platform also benchmarks vendors against anonymized peer data - comparing, for example, EHR add-on apps to imaging vendors - to identify outliers.

"Censinet RiskOps allowed 3 FTEs to go back to their real jobs! Now we do a lot more risk assessments with only 2 FTEs required." - Terry Grogan, CISO, Tower Health

Censinet RiskOps™ connects a network of over 50,000 vendors and products across the healthcare sector, enabling shared due diligence. Once a vendor completes a detailed assessment on the platform, that same assessment can be reused by other healthcare organizations, eliminating duplicate efforts and boosting efficiency across the industry. These automated processes set the stage for proactive, forward-looking risk management.

Censinet AI for Predictive Risk Analysis

Censinet AI takes risk management a step further by predicting potential issues, such as data breaches, ransomware attacks, or device vulnerabilities, over specific timeframes. It generates predictive risk scores based on technical factors (like patching frequency, network exposure, and outdated operating systems), process maturity (such as incident response readiness, backup strategies, and MFA adoption), and business impact (including PHI volume, clinical dependency, and downtime costs in dollars).

When it comes to medical devices, the platform evaluates details like device type, connectivity, FDA recall records, and software bill of materials (SBOM) risk patterns. This helps organizations identify which devices need urgent attention, whether through isolation or remediation. Early-warning indicators - like a rising risk score for a vendor recently breached, delays in critical remediation, or high-risk services concentrated in one cloud provider - are flagged and translated into actionable priorities. For example, users might see lists like "top 50 vendors most likely to face ransomware attacks in the next 12 months" or "device models requiring segmentation or replacement within 6 months."
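The ranking and early-warning ideas can be illustrated with a simple sketch. This is not Censinet's scoring method. The 0-1 factor inputs, the weights, and the 10-point "rising score" trigger are hypothetical values. They show only how a weighted score can drive a top-N list and a trend alert.

```python
import heapq
from dataclasses import dataclass

# Hypothetical weights for the three factor groups (sum to 1).
WEIGHTS = {"technical": 0.4, "process": 0.3, "impact": 0.3}


@dataclass
class VendorProfile:
    name: str
    technical: float  # 0-1; worse patching, exposure, outdated OS -> higher
    process: float    # 0-1; weaker IR readiness, backups, MFA -> higher
    impact: float     # 0-1; more PHI, clinical dependency, downtime cost -> higher


def risk_score(v: VendorProfile) -> float:
    """Weighted 0-100 score across technical, process, and business-impact factors."""
    return 100 * sum(w * getattr(v, k) for k, w in WEIGHTS.items())


def top_n(vendors: list[VendorProfile], n: int) -> list[VendorProfile]:
    """The n highest-risk vendors, highest first."""
    return heapq.nlargest(n, vendors, key=risk_score)


def rising(previous: float, current: float, trigger: float = 10.0) -> bool:
    """Early-warning flag when a vendor's score jumps by `trigger` points or more."""
    return current - previous >= trigger


vendors = [
    VendorProfile("A", 0.9, 0.8, 0.9),
    VendorProfile("B", 0.2, 0.1, 0.3),
    VendorProfile("C", 0.6, 0.7, 0.2),
]
print([v.name for v in top_n(vendors, 2)])
```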

To ensure accuracy and align with regulations, human oversight is integrated into the process. Security and privacy analysts can review and adjust AI-generated scores, flag false positives or negatives, and approve or reject suggested remediations. These human interventions are logged and used to refine the AI models over time, ensuring better alignment with internal audits, regulatory inquiries, and board-level accountability.

Censinet's Impact on Healthcare Cybersecurity

With these predictive tools, healthcare organizations can focus their resources on addressing critical vulnerabilities. By identifying high-risk vendors, applications, and devices, Censinet helps prioritize protection efforts where patient care is most at stake. For instance, vendors managing EHR systems, medication workflows, or imaging technologies can be subjected to stricter controls, stronger network segmentation, and more frequent monitoring, reducing the risk of data loss or system downtime during an attack. Similarly, medical devices like life-support systems or networked infusion pumps can be prioritized for patching and segmentation, minimizing the chances of ransomware or lateral attacks disrupting essential therapies.

The result? Broader assessment coverage, clearer and more consistent risk scores for informed decision-making, and fewer serious incidents linked to third-party risks. Over time, this approach reduces the likelihood of extended downtime for critical systems and ensures cybersecurity and clinical engineering budgets are spent where they matter most - on the highest-risk assets.

"Not only did we get rid of spreadsheets, but we have that larger community [of hospitals] to partner and work with." - James Case, VP & CISO, Baptist Health

From a financial perspective, Censinet helps organizations avoid costs tied to breaches - like forensic investigations, legal settlements, and credit monitoring - while also reducing overtime costs during downtime events. The platform’s benchmarking data provides CISOs and CIOs with a clearer picture of what "good" looks like for specific service lines or vendor categories. This supports more informed board discussions, clearer risk appetite statements, and capital planning that aligns with the organization’s actual risk landscape.

Conclusion

AI-driven risk prediction is reshaping how healthcare organizations tackle cyber threats. By forecasting risks, it helps minimize cyber incidents, safeguard protected health information (PHI), and improve patient safety. U.S. healthcare systems are particularly vulnerable due to their reliance on intricate electronic health record (EHR) systems, interconnected devices, and their inability to endure prolonged downtime. These factors make them attractive targets for ransomware attacks and operational disruptions. AI's role is becoming increasingly critical across all stages of the cyber incident lifecycle.

AI enhances cyber risk management by offering continuous risk evaluations for users, systems, and vendors. It speeds up anomaly detection and can identify ransomware or malware patterns with greater accuracy and fewer false positives than traditional rule-based tools. After an incident, models can be retrained on the new data. This improves predictions about which assets or workflows are most likely to face future threats and strengthens overall security.

However, the success of AI risk prediction depends on how well it integrates into day-to-day operations. Effective implementation requires seamless data integration, clear governance structures, and close collaboration among security, IT, and clinical teams to ensure swift responses. Solutions like Censinet RiskOps™ illustrate this approach by applying AI to manage third-party and enterprise cyber risks. This platform enables healthcare organizations to streamline vendor risk assessments, measure cybersecurity readiness, and coordinate remediation efforts. By combining structured risk data with AI models, organizations can pinpoint high-risk vendors, clinical systems, and medical devices, prioritize mitigation strategies, and maintain ongoing risk monitoring. Such efforts not only support regulatory compliance but also enhance the protection of PHI and healthcare services.

Key Takeaways

AI's transformative potential in healthcare cybersecurity lies in its ability to unify data from EHRs, identity systems, networks, devices, and vendors. To succeed, predictive models must balance performance with clarity, ensuring they are interpretable and validated to maintain trust among clinicians and security teams. AI insights should seamlessly integrate into existing workflows, such as Security Operations Centers (SOCs), ticketing systems, and clinical escalation processes, enabling teams to act decisively without succumbing to alert fatigue.

Compliance with U.S. regulations - such as HIPAA, FTC guidelines for AI-enabled products, and emerging HHS and OMB recommendations - is essential. Organizations need robust governance frameworks, including AI oversight committees, model risk management protocols, and thorough evaluation processes. These measures ensure models are validated, monitored for bias, and used appropriately within defined clinical and security contexts. Security analysts, compliance officers, and clinicians must understand the rationale behind high-risk alerts for users, devices, or vendors, fostering both trust and accountability.

Healthcare leaders should focus on a few high-impact use cases - like detecting unauthorized EHR access, providing early ransomware warnings, or identifying high-risk vendors - where data availability and potential benefits are clear. Investing in data readiness and establishing governance structures early on will pave the way for broader AI adoption in the future.

FAQs

How does AI improve cybersecurity in healthcare?

AI plays a pivotal role in bolstering cybersecurity within the healthcare sector by analyzing risks in real time. This capability helps organizations pinpoint and address vulnerabilities across patient data, medical devices, and third-party systems. It also automates threat detection, prioritizes risks, and aids compliance efforts, allowing for quicker and more precise responses to potential threats.

One standout example is Censinet RiskOps™, which leverages AI to simplify risk assessments, enhance cybersecurity benchmarks, and promote collaborative risk management. By reducing manual tasks and increasing efficiency, this tool empowers healthcare organizations to safeguard sensitive information and protect critical systems more effectively.

What are the key machine learning methods for predicting risks in healthcare?

Machine learning has become an essential tool for identifying and predicting risks in healthcare. Common approaches fall into three groups:

- Supervised learning techniques such as decision trees, random forests, gradient boosting, support vector machines, and neural networks, which learn from labeled past incidents.
- Unsupervised methods such as isolation forests, autoencoders, and clustering, which flag deviations from normal behavior.
- Hybrid models that combine the two.

These methods are commonly applied to monitor access to patient data, safeguard medical devices, and detect potential system vulnerabilities.

With these tools in action, healthcare organizations can take a proactive stance on managing risks. This includes improving patient safety, protecting sensitive data, and optimizing operational processes - all of which contribute to better care and more secure systems.

What steps can healthcare organizations take to ensure AI complies with HIPAA regulations?

Healthcare organizations can keep AI use aligned with HIPAA in three main ways:

- Include AI systems, and the PHI they process, in regular security risk analyses.
- Sign business associate agreements with AI vendors that create, receive, maintain, or transmit PHI.
- Use risk management tools designed for the healthcare sector, which help protect patient data, maintain privacy, and surface potential security issues.

In addition, it's essential to invest in continuous staff training, enforce stringent data access restrictions, and carry out regular audits. These steps work together to safeguard sensitive information and ensure adherence to HIPAA regulations.