Clinical AI Bias Testing: How to Assess and Mitigate Algorithmic Risks in Healthcare

Post Summary

Clinical AI bias testing evaluates AI systems for disparities in performance across patient groups to ensure fairness, safety, and compliance in healthcare.

AI bias can lead to misdiagnoses, delayed treatments, and inequitable care, disproportionately affecting marginalized populations and eroding trust in AI systems.

Bias arises from underrepresented data, flawed algorithm designs, and human developer biases, often perpetuating existing healthcare disparities.

Best practices include auditing data quality, using fairness metrics, applying bias mitigation strategies across the AI lifecycle, and establishing governance controls.

Tools like SHAP and LIME help interpret predictions, while fairness metrics like demographic parity and equalized odds compare prediction and error rates across subgroups.

Trust is built by involving diverse stakeholders, increasing transparency, and continuously monitoring AI systems for bias throughout their lifecycle.

AI in healthcare is transforming clinical decision-making, but algorithmic bias poses serious risks to patient safety and equity. Here's how healthcare organizations can address it:

- Why It Matters: AI bias can lead to misdiagnoses, delayed treatments, and poor outcomes, disproportionately impacting marginalized communities.

- Key Causes: Bias stems from underrepresented data, flawed algorithm designs, and human developer biases.

- What’s Needed: U.S. healthcare organizations must identify and address bias to meet regulatory expectations and ensure fair care.

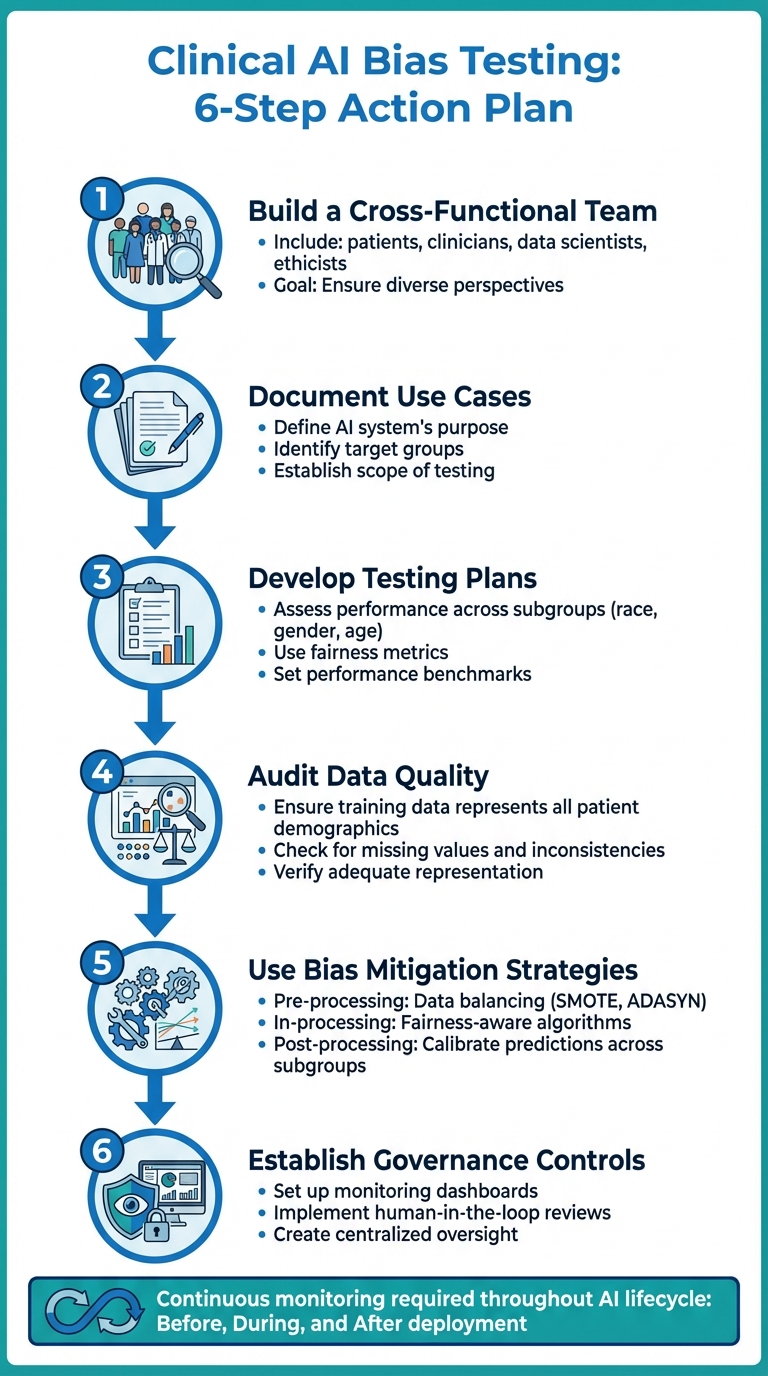

Action Plan:

- Build a Cross-Functional Team: Include patients, clinicians, data scientists, and ethicists to ensure diverse perspectives.

- Document Use Cases: Clearly define the AI system’s purpose, target groups, and scope of testing.

- Develop Testing Plans: Assess performance across subgroups (e.g., race, gender) using fairness metrics.

- Audit Data Quality: Ensure training data represents all patient demographics adequately.

- Use Bias Mitigation Strategies: Apply techniques before, during, and after model training to reduce disparities.

- Establish Governance Controls: Set up monitoring dashboards, human-in-the-loop reviews, and centralized oversight.

Continuous Monitoring:

Bias testing must occur throughout the AI lifecycle - before, during, and after deployment. Regular audits, real-time tracking, and detailed documentation are essential for maintaining compliance and improving outcomes.

Bottom Line: Tackling AI bias is critical for delivering safe, equitable healthcare and meeting regulatory standards. With the right strategies, organizations can reduce risks and build trust in AI systems.

6-Step Clinical AI Bias Testing Action Plan for Healthcare Organizations

How to Build a Clinical AI Bias Testing Program

Creating a robust bias testing program starts with assembling the right team and setting clear objectives. A well-structured approach ensures healthcare organizations can address technical, clinical, ethical, and regulatory factors effectively. With this groundwork in place, the steps for assessing bias become much more rigorous and actionable.

Assemble a Cross-Functional AI Risk Team

The key to a successful bias testing program lies in building a diverse team that brings multiple perspectives to the table. This team should include:

- Patients or patient advocates to prioritize safety and equitable outcomes.

- Clinicians to provide clinical insights and validate real-world scenarios.

- Hospital administrators to manage resources and institutional risk.

- IT staff and data engineers to ensure data infrastructure and quality.

- AI specialists and data scientists to develop and audit models.

- Ethicists and compliance professionals to oversee regulatory adherence.

- Behavioral scientists to identify societal biases and assess health equity impacts [4].

"By involving stakeholders throughout the evaluation process, this framework also begins to address the issue of trust in AI tools in healthcare. By including end users in the evaluation and by increasing transparency, this framework may increase the likelihood that users will trust the tool as they understand the limitations and steps taken to ensure the tool's reliability." - npj Digital Medicine [4]

Mapping out stakeholder roles is crucial to understanding how each team member contributes and identifying potential organizational challenges. To foster collaboration, regular workshops and training sessions can help close knowledge gaps and build a shared understanding of AI bias and testing methods.

Document AI Use Cases and Testing Scope

Before diving into testing, clearly document the AI system's purpose, expected outcomes, and key stakeholders. This step ensures that the testing process is grounded in a well-defined problem statement [5].

Take a close look at your data sets to confirm that they appropriately represent diverse patient populations. Your documentation should outline the AI tool’s intended use, target groups, and role in clinical decision-making. This clarity helps establish boundaries for bias testing while integrating technical, clinical, ethical, and regulatory considerations throughout the AI’s lifecycle [5].

Develop a Bias Testing Plan

A solid bias testing plan begins with identifying the patient subgroups to compare, defined by protected or equity-relevant attributes such as race, ethnicity, age, gender, socioeconomic status, and geographic location [4].

Next, select performance and fairness metrics that align with your clinical goals. Address data quality concerns to ensure all relevant subgroups are adequately represented. Define risk tolerance levels and set clear performance benchmarks for each patient group. Finally, establish a process for interpreting results, deciding who will review them, and outlining the steps to take if bias is found.

Methods and Metrics for Testing Clinical AI Bias

Once your testing plan is ready, the next step is to apply targeted methods to identify and address bias. This means analyzing your data, evaluating how your model performs across various patient groups, and digging into the reasons behind specific predictions. These steps are crucial to uncover disparities that could affect patient care. Below, we’ll explore how to audit data, assess fairness, and interpret outcomes effectively.

Audit Data Representation and Quality

Start by reviewing the demographic makeup of your training data, focusing on factors like race, ethnicity, age, gender, socioeconomic status, and geographic location. It’s important to ensure that the data represents all patient populations adequately. Often, imbalances in data arise during the pre-processing stage - long before the model is trained - and these can lead to poorer performance for underrepresented groups [3][7].

Use visualization tools to audit your training data for both representation and quality. These tools can help spot missing values, inconsistent coding, or groups that are undersampled. Additionally, structured evaluation frameworks can help determine if your data covers the full range of patients who will interact with the AI system. Pay close attention to protected characteristics and ensure that data collection methods do not inadvertently exclude vulnerable populations.
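To make the audit concrete, here is a minimal Python sketch that summarizes subgroup counts, shares, and per-field missing-value rates from a list of patient records. The record layout, the field names (`hba1c`, `bmi`), and the reference population shares are all hypothetical. In practice the reference shares would describe the population the model is meant to serve, and a visualization tool would chart the resulting table.

```python
from collections import Counter, defaultdict

def audit_representation(records, group_key, fields, reference_shares=None):
    """Per-subgroup counts, shares, missing-value rates, and (optionally)
    representation relative to a reference population.

    records: list of dicts, one per patient; a missing value is None.
    reference_shares: optional {group: expected share of the population}.
    """
    counts = Counter(r.get(group_key) for r in records)
    missing = defaultdict(Counter)
    for r in records:
        for f in fields:
            if r.get(f) is None:
                missing[r.get(group_key)][f] += 1
    total = len(records)
    report = {}
    for group, n in counts.items():
        row = {
            "n": n,
            "share": n / total,
            "missing_rate": {f: missing[group][f] / n for f in fields},
        }
        if reference_shares and group in reference_shares:
            # Below 1.0: the group is undersampled relative to the reference.
            row["representation_ratio"] = row["share"] / reference_shares[group]
        report[group] = row
    return report

# Hypothetical records; "group" stands in for any protected attribute.
records = [
    {"group": "A", "hba1c": 6.1, "bmi": 27.0},
    {"group": "A", "hba1c": 7.2, "bmi": None},
    {"group": "A", "hba1c": 5.9, "bmi": 31.5},
    {"group": "B", "hba1c": None, "bmi": 24.0},
]
report = audit_representation(records, "group", ["hba1c", "bmi"], {"A": 0.5, "B": 0.5})
print(report["B"])
```

In this toy data, group B is both undersampled (half its reference share) and missing every lab value, which is exactly the combination that tends to produce poorer performance for that group.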

Measure Model Performance and Fairness

Metrics like overall accuracy or AUC can hide disparities, so it’s essential to break down performance metrics - such as sensitivity, specificity, and predictive values - by subgroup [8][9].
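A minimal sketch of that breakdown, assuming binary labels and binary predictions at a fixed decision threshold. In the toy data below the pooled accuracy is 7 out of 8, which hides that group B's sensitivity is half of group A's.

```python
from collections import defaultdict

def _ratio(num, den):
    # Return NaN rather than crash when a subgroup has no cases in a cell.
    return num / den if den else float("nan")

def subgroup_metrics(y_true, y_pred, groups):
    """Sensitivity, specificity, PPV, and NPV per subgroup (binary 0/1 inputs)."""
    cells = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            cells[g]["tp" if p == 1 else "fn"] += 1
        else:
            cells[g]["fp" if p == 1 else "tn"] += 1
    return {
        g: {
            "n": sum(c.values()),
            "sensitivity": _ratio(c["tp"], c["tp"] + c["fn"]),
            "specificity": _ratio(c["tn"], c["tn"] + c["fp"]),
            "ppv": _ratio(c["tp"], c["tp"] + c["fp"]),
            "npv": _ratio(c["tn"], c["tn"] + c["fn"]),
        }
        for g, c in cells.items()
    }

y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
metrics = subgroup_metrics(y_true, y_pred, groups)
print(metrics["A"]["sensitivity"], metrics["B"]["sensitivity"])  # -> 1.0 0.5
```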

Choose fairness metrics that align with your clinical goals. Group fairness metrics compare outcomes across groups, for example whether positive prediction rates (demographic parity) or true and false positive rates (equalized odds) are similar. Individual fairness metrics check that similar patients receive similar predictions, often using similarity-based or counterfactual analysis. Distribution fairness metrics assess how outcomes and rewards are distributed across populations [8][9]. Keep in mind that optimizing one type of fairness may worsen another (for example, forcing equal positive prediction rates on groups with different underlying disease prevalence changes their error rates), so understanding these tradeoffs is key.
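Demographic parity and equalized odds can both be computed from simple per-group rates. This sketch reports the largest between-group difference for each metric. Reporting the largest gap, rather than a ratio, is one common convention and is assumed here. Libraries such as Fairlearn offer maintained implementations of these metrics.

```python
def _rate(values):
    return sum(values) / len(values) if values else float("nan")

def group_rates(y_true, y_pred, groups):
    """Selection rate, TPR, and FPR per group (binary 0/1 inputs).
    Assumes every group has both positive and negative labels."""
    buckets = {}
    for t, p, g in zip(y_true, y_pred, groups):
        b = buckets.setdefault(g, {"all": [], "pos": [], "neg": []})
        b["all"].append(p)
        b["pos" if t == 1 else "neg"].append(p)
    return {
        g: {
            "selection_rate": _rate(b["all"]),  # P(pred=1 | group)
            "tpr": _rate(b["pos"]),             # P(pred=1 | label=1, group)
            "fpr": _rate(b["neg"]),             # P(pred=1 | label=0, group)
        }
        for g, b in buckets.items()
    }

def _max_gap(rates, key):
    values = [r[key] for r in rates.values()]
    return max(values) - min(values)

def fairness_summary(y_true, y_pred, groups):
    rates = group_rates(y_true, y_pred, groups)
    return {
        "demographic_parity_difference": _max_gap(rates, "selection_rate"),
        # Equalized odds needs both TPR and FPR to match; report the worse gap.
        "equalized_odds_difference": max(_max_gap(rates, "tpr"), _max_gap(rates, "fpr")),
    }

y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 1, 0]
groups = ["A"] * 4 + ["B"] * 4
summary = fairness_summary(y_true, y_pred, groups)
# -> {'demographic_parity_difference': 0.25, 'equalized_odds_difference': 0.5}
```

In this toy example the two metrics disagree in size (0.25 versus 0.5), which is the kind of tradeoff your metric choice has to account for.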

Use Interpretability and Error Analysis Tools

Interpretability tools can shed light on why your model makes certain predictions and whether those reasons are clinically sound. Tools like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are particularly helpful. They can pinpoint which features influence predictions for individual patients or specific subgroups.
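SHAP is built on Shapley values from game theory. For a model with only a handful of features, these values can be computed exactly by enumerating every feature coalition, which makes the idea easy to inspect. The sketch below assumes that an "absent" feature takes a fixed baseline value (one of several conventions; the `shap` library supports others) and then compares mean absolute attributions across subgroups. The two-feature risk score and its feature meanings are hypothetical, and because the cost grows as 2 to the number of features, real models should use the `shap` package instead.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, x, baseline):
    """Exact Shapley attributions for one patient's feature vector x."""
    n = len(x)

    def value(coalition):
        # Features outside the coalition take their baseline value.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

def mean_abs_attribution(predict, rows, groups, baseline):
    """Average |Shapley value| per feature, computed separately for each group."""
    sums, counts = {}, {}
    for x, g in zip(rows, groups):
        acc = sums.setdefault(g, [0.0] * len(x))
        for i, v in enumerate(shapley_values(predict, x, baseline)):
            acc[i] += abs(v)
        counts[g] = counts.get(g, 0) + 1
    return {g: [v / counts[g] for v in acc] for g, acc in sums.items()}

# Hypothetical risk score over [lab_value, zip_deprivation_index].
def risk(z):
    return 0.5 * z[0] + 2.0 * z[1]

baseline = [0.0, 0.0]  # assumed reference patient
phi = shapley_values(risk, [2.0, 1.0], baseline)  # -> [1.0, 2.0]
by_group = mean_abs_attribution(risk, [[2.0, 0.1], [1.0, 1.5]], ["A", "B"], baseline)
```

Here the hypothetical deprivation index drives most of group B's score, which is the kind of pattern that warrants clinical review.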

For example, if your model heavily relies on zip code as a predictor, it might be using socioeconomic status as a stand-in for health risks rather than identifying actual clinical factors. Error analysis should focus on identifying where and why the model fails for particular groups. This helps differentiate between acceptable clinical variation and concerning biases. Conducting this type of analysis during the post-processing stage is critical for catching issues that might arise in real-world use [3][7].
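One quick way to test the zip code concern is to ask how well the feature alone predicts group membership. The sketch below uses a simple majority-vote check, assumed here for illustration rather than a standard named test, and compares that accuracy with always guessing the most common group. A large gap suggests the feature can act as a proxy. The zip code labels are placeholders.

```python
from collections import Counter, defaultdict

def proxy_strength(feature_values, groups):
    """Return (proxy_accuracy, baseline_accuracy).

    proxy_accuracy: share of patients whose group equals the most common group
    among patients with the same feature value. baseline_accuracy: share in the
    overall most common group. This in-sample estimate is optimistic when a
    feature has many rare values (e.g. sparsely populated zip codes).
    """
    by_value = defaultdict(Counter)
    for v, g in zip(feature_values, groups):
        by_value[v][g] += 1
    n = len(groups)
    proxy_acc = sum(c.most_common(1)[0][1] for c in by_value.values()) / n
    baseline_acc = Counter(groups).most_common(1)[0][1] / n
    return proxy_acc, baseline_acc

zips = ["Z1", "Z1", "Z1", "Z2", "Z2", "Z2"]  # placeholder zip codes
groups = ["A", "A", "A", "B", "B", "A"]
proxy_acc, baseline_acc = proxy_strength(zips, groups)
print(round(proxy_acc, 3), round(baseline_acc, 3))
```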

How to Reduce Algorithmic Bias and Establish Governance Controls

Tackling algorithmic bias and setting up governance controls requires a multi-pronged approach. Building on the earlier bias testing framework, this section covers deliberate interventions at each stage of the machine learning (ML) pipeline, practical controls in clinical workflows, and a governance framework that ties bias mitigation together. These steps link technical development with actionable measures in real-world clinical settings.

Apply Bias Mitigation Strategies Across the ML Pipeline

Reducing bias demands specific strategies tailored to different phases of the ML process - before, during, and after model training.

Pre-processing strategies focus on preparing your data before it enters the model:

- Use data balancing techniques like SMOTE or ADASYN to address underrepresented groups.

- Handle missing data more effectively with robust imputation methods.

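- Reconsider race-based correction factors. Some approaches replace them with socioeconomic deprivation measures, which may be derived from zip codes, though such substitutes should themselves be checked for acting as proxies for race [3] [11].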

- Capture often-overlooked factors like social determinants of health by using standardized questionnaires or natural language processing to analyze electronic health records [3].
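The core of SMOTE is simple: each synthetic row is placed at a random point on the line segment between a minority row and one of its nearest minority neighbors. The sketch below implements only that interpolation step, using squared Euclidean distance on numeric features. SMOTE was designed for class imbalance, so applying it to an underrepresented demographic group is an assumption to validate. For production work, the `imbalanced-learn` package provides maintained `SMOTE` and `ADASYN` classes. Synthetic patients are not real patients, so evaluate only on real held-out data.

```python
import random

def smote(minority, n_new, k=5, seed=0):
    """Create n_new synthetic rows by interpolating between a sampled
    minority row and one of its k nearest minority neighbors."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority rows")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)

    def sq_dist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    # Precompute the k nearest neighbors (within the minority set) of each row.
    neighbors = []
    for i, row in enumerate(minority):
        others = [j for j in range(len(minority)) if j != i]
        others.sort(key=lambda j: sq_dist(row, minority[j]))
        neighbors.append(others[:k])

    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        j = rng.choice(neighbors[i])
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([a + gap * (b - a) for a, b in zip(minority[i], minority[j])])
    return synthetic

minority = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
new_rows = smote(minority, n_new=10, k=2)
```

Because every synthetic row lies between two real minority rows, the method fills in the minority region rather than duplicating patients, but it cannot add information that the original rows lack.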

In-processing strategies target the training phase of the model:

- Implement fairness-aware algorithms that include constraints to reduce reliance on non-clinical features, such as adversarial learning or loss-based methods [7] [11] [12].

- Use feature disentanglement techniques to focus on genuine disease characteristics while minimizing dependence on shortcuts like data source or demographic proxies [12].

- For imaging models, apply methods like histogram equalization and lung masking to address dataset inconsistencies [12].
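As an illustration of a loss-based method, the sketch below adds a demographic parity penalty to logistic regression. The training loss is the usual mean cross-entropy plus `lam` times the squared difference in mean predicted risk between two groups, minimized by plain gradient descent. This is one simple penalty chosen for illustration, not the specific method from the cited work; the synthetic data, feature meanings, and `lam` values are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, X):
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) for x in X]

def parity_gap(p, groups):
    """Mean predicted risk in group 1 minus mean predicted risk in group 0."""
    g1 = [pi for pi, g in zip(p, groups) if g == 1]
    g0 = [pi for pi, g in zip(p, groups) if g == 0]
    return sum(g1) / len(g1) - sum(g0) / len(g0)

def train_fair_logreg(X, y, groups, lam=0.0, lr=0.5, epochs=800):
    n, d = len(X), len(X[0])
    n1 = sum(1 for g in groups if g == 1)
    n0 = n - n1
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        p = predict_proba(w, b, X)
        gap = parity_gap(p, groups)
        gw, gb = [0.0] * d, 0.0
        for xi, yi, gi, pi in zip(X, y, groups, p):
            r = (pi - yi) / n                      # d(mean BCE)/d(logit_i)
            s = pi * (1.0 - pi)
            dgap = s / n1 if gi == 1 else -s / n0  # d(gap)/d(logit_i)
            r += 2.0 * lam * gap * dgap            # d(lam * gap**2)/d(logit_i)
            for j in range(d):
                gw[j] += r * xi[j]
            gb += r
        w = [wj - lr * gj for wj, gj in zip(w, gw)]
        b -= lr * gb
    return w, b

# Synthetic data: one clinical signal and one feature correlated with group.
rng = random.Random(0)
X, y, groups = [], [], []
for i in range(200):
    g = i % 2
    x_clin = rng.gauss(0.0, 1.0)
    x_proxy = g + rng.gauss(0.0, 0.5)
    X.append([x_clin, x_proxy])
    groups.append(g)
    y.append(1 if x_clin + g + rng.gauss(0.0, 1.0) > 0.5 else 0)

w0, b0 = train_fair_logreg(X, y, groups, lam=0.0)
w5, b5 = train_fair_logreg(X, y, groups, lam=5.0)
gap_plain = parity_gap(predict_proba(w0, b0, X), groups)
gap_fair = parity_gap(predict_proba(w5, b5, X), groups)
print(round(gap_plain, 3), round(gap_fair, 3))
```

Raising `lam` shrinks the gap at some cost in fit to the labels. Whether demographic parity is the right target depends on the clinical question; when true prevalence differs between groups, an error-rate criterion such as equalized odds may be more appropriate.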

Post-processing strategies come into play after training and during deployment:

- Calibrate predictions across subgroups to ensure consistent performance for different patient populations.

- Validate models rigorously at diverse clinical sites to test for external reliability.

- Set up real-time monitoring systems to detect and address emerging biases in everyday use [3] [10] [13].

- Define performance thresholds for acceptable differences and use automated tools to flag deviations that exceed these limits [13].
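Subgroup calibration and threshold checks can be combined in a small report. This sketch computes an observed-to-expected (O/E) ratio per group, meaning observed events divided by the sum of predicted risks, and flags groups whose ratio is undefined or falls outside a tolerance band. The 0.2 tolerance is a hypothetical placeholder for whatever limit your testing plan defines.

```python
import math

def observed_to_expected(y_true, y_prob, groups):
    """Per-group O/E ratio. 1.0 means average predicted risk matches the observed
    event rate; above 1.0 the model underestimates risk, below 1.0 it overestimates."""
    totals = {}
    for t, p, g in zip(y_true, y_prob, groups):
        obs, exp = totals.get(g, (0, 0.0))
        totals[g] = (obs + t, exp + p)
    return {g: (obs / exp if exp > 0 else math.nan) for g, (obs, exp) in totals.items()}

def flag_miscalibrated(oe_ratios, tolerance=0.2):
    """Groups whose O/E ratio is undefined or differs from 1.0 by more than tolerance."""
    return sorted(g for g, r in oe_ratios.items()
                  if math.isnan(r) or abs(r - 1.0) > tolerance)

y_true = [1, 0, 0, 0, 1, 1, 0, 0]
y_prob = [0.3, 0.2, 0.3, 0.2, 0.2, 0.2, 0.3, 0.3]
groups = ["A"] * 4 + ["B"] * 4
ratios = observed_to_expected(y_true, y_prob, groups)
flags = flag_miscalibrated(ratios, tolerance=0.2)  # -> ['B']
```

Group B has twice as many events as the model predicts, so its risk is underestimated and it is flagged for review.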

These technical adjustments provide the foundation for governance structures, ensuring bias mitigation efforts are carried through to clinical applications.

Implement Clinical Workflow Controls

To integrate bias mitigation into clinical settings, add specific controls to workflows:

- Human-in-the-loop reviews: For high-stakes decisions, have clinicians review and confirm AI recommendations, particularly for diagnoses or treatment plans that could significantly impact patient outcomes.

- Automation guardrails: Address deployment challenges like automation bias (over-reliance on AI), feedback loop bias (AI reinforcing its own inaccuracies), and dismissal bias (ignoring alerts due to fatigue) [13]. Design interfaces to present AI outputs as decision aids, not directives, and adjust how recommendations are displayed while limiting alert frequency to maintain clinician focus.

- Monitoring dashboards: Use real-time dashboards to track outcomes across different patient subgroups. Include metrics like prediction accuracy, false positive/negative rates, and clinical outcomes broken down by factors such as race, ethnicity, age, gender, and socioeconomic status. This allows clinical teams to quickly identify and address disparities.
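Behind a monitoring dashboard, a sliding window of recent labeled cases per subgroup is a simple data structure for tracking error rates. The sketch below tracks the false negative rate and skips groups with too few confirmed positives to estimate it reliably. The class name, window size, and thresholds are hypothetical. The sketch also assumes that ground-truth outcomes eventually arrive for deployed predictions, which in practice often happens with a delay.

```python
from collections import defaultdict, deque

class SubgroupMonitor:
    """Sliding-window false negative rate (FNR) per subgroup."""

    def __init__(self, window=500, max_fnr=0.2, min_positives=20):
        self.max_fnr = max_fnr
        self.min_positives = min_positives
        # Each subgroup keeps only its most recent `window` (label, prediction) pairs.
        self.history = defaultdict(lambda: deque(maxlen=window))

    def record(self, group, y_true, y_pred):
        self.history[group].append((y_true, y_pred))

    def fnr(self, group):
        preds_on_positives = [p for t, p in self.history.get(group, ()) if t == 1]
        if len(preds_on_positives) < self.min_positives:
            return None  # too few confirmed positives for a stable estimate
        misses = sum(1 for p in preds_on_positives if p == 0)
        return misses / len(preds_on_positives)

    def alerts(self):
        """(group, fnr) pairs whose FNR exceeds the configured maximum."""
        flagged = []
        for group in self.history:
            rate = self.fnr(group)
            if rate is not None and rate > self.max_fnr:
                flagged.append((group, rate))
        return sorted(flagged)

monitor = SubgroupMonitor(window=100, max_fnr=0.2, min_positives=5)
for _ in range(10):
    monitor.record("A", 1, 1)
for i in range(10):
    monitor.record("B", 1, 1 if i < 6 else 0)
print(monitor.alerts())  # -> [('B', 0.4)]
```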

Integrate Bias Mitigation into AI Governance

Bias mitigation should be a core part of your organization's AI governance framework, not a standalone effort. Document all mitigation decisions and track them using centralized AI risk oversight tools. This not only ensures accountability but also enables your organization to learn from both successes and challenges.

An effective governance framework aligns with organizational ethics and includes diverse perspectives. Your AI governance committee should feature a mix of clinicians from various specialties, data scientists, patient advocates, and community representatives from groups most affected by potential bias [10] [13]. This diversity helps uncover systemic biases that might otherwise go unnoticed.

Centralized platforms for AI risk management, such as Censinet RiskOps, can simplify the process. These tools allow organizations to track AI models throughout their lifecycle - documenting bias testing, managing mitigation strategies, and maintaining audit trails for regulatory compliance. This approach ensures that bias mitigation becomes a seamless part of your organization's overall AI risk management strategy, connecting technical processes, clinical workflow adjustments, and governance oversight.


How to Maintain Continuous Bias Testing in U.S. Healthcare

Bias testing isn't a one-and-done task. As clinical practices evolve and patient demographics shift, AI systems in healthcare can experience performance changes over time. This makes continuous monitoring a must to ensure fairness and accuracy. To meet U.S. regulatory expectations, healthcare organizations should weave bias testing into their everyday operations, keeping pace with these changes and ensuring their AI tools remain reliable [16][4][3].

Test for Bias Throughout the AI Lifecycle

Bias testing needs to be part of every major stage in your AI system's lifecycle: before deployment, during deployment, and after deployment. Start with thorough bias assessments during pre-deployment validation, continue with real-time monitoring once the system is live, and follow up with post-market surveillance to catch and address any performance issues. If any group's outcomes fall below acceptable benchmarks, immediate reviews should be triggered. Aligning your testing schedule with the latest regulatory guidelines ensures your AI tools stay compliant and clinically relevant [17].
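A review trigger can combine a subgroup performance benchmark with a check for demographic shift. The sketch uses the population stability index (PSI), a drift measure borrowed from credit-risk modeling and swapped in here as an assumption, to compare the subgroup mix seen in deployment with the validation data. The 0.25 cutoff is a commonly cited rule of thumb and the sensitivity benchmark is hypothetical; set both in your testing plan.

```python
import math

def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI between two categorical distributions given as {category: count}."""
    e_total = sum(expected_counts.values())
    a_total = sum(actual_counts.values())
    psi = 0.0
    for cat in set(expected_counts) | set(actual_counts):
        # Floor tiny shares at eps so empty categories don't divide by zero.
        e = max(expected_counts.get(cat, 0) / e_total, eps)
        a = max(actual_counts.get(cat, 0) / a_total, eps)
        psi += (a - e) * math.log(a / e)
    return psi

def review_triggers(psi, subgroup_sensitivity, min_sensitivity, psi_threshold=0.25):
    """Return human-readable reasons to open a review (empty list means none)."""
    reasons = []
    if psi > psi_threshold:
        reasons.append(f"subgroup mix shifted (PSI={psi:.2f})")
    for group, sens in sorted(subgroup_sensitivity.items()):
        if sens < min_sensitivity:
            reasons.append(f"sensitivity for {group} is {sens:.2f}, below {min_sensitivity:.2f}")
    return reasons

validation_mix = {"A": 500, "B": 500}
psi_same = population_stability_index(validation_mix, {"A": 250, "B": 250})
psi_shift = population_stability_index(validation_mix, {"A": 800, "B": 200})
reasons = review_triggers(psi_shift, {"A": 0.90, "B": 0.70}, min_sensitivity=0.80)
for r in reasons:
    print(r)
```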

Add Bias Testing to Risk Management Programs

Bias testing isn't just about fairness - it's also a critical part of managing risks. By including AI bias assessments in your risk management strategies, you can protect patient safety, ensure operational efficiency, and stay compliant with regulations [14]. Treat bias testing as you would other key risks, like cybersecurity threats or patient safety incidents. Centralizing AI oversight within your risk management framework can provide a clearer, unified view of potential vulnerabilities.

Follow Documentation and Reporting Standards

Proper documentation isn't just about compliance - it's a tool for ongoing improvement. Maintain detailed, dated records of your bias testing efforts, using a consistent date format such as MM/DD/YYYY. Records should include methodologies, subgroup performance data, disparities identified, corrective actions taken, and metadata about the datasets used [1][5][6].
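One way to keep these records consistent is a fixed schema serialized to JSON for a shared repository. The field names below mirror the items listed above but are otherwise hypothetical, as are the model name and all values; the date uses the MM/DD/YYYY format.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date

@dataclass
class BiasTestRecord:
    model_name: str
    model_version: str
    test_date: str                  # MM/DD/YYYY
    methodology: str
    dataset_metadata: dict
    subgroup_performance: dict      # {group: {metric_name: value}}
    disparities_identified: list = field(default_factory=list)
    corrective_actions: list = field(default_factory=list)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)

record = BiasTestRecord(
    model_name="example-risk-model",  # hypothetical
    model_version="1.0.0",
    test_date=date(2024, 3, 5).strftime("%m/%d/%Y"),
    methodology="Subgroup sensitivity and specificity on a held-out validation set",
    dataset_metadata={"source": "hypothetical EHR extract", "rows": 12000},
    subgroup_performance={"A": {"sensitivity": 0.86}, "B": {"sensitivity": 0.74}},
    disparities_identified=["Sensitivity for group B is 0.12 lower than for group A"],
    corrective_actions=["Retrain with rebalanced data; re-test before next release"],
)
print(record.to_json())
```

A structured record like this is easier to diff between test runs and to hand to auditors than free-text notes.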

With regulatory bodies like the FDA emphasizing fairness, equity, and transparency in healthcare AI, it's vital to create accessible repositories for your bias testing records. These should be available to clinical teams, compliance officers, and auditors. Regular audits can help assess AI performance across diverse patient groups, and the insights gained should drive quality improvement initiatives. This cycle of auditing, reporting, and refining ensures your AI systems continue to meet both clinical and regulatory expectations throughout their lifecycle [15].

Conclusion

Clinical AI bias poses a serious risk to patient safety, care outcomes, and regulatory compliance throughout the entire AI lifecycle. To address this, healthcare organizations must adopt a holistic approach that blends technical precision, ethical oversight, and well-defined governance structures. With regulators like the FDA, and regulations like the EU's AI Act, stepping up demands for transparency, fairness, and accountability in AI-powered healthcare tools, such a framework is no longer optional - it’s essential [14].

To tackle bias effectively, healthcare organizations should integrate bias testing into their everyday workflows. This means rigorously evaluating AI systems across diverse patient populations, prioritizing explainable AI models, and assembling cross-disciplinary teams that include clinicians, data scientists, ethicists, and compliance experts [14][2]. These steps highlight the importance of strong governance measures.

"Identifying bias is not just a technical challenge. It is a governance challenge." - Lumenova AI [14]

Ongoing oversight is critical to ensure AI systems remain both fair and clinically effective. Tools like Censinet RiskOps™ streamline AI governance by delivering real-time risk dashboards and immediately notifying key stakeholders about critical findings. This allows healthcare leaders to scale bias testing while maintaining the necessary human oversight.

Governance in healthcare AI isn’t just a best practice - it’s a strategic necessity [14]. By embedding bias detection, mitigation, and continuous monitoring into their processes, organizations can ensure equitable care, meet regulatory standards, and build trust in AI systems. The tools and frameworks are already available; now it’s about taking action to make sure algorithms serve all patients fairly and effectively.

FAQs

What steps can healthcare organizations take to reduce bias in their AI systems?

Healthcare organizations can work toward minimizing bias in their AI systems by emphasizing a few critical strategies:

- Incorporate diverse datasets: Use data that represents a broad spectrum of patient demographics to ensure the AI reflects the diversity of real-world populations.

- Regularly assess for bias: Implement fairness checks using statistical tools to identify and address any imbalances in the AI's decision-making processes.

- Collaborate across disciplines: Bring together teams of clinicians, data scientists, and ethicists to uncover and mitigate hidden biases in algorithms.

- Validate in real-world settings: Test AI systems thoroughly in clinical environments to ensure they perform consistently and fairly across various patient groups.

Focusing on these steps can lead to more equitable AI systems, better clinical decisions, and improved outcomes for all patients.

How can AI bias be identified and managed in healthcare systems?

AI bias in healthcare can often be spotted using a few key methods. Counterfactual analysis checks whether a model's output stays the same when only a patient's protected attribute, such as race or sex, is changed. Statistical tools help uncover disparities, and evaluating model performance across subgroups can reveal hidden biases. Gathering diverse, representative datasets and keeping models interpretable are also crucial.
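A basic counterfactual check can be scripted as an attribute flip: change only the protected attribute and see whether the prediction moves. This is a simplification; formal counterfactual fairness also propagates the change to features causally downstream of the attribute, which requires a causal model not shown here. The risk model, its coefficients, and the tolerance are hypothetical.

```python
def counterfactual_flip_test(predict, patients, attr, values, tolerance=0.01):
    """List (patient_id, swapped_value, score_change) where swapping only
    `attr` moves the prediction by more than `tolerance`."""
    flagged = []
    for pid, features in patients.items():
        base = predict(features)
        for v in values:
            if v == features[attr]:
                continue
            altered = {**features, attr: v}  # every other feature held fixed
            delta = predict(altered) - base
            if abs(delta) > tolerance:
                flagged.append((pid, v, delta))
    return flagged

def risk_model(f):
    # Hypothetical model that uses sex directly as an input.
    return 0.1 + 0.002 * f["age"] + (0.05 if f["sex"] == "F" else 0.0)

patients = {"p1": {"age": 50, "sex": "M"}, "p2": {"age": 70, "sex": "F"}}
flagged = counterfactual_flip_test(risk_model, patients, "sex", ["F", "M"])
for pid, value, delta in flagged:
    print(pid, value, round(delta, 3))
```

A flagged change is not automatically bias, since some attributes carry genuine clinical signal; the point is to surface those dependencies so clinicians can judge whether they are justified.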

Addressing bias requires a thoughtful approach. Using inclusive data, designing models with care, and testing them rigorously across various populations are essential. Transparency is non-negotiable, as is working closely with stakeholders. And let’s not forget the importance of thorough testing, including clinical trials, to make sure AI tools are fair and reliable in healthcare settings.

Why is it important to continuously monitor AI systems in healthcare?

Continuous monitoring plays a key role in keeping healthcare AI systems accurate, fair, and effective over time. It’s essential for spotting and addressing problems like bias drift, declining performance, or unintended consequences - issues that could jeopardize patient safety or the quality of care.

Healthcare settings and societal expectations are always changing, and AI systems must keep pace. Ongoing monitoring ensures these algorithms stay aligned with up-to-date medical practices, ethical guidelines, and patient priorities. This not only helps deliver better care but also builds trust in AI-powered healthcare solutions.


Key Points:

What is clinical AI bias testing?

Definition: Clinical AI bias testing is the process of evaluating AI systems for disparities in performance across different patient groups. It ensures that AI models deliver equitable, safe, and effective care by identifying and addressing algorithmic risks that could lead to unfair outcomes.

Why is addressing AI bias important in healthcare?

Importance:

- Prevents misdiagnoses, delayed treatments, and inequitable care.

- Protects marginalized populations from being disproportionately affected by biased algorithms.

- Supports compliance with regulatory expectations, such as those of the FDA and, where applicable, the GDPR.

- Builds trust in AI systems by demonstrating fairness and transparency.

What causes AI bias in healthcare?

Causes:

- Underrepresented Data: Training datasets that fail to include diverse patient demographics.

- Flawed Algorithm Designs: Models that rely on biased proxies, such as zip codes or socioeconomic factors.

- Human Developer Biases: Implicit or explicit biases introduced during data collection, model development, or deployment.

- Systemic Inequities: Historical disparities in healthcare access and outcomes reflected in the data.

What are the best practices for mitigating AI bias?

Best Practices:

- Audit Data Quality: Ensure training data represents diverse patient populations and addresses underrepresented groups.

- Use Fairness Metrics: Apply metrics like demographic parity, equalized odds, and counterfactual fairness to evaluate performance across subgroups.

- Bias Mitigation Strategies: Implement pre-processing (e.g., data balancing), in-processing (e.g., fairness-aware algorithms), and post-processing (e.g., subgroup calibration or threshold adjustment) techniques.

- Establish Governance Controls: Set up monitoring dashboards, human-in-the-loop reviews, and centralized oversight to ensure accountability.

What tools and metrics are used to test AI bias?

Tools and Metrics:

- Interpretability Tools: SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) help explain predictions and identify biases.

- Fairness Metrics:

  - Demographic Parity: Compares positive prediction rates across groups.

  - Equalized Odds: Compares true positive and false positive rates across groups.

  - Counterfactual Fairness: Checks whether an individual's prediction would stay the same if their protected attribute, and its downstream effects, were different.

How can healthcare organizations build trust in AI systems?

Building Trust:

- Involve Diverse Stakeholders: Include patients, clinicians, data scientists, and ethicists in the AI development process.

- Increase Transparency: Document use cases, testing plans, and limitations of AI systems.

- Continuous Monitoring: Regularly audit AI systems for bias throughout their lifecycle to ensure ongoing fairness and compliance.