The Ghost in the Machine: Detecting AI-Generated Cyber Threats

Post Summary

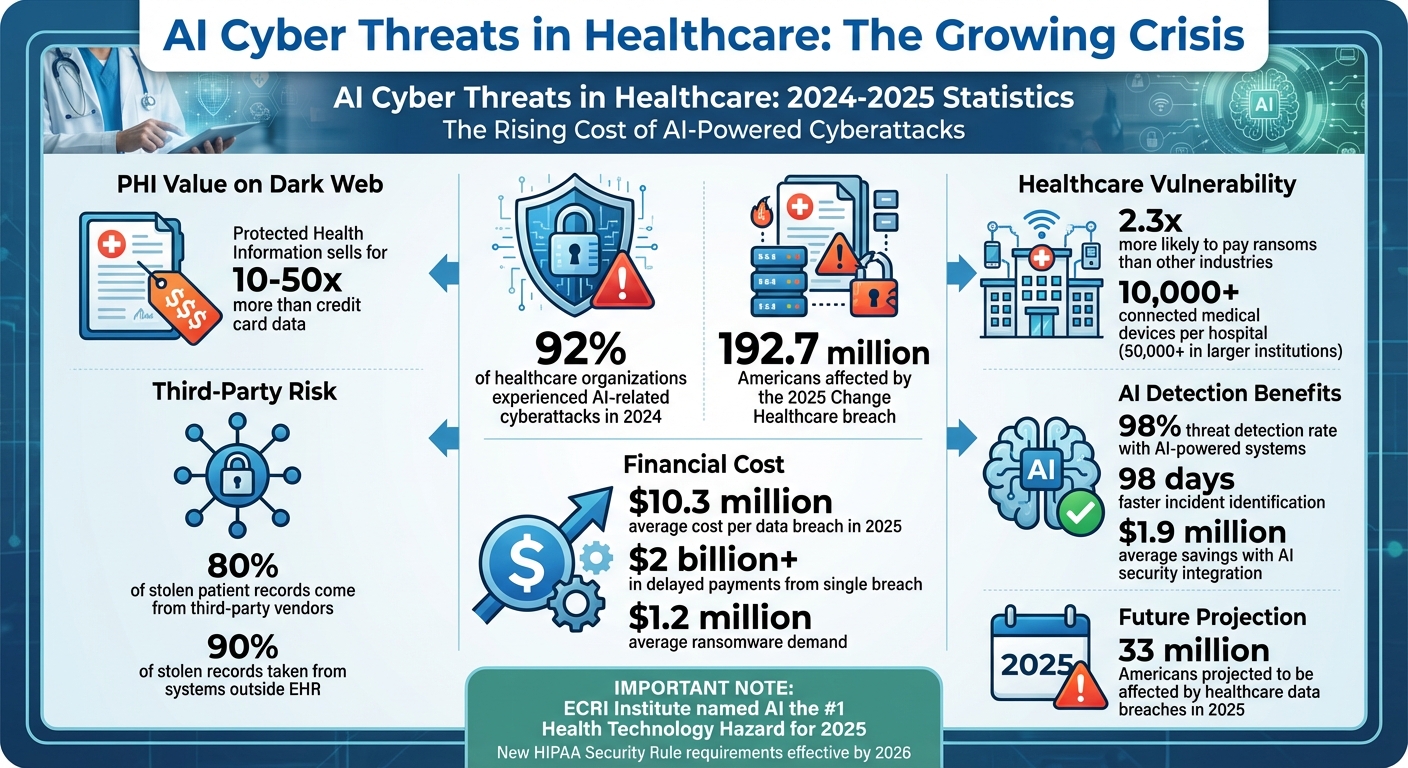

Healthcare organizations are facing a surge in cyberattacks fueled by AI. These attacks are more sophisticated, automated, and harder to detect than ever before. The risks include stolen medical data, ransomware, and even compromised medical devices that directly threaten patient safety. In 2024, 92% of healthcare organizations experienced AI-related cyberattacks, with breaches costing billions and affecting millions of Americans.

Key takeaways from this article:

- AI-driven threats: Cybercriminals use AI to automate phishing, deepfakes, and malware, bypassing traditional defenses.

- Healthcare vulnerabilities: Legacy systems, unsecured medical devices, and third-party vendor connections are major weak points.

- Detection strategies: AI-powered tools like Darktrace and Vectra AI can identify unusual activity and evolving threats faster than traditional methods.

- Risk management: Platforms like Censinet RiskOps™ centralize threat detection, risk assessments, and governance to improve defenses.

- Regulatory updates: Changes to HIPAA require stronger safeguards like encryption and multi-factor authentication by 2026.

Healthcare organizations must act now by combining AI-powered detection tools with strong governance frameworks to counter these growing threats effectively.

Infographic: AI Cyber Threats in Healthcare: 2024-2025 Statistics and Impact

AI-Generated Cyber Threats in Healthcare

AI-powered threats are reshaping the cybersecurity landscape in healthcare, allowing attackers to automate, scale, and adapt their methods to avoid detection. In 2024, 92% of healthcare organizations reported experiencing AI-related cyberattacks [4]. Protected health information (PHI) is particularly enticing to cybercriminals, often fetching 10–50 times more than credit card data on dark web marketplaces [4]. The Change Healthcare breach, which began in February 2024 and whose reported impact had grown to 192.7 million Americans by 2025, serves as a sobering example of these dangers. The breach not only exposed sensitive data but also caused over $2 billion in delayed payments by disrupting prescription processing and insurance claims [4]. These developments highlight the urgent need to understand the specific tactics employed in these attacks.

Common AI-Driven Attack Methods

Cybercriminals are leveraging generative AI to refine and intensify their attack strategies. Personalized phishing and social engineering have become more convincing, with attackers mimicking trusted contacts to exploit both technical and human vulnerabilities. Meanwhile, AI-enhanced malware and ransomware can scan healthcare networks, pinpoint weaknesses, and launch tailored attacks that evolve in response to defenses. Because these attacks pose immediate risks to patient safety, such as delayed surgeries or diverted ambulances, healthcare organizations are left with little choice and are 2.3 times more likely to pay ransoms [4].

Beyond ransomware, attackers are using advanced techniques like data poisoning and deepfake fraud. Data poisoning in particular can compromise medical AI systems, sometimes by altering as little as 0.001% of input data, leading to catastrophic diagnostic errors or treatment failures [4]. Such threats underscore the importance of addressing vulnerabilities within healthcare systems that make these attacks possible.

Healthcare System Vulnerabilities

Healthcare systems face unique challenges that make them particularly susceptible to AI-driven attacks. Many facilities rely on legacy systems and outdated medical devices that are difficult to update due to regulatory hurdles [4]. Hospitals, on average, manage over 10,000 connected medical devices, with larger institutions overseeing upwards of 50,000. Unfortunately, these devices often lack robust security measures, making them easy targets [4].

Another major vulnerability lies in the interconnected nature of healthcare operations. With thousands of third-party connections - such as electronic health record vendors, billing processors, laboratory networks, and telehealth platforms - attackers have numerous entry points to exploit. Alarmingly, 90% of stolen healthcare records are taken from systems outside the electronic health record, often through vendor-managed infrastructure [4].

Medical AI systems add another layer of risk. The ECRI Institute identified AI as the top health technology hazard for 2025, stating:

"AI topped this list not due to inherent malevolence but because of the catastrophic potential when medical AI systems fail or face compromise. Unlike traditional technology failures that might delay care or require workarounds, compromised AI systems can actively cause patient harm through incorrect diagnoses, inappropriate treatment recommendations, or medication errors." [4]

These systems, designed with a focus on accuracy and efficiency rather than security, are particularly vulnerable to adversarial attacks, data poisoning, and prompt injection. Such weaknesses can result in diagnostic mistakes, medication errors, or the exposure of sensitive patient data, highlighting the critical need for enhanced security measures in healthcare [4].

Detection Methods for AI-Driven Threats

Spotting AI-generated cyber threats demands a fresh approach compared to traditional security measures. These attacks are automated, constantly evolving, and often leave no recognizable patterns for standard tools to pick up. Strengthening detection is the first step in managing AI-related cybersecurity risks, especially in the healthcare sector. AI-powered systems have shown impressive results, achieving a 98% threat detection rate while cutting down incident identification time by 98 days. This time-saving is crucial since every hour of delay increases the chances of data breaches or system compromises [3] [4]. Organizations that heavily integrate AI into their security measures save an average of $1.9 million compared to those that don't [3]. These advanced capabilities are key to tackling both phishing/deepfake attacks and automated intrusions.

Detecting AI-Powered Phishing and Deepfakes

AI-generated phishing and deepfake attacks are particularly challenging because they replicate legitimate communications with incredible precision. Traditional email filters, which rely on spotting known malicious patterns or suspicious links, often fail to catch these advanced threats. Modern detection tools must go further, analyzing context, user behavior, and subtle inconsistencies to identify AI-generated content.

For example, AI-powered platforms like Tessian learn an organization’s typical communication patterns and flag unusual activities, such as misdirected emails or potential data leaks [6]. Tools like Vectra AI (Vectra Cognito) focus on analyzing network traffic for attacker behaviors rather than relying solely on malware signatures, making them effective against deepfake-related threats and sophisticated phishing attempts [6]. Similarly, self-learning systems like Darktrace create a "pattern of life" for every device and user, detecting even small deviations that could signal emerging threats, including zero-day attacks [6]. Microsoft Security Copilot integrates AI into Microsoft’s cybersecurity ecosystem, offering advanced threat intelligence and automation [6].

Healthcare organizations can strengthen their defenses by adopting robust validation frameworks to test AI models for vulnerabilities, including errors and adversarial manipulation [4]. This involves techniques like robustness testing, generating adversarial examples, and boundary testing to evaluate how models behave under attack. Regular employee training and simulated phishing exercises are also critical, as spotting deepfakes often requires close attention to subtle inconsistencies [2].
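
As a rough illustration of the boundary testing mentioned above, the sketch below nudges each input of a toy classifier by a small relative amount and collects the samples whose prediction flips. The model, its feature names, its coefficients, and the `epsilon` value are all hypothetical; a real validation framework would wrap the organization's own model and use clinically meaningful perturbations.

```python
from typing import Callable, Dict, List

Features = Dict[str, float]


def toy_risk_model(features: Features) -> bool:
    """Hypothetical stand-in for a clinical model: True means 'flag as high risk'."""
    score = 0.04 * features["heart_rate"] + 0.5 * features["lactate"]
    return score >= 5.0


def find_unstable_inputs(
    model: Callable[[Features], bool],
    samples: List[Features],
    epsilon: float = 0.02,
) -> List[Features]:
    """Return samples whose prediction flips when one feature shifts by +/- epsilon (relative)."""
    unstable = []
    for sample in samples:
        baseline = model(sample)
        for name, value in sample.items():
            nudged = [dict(sample, **{name: value * f}) for f in (1 - epsilon, 1 + epsilon)]
            if any(model(candidate) != baseline for candidate in nudged):
                unstable.append(sample)
                break  # one flip is enough to mark the sample as unstable
    return unstable


samples = [
    {"heart_rate": 70.0, "lactate": 1.0},   # well below the decision boundary
    {"heart_rate": 101.0, "lactate": 2.0},  # just above the decision boundary
    {"heart_rate": 130.0, "lactate": 6.0},  # well above the decision boundary
]
unstable = find_unstable_inputs(toy_risk_model, samples)
print(unstable)
```

Samples that flip under a 2% nudge are the ones an attacker could most cheaply push across the decision boundary, so they are reasonable candidates for closer review or for adversarial example generation.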

Identifying Automated Malware and Network Intrusions

Automated malware and AI-driven network intrusions operate differently from traditional threats. They adapt quickly, probe defenses, and exploit vulnerabilities faster than human attackers. Tools like Network Intrusion Detection Systems (NIDS) and Network Detection & Response (NDR) monitor internal network traffic in real time, flagging suspicious activities as they occur [3].

AI analytics play a vital role by establishing baseline behaviors for users and systems. They detect anomalies such as accessing sensitive files at odd hours or logging in from unexpected locations. Endpoint protection platforms, next-generation firewalls, and Managed Detection and Response (MDR) services incorporate AI to monitor network activity, identify irregularities, and respond swiftly to potential threats [3] [5]. Looking ahead, autonomous response systems are expected to take center stage, allowing AI tools to block suspicious activities or limit access immediately, minimizing damage in high-risk scenarios [3].
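
The baseline idea can be sketched in a few lines. The example below is a deliberately simple stand-in for what commercial tools do with far richer models: it learns a user's typical login hour and known locations, then reports reasons an event looks unusual. The `LoginEvent` fields, the site labels, and the `z_threshold` of 3.0 are assumptions made for illustration.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import List, Set


@dataclass
class LoginEvent:
    user: str
    hour: int       # hour of day, 0-23
    location: str   # hypothetical site label


@dataclass
class Baseline:
    mean_hour: float
    std_hour: float
    known_locations: Set[str]


def build_baseline(history: List[LoginEvent]) -> Baseline:
    """Summarize a user's past logins; assumes history is non-empty."""
    hours = [event.hour for event in history]
    return Baseline(mean(hours), pstdev(hours), {event.location for event in history})


def anomaly_reasons(event: LoginEvent, baseline: Baseline, z_threshold: float = 3.0) -> List[str]:
    """Return human-readable reasons the event deviates from the baseline."""
    reasons = []
    if event.location not in baseline.known_locations:
        reasons.append("unexpected location")
    # Floor the spread at one hour so a very regular user does not trigger on tiny shifts.
    spread = max(baseline.std_hour, 1.0)
    # Simplification: this ignores wrap-around at midnight (23:00 vs 00:00).
    if abs(event.hour - baseline.mean_hour) / spread > z_threshold:
        reasons.append("unusual hour")
    return reasons


history = [LoginEvent("nurse_a", h, "clinic-a") for h in (8, 9, 9, 10, 8, 9)]
baseline = build_baseline(history)
routine = anomaly_reasons(LoginEvent("nurse_a", 9, "clinic-a"), baseline)
suspicious = anomaly_reasons(LoginEvent("nurse_a", 3, "unknown-vpn"), baseline)
print(routine, suspicious)
```

Returning reasons rather than a bare score keeps the output reviewable by a human analyst, which matters when an alert may lead to restricting a clinician's access.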

Healthcare organizations must also secure their AI training pipelines against "poisoning attacks" by implementing data provenance and integrity controls [4]. This includes using cryptographic methods to verify training data sources, maintaining detailed audit trails for data changes, and keeping training processes separate from production environments. Additionally, staying on top of software updates and patches helps close vulnerabilities that AI-driven tools might exploit during reconnaissance [1] [2]. While AI excels at monitoring and handling lower-risk issues quickly, human analysts remain indispensable for assessing complex threats and making critical decisions [3]. Together, these detection strategies lay the groundwork for comprehensive risk management and governance frameworks to combat AI-driven threats effectively.
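
One way to picture the cryptographic verification of training data is a signed manifest of file hashes that is checked before every training run. The sketch below uses only Python's standard library; the file names, the demo key, and the helper names are hypothetical, and a production pipeline would keep the key in a secrets manager and log each check to an audit trail.

```python
import hashlib
import hmac
import json
import tempfile
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> Dict[str, str]:
    return {p.name: sha256_of(p) for p in sorted(data_dir.iterdir()) if p.is_file()}


def sign_manifest(manifest: Dict[str, str], key: bytes) -> str:
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_dataset(data_dir: Path, manifest: Dict[str, str], signature: str, key: bytes) -> List[str]:
    """Return a list of problems; an empty list means the data matches the signed manifest."""
    if not hmac.compare_digest(sign_manifest(manifest, key), signature):
        return ["manifest signature mismatch"]
    current = build_manifest(data_dir)
    problems = []
    for name, digest in manifest.items():
        if name not in current:
            problems.append(f"missing: {name}")
        elif current[name] != digest:
            problems.append(f"modified: {name}")
    problems += [f"unexpected: {name}" for name in current if name not in manifest]
    return problems


with tempfile.TemporaryDirectory() as tmp:
    data_dir = Path(tmp)
    (data_dir / "scans.csv").write_text("id,value\n1,0.42\n")
    (data_dir / "labels.csv").write_text("id,label\n1,benign\n")

    key = b"demo-key-only"  # hypothetical; never hard-code real keys
    manifest = build_manifest(data_dir)
    signature = sign_manifest(manifest, key)
    clean_result = verify_dataset(data_dir, manifest, signature, key)

    # Simulate a poisoning attempt: one label is silently flipped.
    (data_dir / "labels.csv").write_text("id,label\n1,malignant\n")
    tampered_result = verify_dataset(data_dir, manifest, signature, key)

print(clean_result, tampered_result)
```

Signing the manifest, not just hashing the files, means an attacker who can rewrite both the data and the manifest still cannot produce a valid signature without the key.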

Using Censinet RiskOps™ to Address AI Threats

As AI-driven cyber threats become more sophisticated, healthcare organizations need tools that centralize their response efforts. That’s where Censinet RiskOps™ steps in. This platform combines risk assessments, monitoring, and mitigation into one streamlined system, blending automated workflows with human oversight. By addressing the unique challenges posed by AI-based attacks, Censinet RiskOps™ transforms detection insights into actionable, organization-wide defenses.

Automated AI Threat Risk Assessments

One standout feature of Censinet RiskOps™ is its ability to speed up risk assessments using Censinet AI™. This tool automates critical steps in evaluating both third-party and internal risks tied to AI-generated threats. For instance, vendors can complete security questionnaires instantly, while the system compiles evidence, highlights integration details, and flags risks involving fourth-party vendors. It even generates risk summary reports, consolidating all relevant data to help healthcare organizations tackle potential threats much faster.

What makes this process even more effective is its human-in-the-loop design, which combines automation with customizable rules for validating evidence and mitigating risks. This approach directly addresses a key challenge with AI systems: their tendency for "hallucinations" - or producing inaccurate outputs - that demand human oversight [1].

AI Governance and Team Collaboration

Censinet RiskOps™ also acts as the central hub for AI governance and risk management, ensuring that key findings and tasks reach the right people at the right time. By routing assessment results to stakeholders such as AI governance committees and Governance, Risk, and Compliance (GRC) teams, the platform keeps everyone on the same page. Its real-time AI risk dashboard consolidates data into a user-friendly interface, making it easier to track policies, risks, and tasks across the organization.

This centralized system builds on earlier detection efforts by maintaining continuous oversight and enabling quick responses. Routine tasks are automated, freeing up experts to focus on strategic decisions [3]. Additionally, continuous monitoring ensures that AI systems remain accountable and adaptable, addressing the ever-changing nature of AI algorithms. By uniting stakeholders and streamlining risk management, Censinet RiskOps™ enables healthcare organizations to scale their operations without losing the critical human judgment needed to navigate complex AI-driven threats [1].

Building AI Governance Frameworks

When it comes to tackling AI-driven cyber threats, healthcare organizations can't rely solely on advanced detection tools. While these tools are essential for identifying risks, they need to be supported by strong governance frameworks to ensure long-term protection. These frameworks lay the groundwork for how AI systems are utilized, monitored, and secured - especially when they involve sensitive patient data or influence clinical decisions. Without clear policies and accountability, even the most advanced technology won't be enough to counter AI-powered threats.

Core Framework Elements

A solid governance framework starts with clear policies that define who may access AI systems and under what conditions they may be used. Only trained personnel should have access, and safeguards must be in place to protect confidential patient information [2]. These policies should integrate with existing Clinical Risk Management (CRM) and Enterprise Risk Management (ERM) systems, shifting organizations from reactive responses to proactive prevention [1].

Risk assessment procedures are another critical piece of the puzzle. These procedures should include scoring systems tailored to healthcare priorities, such as data sensitivity, system importance, access scope, and geographic considerations. It's also vital to test AI models for vulnerabilities, ensuring they can withstand attacks [4]. To further protect data integrity, implement controls like cryptographic verification, audit trails, and segregated environments to secure training pipelines against poisoning attacks [4]. With 80% of stolen patient records now linked to third-party vendors [4], these assessments must also measure both the current security posture and ongoing risks across your vendor network.
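
A scoring system of this kind might look like the following sketch. The four factors come from the paragraph above, but the weights, the 1-5 rating scale, the tier thresholds, and the vendor names are hypothetical choices made for illustration, not a published standard.

```python
from dataclasses import dataclass

# Hypothetical weights (they sum to 100); each organization would tune its own.
WEIGHTS = {
    "data_sensitivity": 40,
    "system_importance": 30,
    "access_scope": 20,
    "geographic_risk": 10,
}


@dataclass
class VendorProfile:
    name: str
    data_sensitivity: int   # 1 (public data) to 5 (full PHI)
    system_importance: int  # 1 (convenience) to 5 (life-critical)
    access_scope: int       # 1 (isolated) to 5 (broad network access)
    geographic_risk: int    # 1 (low) to 5 (high), per the organization's own policy


def risk_score(vendor: VendorProfile) -> float:
    """Weighted average of the 1-5 ratings, scaled so the result falls between 20 and 100."""
    total = sum(weight * getattr(vendor, factor) for factor, weight in WEIGHTS.items())
    return total / 5


def risk_tier(score: float) -> str:
    if score >= 75:
        return "high"
    if score >= 50:
        return "medium"
    return "low"


billing = VendorProfile("billing_processor", 5, 4, 4, 2)
kiosk = VendorProfile("scheduling_kiosk", 1, 2, 2, 1)
for vendor in (billing, kiosk):
    score = risk_score(vendor)
    print(vendor.name, score, risk_tier(score))
```

Keeping the weights in one table makes the prioritization explicit and auditable: a governance committee can review and adjust four numbers rather than reverse-engineering a formula.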

Accountability structures play a key role in fostering transparency. A no-blame culture encourages teams to report AI-related issues without fear, allowing organizations to address problems before they escalate [1]. As Di Palma et al. highlighted:

"The introduction of AI in healthcare implies unconventional risk management and risk managers must develop new mitigation strategies to address emerging risks" [1].

By adopting this proactive approach, healthcare organizations can better align with evolving industry standards and compliance requirements.

Meeting Industry Standards and Regulations

The regulatory landscape is shifting, particularly with updates to the HIPAA Security Rule. These changes now require specific technical controls in Business Associate Agreements (BAAs), such as multi-factor authentication (MFA), encryption, and network segmentation [4]. Healthcare organizations have just over a year to update their BAAs, transforming them into enforceable technical safeguards [4]. This isn't optional - it's a compliance mandate that demands immediate attention to identify and address non-compliant vendor relationships.
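
Identifying non-compliant vendor relationships can start with a simple gap check. The sketch below compares each vendor's attested controls against the three controls named above; the vendor names and the control identifiers are hypothetical labels, and the authoritative list of required safeguards is the rule text itself.

```python
from typing import Dict, List, Set

# Control labels taken from the safeguards named in the text; identifiers are illustrative.
REQUIRED_CONTROLS: Set[str] = {"mfa", "encryption", "network_segmentation"}


def missing_controls(vendor_controls: Dict[str, Set[str]]) -> Dict[str, List[str]]:
    """Map each non-compliant vendor to the required controls it has not attested to."""
    gaps = {}
    for vendor, controls in vendor_controls.items():
        missing = sorted(REQUIRED_CONTROLS - controls)
        if missing:
            gaps[vendor] = missing
    return gaps


attested = {
    "ehr_vendor": {"mfa", "encryption", "network_segmentation"},
    "telehealth_app": {"mfa", "encryption"},
    "lab_network": {"network_segmentation"},
}
gaps = missing_controls(attested)
print(gaps)
```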

Beyond HIPAA, organizations should implement continuous monitoring programs capable of real-time threat detection across vendor connections [4]. Periodic assessments are no longer sufficient in an era where AI-driven threats evolve daily. Establishing security information-sharing agreements with vendors promotes transparency, while managing fourth-party risks ensures that subcontractors meet the same security standards as primary vendors [4]. These measures significantly reduce vulnerabilities that AI-powered cyberattacks often exploit.
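
Fourth-party exposure is easier to reason about when vendor relationships are treated as a graph. The following sketch runs a breadth-first search from the organization and lists every supplier reached only through another vendor. The relationship map and all names in it are invented for illustration.

```python
from collections import deque
from typing import Dict, List


def parties_by_depth(relationships: Dict[str, List[str]], organization: str) -> Dict[str, int]:
    """Breadth-first search: depth 1 is a direct vendor, depth 2 a fourth party, and so on."""
    depth = {organization: 0}
    queue = deque([organization])
    while queue:
        current = queue.popleft()
        for supplier in relationships.get(current, []):
            if supplier not in depth:  # also protects against circular relationships
                depth[supplier] = depth[current] + 1
                queue.append(supplier)
    return depth


def indirect_suppliers(relationships: Dict[str, List[str]], organization: str) -> List[str]:
    """Suppliers reachable only through another vendor (fourth parties and beyond)."""
    depth = parties_by_depth(relationships, organization)
    return sorted(name for name, level in depth.items() if level >= 2)


relationships = {
    "hospital": ["ehr_vendor", "billing_processor"],
    "ehr_vendor": ["cloud_host"],
    "billing_processor": ["cloud_host", "print_shop"],
    "print_shop": ["ehr_vendor"],  # a cycle, which the search tolerates
}
fourth_parties = indirect_suppliers(relationships, "hospital")
print(fourth_parties)
```

In this toy map, `cloud_host` serves two direct vendors at once, which is the kind of shared dependency a flat vendor list would hide.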

With 92% of healthcare organizations experiencing AI-related cyberattacks in 2024 and the average data breach cost climbing to $10.3 million by 2025 [4], compliance isn't just about avoiding penalties. It's about safeguarding patient trust and ensuring the financial health of the organization.

Conclusion

Healthcare organizations are grappling with a serious cybersecurity crisis. Recent statistics reveal a troubling rise in attacks and the steadily increasing costs of breaches [4]. The ECRI Institute has identified AI as the top health technology hazard for 2025, emphasizing its dual role: while it enables advanced cyber threats, it also supports cutting-edge defense strategies [4].

Addressing these risks demands a layered strategy that integrates advanced detection technologies, strong governance practices, and ongoing monitoring. Still, technology alone isn’t enough - human oversight remains critical to ensure effective prevention, detection, response, and recovery [3].

To tackle these challenges, Censinet RiskOps™ offers healthcare organizations a centralized solution to manage evolving threats. The platform automates AI threat risk assessments, simplifies vendor security evaluations, and serves as a central hub for AI governance and risk management. With 80% of stolen patient records now linked to third-party vendors, adopting a comprehensive approach to risk management is no longer optional - it’s essential [4].

Staying ahead of these threats requires proactive vigilance. Organizations need to implement robust monitoring systems, establish clear accountability, and adapt to changing regulations like the updated HIPAA Security Rule. With ransomware demands averaging $1.2 million and 33 million Americans projected to be affected by healthcare data breaches in 2025, protecting patient safety and maintaining operational security is more than just a regulatory obligation - it’s a critical responsibility [4].

FAQs

What steps can healthcare organizations take to detect AI-driven cyber threats?

Healthcare organizations can stay ahead of AI-driven cyber threats by using AI-powered security tools. These tools are designed to monitor network traffic, sift through massive datasets to spot anomalies, and detect unusual user behavior in real time. This means they can catch advanced threats like automated phishing attacks or malware before they have a chance to cause damage.

Another key defense is regular cybersecurity training for staff. Running simulated phishing exercises, for example, helps employees become more adept at identifying sophisticated, AI-generated scams. On top of that, AI solutions that can pick up on subtle irregularities - like inconsistencies in deepfake media or strange communication patterns - offer an added layer of protection. By adopting these proactive measures, healthcare organizations can better safeguard sensitive data and critical systems from evolving cyber risks.

What are the main vulnerabilities in healthcare systems that AI-powered cyberattacks target?

Healthcare systems face unique risks when it comes to AI-driven cyberattacks, largely due to several vulnerabilities. These include outdated medical devices, unsecured hospital networks, misconfigured cloud platforms, exposed APIs, and data breaches. Cybercriminals exploit these weak points using advanced methods like automated reconnaissance, highly targeted phishing campaigns, and even deepfake-based social engineering tactics.

Beyond data breaches, AI-powered attacks can interfere with the decision-making processes of healthcare AI systems, which poses serious threats to both patient safety and the security of sensitive information. Mitigating these risks demands a proactive approach, including consistent system updates, implementing strong access controls, and establishing thorough AI governance frameworks specifically designed for the healthcare industry.

How does Censinet RiskOps™ help healthcare organizations manage AI-driven cyber threats?

Censinet RiskOps™ equips healthcare organizations with the tools they need to tackle AI-driven cyber threats head-on. Through detailed risk assessments, real-time monitoring, and automated incident response, it helps uncover vulnerabilities, implement AI governance policies, and address threats like advanced phishing, malware, and data manipulation attacks before they escalate.

By simplifying the process of detecting and managing threats, Censinet RiskOps™ safeguards patient data and ensures healthcare systems remain secure and reliable, helping organizations stay prepared for the ever-changing cyber threat landscape.