Governing the Ungovernable: New Frameworks for AI Risk Management

Post Summary

AI in healthcare is transforming patient care but comes with serious risks. Cybersecurity threats, algorithm bias, regulatory hurdles, and operational failures are major concerns. For example, a 2020 ransomware attack on a German hospital disrupted emergency care, and a patient who had to be diverted to another facility died. Incidents like this show what is at stake when clinical systems fail, and why AI systems need stronger oversight.

Key Takeaways:

- Top Risks: Cybersecurity breaches, bias in algorithms, compliance with regulations (like HIPAA), and system failures.

- Frameworks to Mitigate Risks:

  - NIST AI RMF: Focuses on governance, mapping risks, measuring performance, and managing issues across AI’s lifecycle.

  - COSO ERM: Connects AI risks to organizational strategies, treating them as enterprise-level concerns.

- Governance Practices:

  - Form AI oversight committees with cross-functional teams.

  - Manage AI systems at every stage - development, deployment, operation, and retirement.

  - Use tools like Censinet RiskOps for real-time monitoring and risk tracking.

Action Steps for Healthcare Organizations:

- Build governance committees to oversee AI policies and risks.

- Implement frameworks like NIST AI RMF and COSO ERM.

- Establish real-time monitoring systems and ensure human oversight of AI outputs.

AI in healthcare can improve outcomes, but only with strong governance, continuous monitoring, and ethical practices to ensure patient safety and trust.

AI Risk Governance Frameworks for Healthcare

NIST AI Risk Management Framework: Four Key Functions for Healthcare

Healthcare organizations don’t have to reinvent the wheel when it comes to managing AI risks. Two established frameworks - the NIST AI Risk Management Framework and the COSO Enterprise Risk Management framework - offer structured approaches that can be tailored to meet the specific challenges of healthcare environments.

These frameworks act as guides for healthcare cybersecurity teams, helping them identify, evaluate, and address risks tied to AI. The key is adapting these general frameworks to the unique demands of healthcare IT systems.

NIST AI RMF and Healthcare Applications

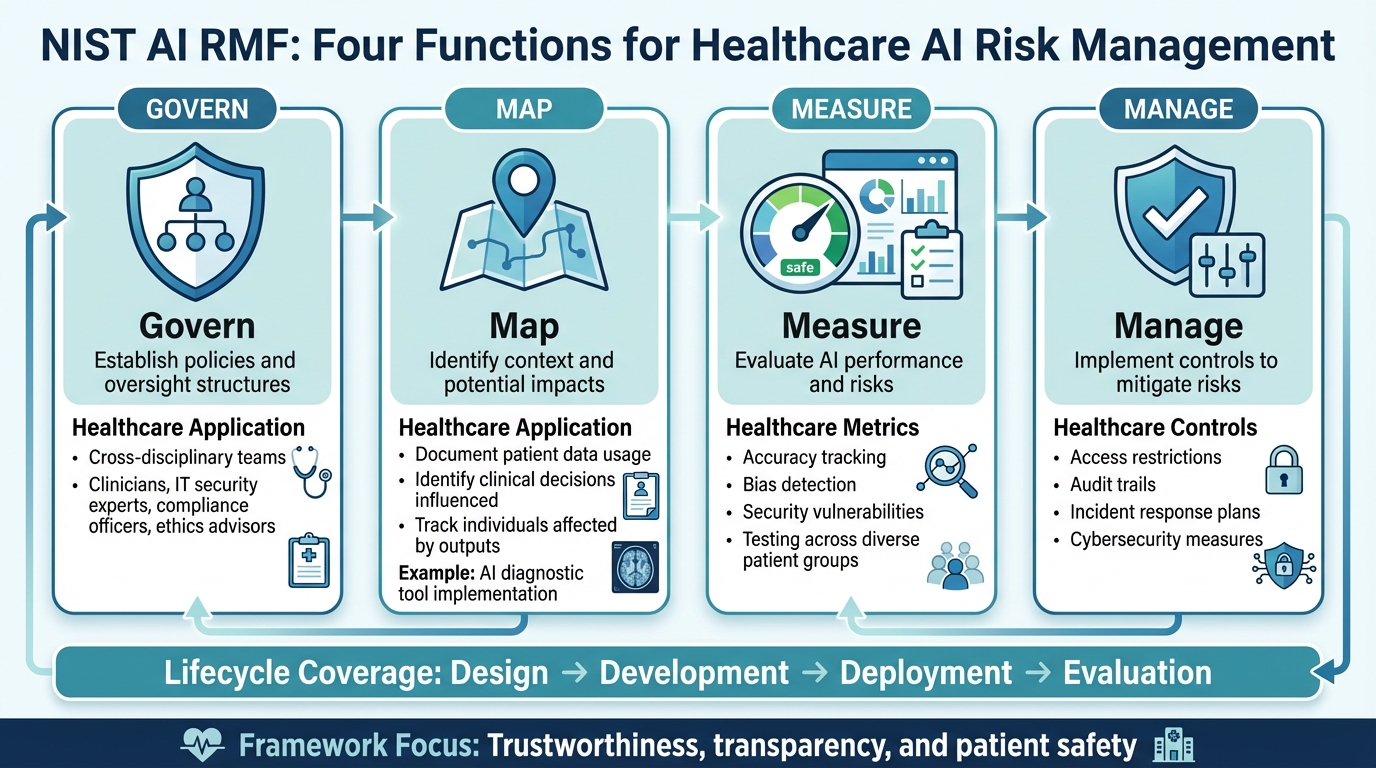

The NIST AI Risk Management Framework (AI RMF) builds on core risk management principles and provides targeted guidance for addressing AI risks. Designed as a voluntary tool, it helps organizations manage risks across the lifecycle of AI systems - from design and development to deployment and evaluation - with a focus on trustworthiness [4][5][6][2]. The framework organizes risk management into four key functions: Govern, Map, Measure, and Manage [5][6].

- Govern: This function establishes the policies and structures for overseeing AI systems. In healthcare, this often involves creating cross-disciplinary teams, including clinicians, IT security experts, compliance officers, and ethics advisors.

- Map: Here, organizations identify the context and potential impacts of their AI systems. For instance, if a healthcare provider is implementing an AI-powered diagnostic tool, this step would involve documenting the patient data being used, the clinical decisions it influences, and the individuals affected by its outputs.

- Measure: This step focuses on evaluating AI performance and risks using specific metrics. In healthcare, teams might track metrics for accuracy, bias detection, and security vulnerabilities. Regular testing for bias across diverse patient groups can help address performance issues before they escalate.

- Manage: This involves implementing controls to mitigate risks, such as access restrictions, audit trails, and incident response plans tailored to AI systems. Applying this function strengthens cybersecurity measures critical to patient safety.
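As a rough illustration of the Measure function, the sketch below compares model accuracy across patient groups and flags any group that trails the best-performing one. The function names, the 5-point gap threshold, and the sample records are assumptions made for this example; they are not part of the NIST AI RMF, which does not prescribe specific metrics.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, predicted, actual) triples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def groups_trailing_best(records, max_gap=0.05):
    """List groups whose accuracy is more than max_gap below the best group."""
    scores = accuracy_by_group(records)
    if not scores:
        return []
    best = max(scores.values())
    return sorted(g for g, score in scores.items() if best - score > max_gap)


# Hypothetical validation results: (patient group, model output, confirmed label)
sample = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

print(accuracy_by_group(sample))      # {'group_a': 1.0, 'group_b': 0.5}
print(groups_trailing_best(sample))   # ['group_b']
```

In practice a team would likely track several metrics per group (for example sensitivity and false-positive rate) rather than accuracy alone, and would choose the threshold with clinical input.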

Additionally, the NIST AI RMF Generative AI Profile (NIST AI 600-1) offers further guidance for generative AI technologies, which are becoming increasingly popular in healthcare for tasks like clinical documentation and patient communication.

COSO ERM Framework for Healthcare AI Risk Management

The COSO Enterprise Risk Management (ERM) framework offers a broader organizational approach, connecting AI risks to overall business strategies and goals [1]. While the NIST AI RMF zeroes in on AI-specific risks, COSO ERM takes a wider view, ensuring that AI risk management is integrated into the organization’s strategic planning.

For healthcare organizations, this means treating AI risks as strategic concerns rather than isolated IT issues. These risks can affect areas like patient safety, financial stability, and reputation. The COSO framework emphasizes risk identification and assessment as critical first steps. Healthcare providers should systematically evaluate all AI systems, identify potential failure points, and analyze the likelihood and impact of various risk scenarios. For example, what would happen if an AI scheduling system failed during peak patient hours? Or if a clinical decision tool produced biased recommendations?
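One common way to make likelihood-and-impact analysis concrete is a simple risk score. The sketch below is a minimal example, assuming a 1-to-5 scale for each dimension and a score equal to their product; the class name, the scale, and the ratings assigned to the two scenarios are illustrative assumptions, not values defined by COSO ERM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskScenario:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); assumed scale
    impact: int      # 1 (negligible) to 5 (severe); assumed scale

    def __post_init__(self):
        # Reject ratings outside the assumed 1-5 scale.
        for field_name in ("likelihood", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def rank_scenarios(scenarios):
    """Return scenarios ordered from highest to lowest score."""
    return sorted(scenarios, key=lambda s: s.score, reverse=True)


# Ratings below are made up for illustration.
scenarios = [
    RiskScenario("AI scheduling system fails during peak patient hours", likelihood=3, impact=3),
    RiskScenario("Clinical decision tool produces biased recommendations", likelihood=2, impact=5),
]

for scenario in rank_scenarios(scenarios):
    print(scenario.score, scenario.name)
```

With these assumed ratings, the biased-recommendation scenario (score 10) ranks above the scheduling outage (score 9), even though it is rated as less likely.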

By embedding AI risk management within the broader ERM framework, healthcare leaders can ensure that governance, strategy, and risk assessments work together seamlessly. This approach supports informed decision-making about AI investments and resource allocation for risk mitigation, ultimately strengthening the organization’s ability to handle AI-related challenges.

Aligning Frameworks with Regulatory and Ethical Standards

AI governance in healthcare requires establishing policies and standards that ensure AI systems are secure, compliant, and aligned with medical ethics. Key ethical principles - accountability, transparency, fairness, and safety - are central to maintaining trust in healthcare delivery [7].

The regulatory environment for AI in the United States is complex, with no single overarching federal law. Instead, organizations must navigate a mix of FDA guidelines, voluntary frameworks such as the NIST AI RMF, and sector-specific regulations like HIPAA [7][8].

- HIPAA compliance: Any AI system handling protected health information must meet HIPAA requirements. This includes implementing technical safeguards, ensuring AI vendors are covered by business associate agreements, and maintaining audit logs to track data flow.

- FDA guidelines: AI systems classified as medical devices, such as diagnostic tools, must comply with the FDA’s risk-based classification system. This includes adhering to monitoring and reporting requirements.

- HHS AI strategy: The Department of Health and Human Services (HHS) emphasizes transparency and accountability in AI deployment. Organizations should document AI decision-making processes, assign clear responsibilities for AI outcomes, and provide mechanisms for patients and providers to question or challenge AI-generated recommendations.

Aligning frameworks with these standards isn’t a one-time task. Instead, it’s an ongoing process that evolves as regulations change and as organizations grow more sophisticated in their use of AI. By staying proactive, healthcare organizations can ensure their AI systems remain safe, ethical, and compliant.

Implementing AI Risk Governance in Healthcare IT

For healthcare organizations, managing AI risks effectively means putting practical governance structures into place. This involves building dedicated teams, creating clear processes, and leveraging reliable tools to keep AI systems secure and compliant throughout their lifecycle. These steps bring theoretical AI risk management frameworks into real-world, day-to-day operations.

The current state of affairs is concerning: just 16% of health systems have a system-wide governance policy specifically addressing AI usage and data access [9]. This lack of preparation leaves many organizations exposed to risks that proper governance can mitigate. Addressing this starts with three key actions: forming oversight committees, managing AI systems throughout their lifecycle, and centralizing governance efforts.

Creating AI Governance Committees

AI governance can't be confined to a single department - it demands collaboration across multiple functions. A well-rounded governance committee should include security experts, compliance officers, clinical leaders, and data scientists [3][5]. Each member plays a critical role: security teams tackle technical vulnerabilities, clinicians focus on patient safety, compliance officers ensure adherence to regulations, and data scientists monitor algorithm performance.

"AI risk management begins with leadership. Boards and executives must embed AI oversight into the organization's culture, ensuring that ethical use, transparency, and accountability are prioritized."

– Performance Health Partners [9]

These committees should have the authority to review and approve AI implementations, define approval workflows for new tools, and establish clear escalation paths for addressing risks. Their responsibilities should span the entire AI lifecycle - from development and validation to deployment and eventual retirement [3][5]. This means they aren’t just approving purchases; they actively oversee how systems perform, how they’re used, and when they need to be decommissioned. Regular meetings are essential for reviewing inventories, identifying emerging risks, and updating governance policies as needed.

Managing AI Systems Throughout Their Lifecycle

Effective governance goes beyond forming committees - it requires hands-on oversight of AI systems at every stage, from development to decommissioning. AI systems evolve over time as they process new data, receive updates, or experience performance shifts. Keeping track of these changes is essential. Start by maintaining a comprehensive inventory of all AI systems, documenting their functions, dependencies, and potential vulnerabilities [3].

A useful approach is to classify AI tools using a five-level autonomy scale, which aligns the level of human oversight with the system's risk profile [3]. For instance, a low-risk AI chatbot that answers general health questions may need minimal oversight, while a high-risk diagnostic tool guiding treatment decisions requires much closer monitoring. This framework helps prioritize governance efforts and allocate resources efficiently.
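The sketch below shows one way such a classification could drive oversight requirements in code. The five level names, the rule that treatment-influencing tools are bumped up one level, and the three oversight tiers are all hypothetical placeholders; the cited guidance should be consulted for the actual scale.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Five illustrative levels; the names are placeholders, not the cited scale."""
    INFORMATIONAL = 1   # surfaces general information only
    ASSISTIVE = 2       # drafts content that a person finishes
    ADVISORY = 3        # recommends an action for a person to take
    CONDITIONAL = 4     # acts on its own within preset limits
    AUTONOMOUS = 5      # acts without a person in the loop


def required_oversight(level: AutonomyLevel, influences_treatment: bool) -> str:
    """Map autonomy and clinical impact to an oversight tier (hypothetical policy)."""
    # Tools that influence treatment are treated as one level riskier, capped at 5.
    effective = min(int(level) + (1 if influences_treatment else 0), 5)
    if effective <= 2:
        return "periodic audit"
    if effective <= 4:
        return "human review of sampled outputs"
    return "human approval of every output"


# A general health chatbot versus a diagnostic tool guiding treatment.
print(required_oversight(AutonomyLevel.INFORMATIONAL, influences_treatment=False))
print(required_oversight(AutonomyLevel.CONDITIONAL, influences_treatment=True))
```

Keeping the policy in a single function makes it easy for a governance committee to review and change the mapping without touching the inventory itself.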

Key control points should be identified and monitored during each phase of the AI lifecycle:

- Development and Validation: Test for biases, ensure data quality, and document decision-making logic.

- Deployment: Set up strict access controls, enable audit logging, and train users on proper system use.

- Ongoing Operations: Continuously monitor for performance issues, security threats, and compliance lapses.

- Decommissioning: Follow secure data deletion and system retirement protocols to minimize residual risks.

Clearly defining roles and responsibilities for each of these stages is critical [3][5]. For example, who approves new AI tools? Who reviews audit logs? Who intervenes when an AI system produces questionable outputs? Addressing these questions upfront helps prevent future complications.
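An AI inventory can encode the control points above so that gaps are visible per system. The following is a minimal sketch: the control identifiers are shorthand derived from the four lifecycle phases listed earlier, and the record fields (`name`, `stage`, `owner`) are assumptions about what an inventory entry might hold.

```python
from dataclasses import dataclass, field

# Control identifiers are illustrative shorthand for the lifecycle control points.
REQUIRED_CONTROLS = {
    "development_and_validation": {"bias_testing", "data_quality_check", "decision_logic_documented"},
    "deployment": {"access_controls", "audit_logging", "user_training"},
    "ongoing_operations": {"performance_monitoring", "security_monitoring", "compliance_monitoring"},
    "decommissioning": {"secure_data_deletion", "system_retirement"},
}


@dataclass
class AISystemRecord:
    name: str
    stage: str
    owner: str  # person or role accountable for this stage
    completed_controls: set = field(default_factory=set)

    def missing_controls(self):
        """Return the controls still outstanding for the system's current stage."""
        if self.stage not in REQUIRED_CONTROLS:
            raise ValueError(f"unknown lifecycle stage: {self.stage}")
        return sorted(REQUIRED_CONTROLS[self.stage] - self.completed_controls)


# Hypothetical inventory entry.
record = AISystemRecord(
    name="sepsis-risk-model",
    stage="deployment",
    owner="clinical informatics lead",
    completed_controls={"access_controls"},
)
print(record.missing_controls())  # ['audit_logging', 'user_training']
```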

Using Censinet for AI Risk Governance

Centralized solutions like Censinet RiskOps can streamline the implementation of these governance measures. Censinet simplifies the process by consolidating policies, assessments, and real-time monitoring, ensuring that elevated risks are promptly flagged and routed to the appropriate stakeholders. Its AI risk dashboard provides governance committees with a clear, real-time view of all AI-related risks across the organization.

Additionally, Censinet AI enhances risk assessments by automating tasks like evidence validation, summarizing vendor documentation, and identifying risks from third-party vendors. This system incorporates human oversight through customizable review processes, ensuring that automation supports decision-making rather than replacing it. This approach allows risk teams to scale their efforts without compromising safety.

When an AI system presents a significant risk, the platform immediately alerts the relevant committee members, tracks remediation efforts, and maintains detailed audit trails. This ensures accountability and continuous oversight, enabling organizations to address issues efficiently and effectively, while maintaining a culture of transparency and safety.


Methods for AI Risk Assessment and Monitoring

Practical methods for assessing and monitoring AI risks are essential to managing those risks throughout the AI system lifecycle. While governance frameworks lay the groundwork, the real challenge lies in implementing systems that go beyond policies - like technical assessments, detailed documentation, and real-time monitoring - to detect and address potential issues before they escalate.

In 2025, the Health Sector Coordinating Council (HSCC) Cybersecurity Working Group launched an AI Cybersecurity Task Group, involving 115 healthcare organizations, to create operational guidance for handling AI risks [3]. Their findings emphasize that AI systems require dedicated assessment techniques that go beyond traditional cybersecurity measures. For instance, AI models may degrade over time, produce biased outputs, or face threats like model poisoning, all of which demand a tailored approach.

Technical Methods for AI Risk Assessment

AI risk assessment starts with AI-specific threat modeling. This involves identifying vulnerabilities unique to machine learning models, such as adversarial attacks designed to manipulate outputs, data corruption, or model drift - when a model’s performance declines as data patterns evolve. Healthcare organizations should embed these assessments into their existing cybersecurity strategies, creating an AI Security Risk Taxonomy to address the unique risks of various AI technologies, not just large language models [3].

Adversarial testing is another key practice. This process identifies weaknesses by simulating attacks or challenges. For example, a team might test whether an AI diagnostic tool can be misled by altered medical images, or evaluate how a clinical documentation assistant handles ambiguous inputs. The HSCC Cyber Operations and Defense subgroup highlights the importance of resilience testing, which relies on collaboration between cybersecurity and data science teams [3].

Monitoring model performance is equally critical. By tracking data distribution shifts, changes in prediction accuracy, or unusual output patterns, organizations can identify and address potential problems. Feedback loops are essential here, allowing teams to continuously refine risk management strategies based on new insights or technological advancements [5]. Importantly, this monitoring must happen continuously, not just during the initial deployment phase.
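One widely used way to quantify a data distribution shift is the Population Stability Index (PSI), which compares how a feature is distributed in a baseline sample versus a recent one. The sketch below is a minimal standard-library implementation; the bin edges, the sample values, and the function name are assumptions for illustration, and the source does not prescribe PSI specifically.

```python
import math


def population_stability_index(baseline, current, bin_edges, epsilon=1e-6):
    """Compare two samples of one numeric feature; 0.0 means identical binned distributions."""

    def bin_fractions(values):
        counts = [0] * (len(bin_edges) - 1)
        for value in values:
            # Values outside the outermost edges are ignored.
            for i in range(len(counts)):
                is_last_bin = i == len(counts) - 1
                in_bin = bin_edges[i] <= value < bin_edges[i + 1]
                # The final bin also includes its upper edge.
                if in_bin or (is_last_bin and value == bin_edges[-1]):
                    counts[i] += 1
                    break
        total = sum(counts)
        if total == 0:
            raise ValueError("no values fall inside the bin edges")
        # Floor each fraction at epsilon so empty bins do not cause log(0).
        return [max(count / total, epsilon) for count in counts]

    base = bin_fractions(baseline)
    cur = bin_fractions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Hypothetical model risk scores at validation time versus last week.
validation_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
recent_scores = [0.6, 0.7, 0.8, 0.9, 0.95, 0.85, 0.1, 0.75]

psi = population_stability_index(validation_scores, recent_scores, bin_edges=[0.0, 0.5, 1.0])
print(round(psi, 3))  # 0.73
```

A commonly cited rule of thumb treats PSI values above roughly 0.25 as a significant shift worth investigating, though any alerting threshold should be validated against the organization's own data.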

These technical evaluations are supported by robust documentation, which enhances both transparency and accountability.

Documentation and Audit Practices for AI Systems

Comprehensive documentation is crucial for understanding and managing AI systems. This includes detailing data lineage (where training data originates), feature selection (the variables the model uses), validation methods, and strategies for mitigating bias [12][13]. Such documentation not only supports compliance but also helps troubleshoot issues when they arise.

Standardized formats like model cards and data sheets provide clear insights into an AI system’s development, including its training data, known limitations, and regulatory status, such as FDA approvals [12][13]. In 2025, the Office of the National Coordinator for Health Information Technology mandated that health IT developers disclose this information under the ONC HTI-1 Rule, ensuring AI systems are not "black boxes" and that clinicians can understand how recommendations are made [12].

An AI Compliance Log is another essential tool. This log should record details such as the AI tools in use, their versions, reviewer information, and outcomes. For example, when AI suggests a diagnosis or treatment, the log must show that a qualified human reviewed and approved the recommendation.
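A compliance log entry of this kind might be modeled as follows. This is a sketch under stated assumptions: the field names, the three allowed outcomes, and the rule that an entry cannot be created without a named reviewer are illustrative choices, not a format required by any regulation cited here.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

ALLOWED_OUTCOMES = {"approved", "edited", "rejected"}  # assumed outcome vocabulary


@dataclass(frozen=True)
class ComplianceLogEntry:
    tool_name: str
    tool_version: str
    recommendation: str
    reviewer_id: str
    reviewer_credentials: str
    outcome: str
    reviewed_at: str  # ISO 8601 timestamp in UTC


def record_review(tool_name, tool_version, recommendation,
                  reviewer_id, reviewer_credentials, outcome, now=None):
    """Create a log entry, refusing to record output that no human reviewed."""
    if not reviewer_id.strip():
        raise ValueError("a human reviewer must be identified")
    if outcome not in ALLOWED_OUTCOMES:
        raise ValueError(f"outcome must be one of {sorted(ALLOWED_OUTCOMES)}")
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return ComplianceLogEntry(tool_name, tool_version, recommendation,
                              reviewer_id, reviewer_credentials, outcome, timestamp)


# Hypothetical entry; all values are placeholders.
entry = record_review(
    tool_name="coding-assistant",
    tool_version="2.1.0",
    recommendation="Suggested diagnosis code for encounter 123",
    reviewer_id="reviewer-001",
    reviewer_credentials="RHIA",
    outcome="approved",
)
print(json.dumps(asdict(entry)))  # one JSON line per reviewed recommendation
```

Writing entries as append-only JSON lines keeps the log easy to audit, since each record carries the tool version and the accountable reviewer together.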

"AI can’t sign attestations or testify in an audit. AI can’t assume OIG responsibility. It is the human reviewer who remains accountable, always."

– Melinda McGuire, MS, Master of Am Studies, RHIA [10]

Healthcare organizations must also independently validate AI model performance using their own data, rather than relying solely on vendor-provided scorecards [12]. For instance, in 2025, Mass General Brigham conducted a pilot study for generative AI in ambient documentation systems. They ensured privacy by sharing only deidentified data with vendors, prohibited long-term data storage, and required clinicians to review and edit all AI-generated clinical messages before they were sent to patients. They tracked system usage, monitored edits, and gathered feedback to refine performance and maintain accountability [13].

Feedback loops and discrepancy tracking are vital for continuous improvement. Organizations should monitor overrides, errors, and discrepancies in AI outputs, using this information to update models or review vendor performance [10][11]. For example, UST Health Proof revamped its chart review process in 2025 by implementing AI to handle diverse medical records, from handwritten notes to complex 10,000-page electronic records. Their approach improved productivity while maintaining a 95% inter-rater reliability score. Importantly, no AI recommendations were used without human coder review, ensuring compliance and accuracy [11].
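Override tracking can start with something as simple as the share of AI outputs that reviewers changed or rejected. The sketch below assumes each review is stored as a `(tool, outcome)` pair with outcomes of "accepted", "edited", or "rejected"; the 20% threshold and the minimum sample size are arbitrary example values.

```python
from collections import Counter


def override_rates(reviews):
    """Return {tool: fraction of outputs that reviewers edited or rejected}."""
    totals, overrides = Counter(), Counter()
    for tool, outcome in reviews:
        totals[tool] += 1
        if outcome in ("edited", "rejected"):
            overrides[tool] += 1
    return {tool: overrides[tool] / totals[tool] for tool in totals}


def tools_needing_review(reviews, max_rate=0.2, min_reviews=5):
    """List tools with enough reviews whose override rate exceeds max_rate."""
    reviews = list(reviews)  # allow any iterable, since we pass over it twice
    totals = Counter(tool for tool, _ in reviews)
    rates = override_rates(reviews)
    return sorted(
        tool for tool, rate in rates.items()
        if totals[tool] >= min_reviews and rate > max_rate
    )


# Hypothetical review outcomes for two tools.
reviews = (
    [("coding_assistant", "accepted")] * 3
    + [("coding_assistant", "edited"), ("coding_assistant", "rejected")]
    + [("triage_bot", "accepted")] * 5
)

print(override_rates(reviews))        # {'coding_assistant': 0.4, 'triage_bot': 0.0}
print(tools_needing_review(reviews))  # ['coding_assistant']
```

The minimum-review guard avoids flagging a tool on the basis of one or two early overrides.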

Real-Time Monitoring with Censinet Tools

Real-time monitoring tools are indispensable for maintaining oversight of AI systems. Centralized platforms make this process scalable. For instance, Censinet RiskOps offers a real-time AI risk dashboard that consolidates data from various sources, providing a clear and up-to-date view of system performance.

Censinet AI further enhances monitoring by automating routine governance tasks while keeping human oversight intact. The platform validates vendor evidence, summarizes documentation, identifies risks from third-party vendors, and flags compliance issues. When a risk is detected - such as a vendor security breach or a sudden drop in AI performance - alerts are sent to designated stakeholders, including members of the AI governance committee. Detailed audit trails document every action taken, ensuring accountability.

This "air traffic control" approach ensures that the right teams address the right issues promptly. Automation supports, rather than replaces, human decision-making, allowing healthcare organizations to manage risks more efficiently while safeguarding patient safety.

Incident reporting tools also play a crucial role. These allow staff to document and categorize AI-related issues in real time, detect patterns, and shift from reactive to preventive risk management [9].

"The integration of technologies such as artificial intelligence and digital information systems enhances the ability to monitor, assess, and respond to clinical risks with greater speed and precision."

– Gianmarco Di Palma et al. [1]

Next Steps for AI Risk Management

Key Points for AI Risk Governance

Managing AI risks in healthcare requires structured frameworks like NIST AI RMF and COSO ERM, along with the support of dedicated AI governance committees [3]. These frameworks provide the foundation, but success also depends on technical evaluations, thorough documentation, and real-time monitoring. Tools like Censinet RiskOps streamline this process by automating risk governance tasks and quickly identifying critical issues. The goal? Ensuring AI systems remain transparent, accountable, and compliant with regulations throughout their entire lifecycle.

By putting these governance practices in place, healthcare organizations can begin advancing toward higher levels of AI risk maturity.

Creating a Roadmap for AI Risk Maturity

With frameworks and governance strategies established, the next step is to develop a clear roadmap for improving AI risk management. Start by evaluating your organization's current capabilities. To help with this, the Health Sector Coordinating Council is working on an AI Governance Maturity Model, set to launch in 2026, which will offer benchmarks for progress [3].

Begin with organization-wide training to increase awareness of AI risks and proper usage [3]. Establish well-defined governance processes and assign clear accountability for AI oversight. Implement an Enterprise Risk Management (ERM) framework tailored to address AI's specific challenges, moving beyond simple compliance checklists to tackle both technical and societal concerns [9].

Focus on prioritizing risks based on their likelihood and potential impact. This helps allocate resources where they are needed most [5][9]. Encourage collaboration across teams - cybersecurity, data science, clinical, and compliance professionals should work together to create feedback loops. These feedback loops are essential for driving ongoing improvements and transitioning from reactive problem-solving to proactive risk prevention [1].

FAQs

What steps can healthcare organizations take to implement AI risk management frameworks like NIST AI RMF and COSO ERM effectively?

Healthcare organizations can apply AI risk management frameworks like NIST AI RMF and COSO ERM by setting up clear governance policies, performing regular risk assessments, and encouraging teamwork across departments to tackle AI-related challenges. Prioritizing transparency, fairness, and compliance with laws like HIPAA is essential.

To enhance risk management efforts, organizations can use real-time monitoring tools, tap into AI-powered predictive analytics, and promote ongoing improvements. Building a culture of accountability and conducting consistent reviews can help address risks like data breaches, algorithmic bias, and regulatory issues, creating a more secure and reliable healthcare IT system.

How can healthcare organizations reduce algorithm bias and ensure fairness in AI systems?

To reduce bias in algorithms and ensure equity in AI systems within healthcare, organizations can take several practical steps:

- Use diverse datasets: Training models with datasets that truly represent the population they aim to serve helps cut down the risk of skewed or biased results.

- Apply fairness metrics: Incorporate metrics specifically designed to detect and measure bias during both the development and deployment stages.

- Perform regular audits: Consistently review models to spot and correct any emerging biases over time.

- Bring in multidisciplinary teams: Engage experts from various fields - like clinicians, data scientists, and ethicists - to provide a well-rounded perspective on AI applications.

- Adopt explainable AI (XAI): Use tools that make AI decisions more transparent, allowing stakeholders to understand and trust how outcomes are determined.

These steps can help healthcare organizations create AI systems that are not only more reliable but also better aligned with ethical principles and patient care needs.

Why is real-time monitoring essential for managing AI risks in healthcare, and how does Censinet RiskOps support this effort?

Real-time monitoring plays a crucial role in managing AI risks within the healthcare sector. It allows for the immediate detection of anomalies, vulnerabilities, and cyber threats as they arise. This quick response capability is vital for safeguarding sensitive patient data, preventing breaches, and reducing the potential for misuse.

Censinet RiskOps contributes to this by automating risk assessments and providing continuous identification of vulnerabilities. This system empowers healthcare organizations to address issues before they grow into larger problems. By simplifying these processes, it strengthens security measures, ensures regulatory compliance, and fosters confidence in the adoption of AI systems in healthcare settings.