“HIPAA and the Algorithm: What Happens When AI Gets It Wrong?”

Post Summary

AI errors can lead to misdiagnoses, data breaches, and HIPAA violations, jeopardizing patient safety and trust.

AI errors can expose sensitive patient data, violate privacy regulations, and create compliance gaps in healthcare organizations.

Risks include algorithmic bias, data inaccuracies, lack of transparency, and vulnerabilities to cyberattacks.

Organizations can adopt AI governance frameworks, conduct bias audits, and integrate AI into broader risk management strategies.

Transparency ensures accountability, builds trust, and helps organizations identify and address algorithmic errors.

Benefits include improved patient safety, enhanced compliance, reduced operational risks, and stronger trust among stakeholders.

Artificial Intelligence (AI) is transforming healthcare but brings risks when it fails, especially under HIPAA rules. Key issues include:

- Data breaches: Poorly secured AI systems have exposed patient data, e.g., one breach discussed below affected roughly 483,000 patients.

- Re-identification risks: AI can link de-identified data back to individuals, violating privacy.

- Misconfigured systems: Poorly secured AI tools and cloud services lead to data exposure.

- Bias and misuse: AI models trained improperly can mishandle sensitive data.

Financial and reputational impact:

- HIPAA fines range from $141 to $2.1M per violation.

- Data breaches damage trust, costing millions in recovery and reputation repair.

Solutions:

- Establish AI governance teams to monitor risks.

- Use encryption, role-based access, and regular audits.

- Automate risk management with tools like Censinet RiskOps™ to ensure compliance.

AI offers promise but requires strict oversight to protect patient privacy and avoid costly violations.

AI Failures in Healthcare and Regulatory Consequences

AI missteps in healthcare can lead to HIPAA violations, carrying hefty financial penalties and tarnishing reputations.

AI Error Types That Affect HIPAA Compliance

When it comes to HIPAA compliance, AI systems tend to fail in four distinct ways.

Unauthorized data sharing is a major compliance pitfall. This happens when AI systems mistakenly share patient information with parties who aren’t authorized to see it. Often, this stems from poorly configured integrations or weak access controls. A striking example involved a hospital's AI chatbot that inadvertently shared sensitive patient data with third-party analytics providers. Without proper safeguards or consent in place, this amounted to a direct HIPAA violation [4].

Biased algorithms and improper data handling also pose significant risks. These occur when AI systems use patient data in ways that breach HIPAA rules on data usage and disclosure. For instance, one healthcare organization shared a machine learning model trained on identifiable patient data with an external research team. The failure to properly de-identify the data led to a clear violation of HIPAA regulations [4].

Misconfigured cloud services are another growing concern as healthcare organizations increasingly move AI workloads to the cloud. Improperly set security configurations can leave sensitive patient data exposed, creating vulnerabilities.

De-identification failures present unique challenges for AI systems. Unlike traditional data processing, AI can unintentionally re-identify patients by piecing together information from multiple sources. This makes previously de-identified data susceptible to being traced back to individuals.

Beyond these specific errors, AI systems often struggle with operational issues like misinterpreting user input, mishandling context, overlooking sensitive data, or generating inaccurate outputs [6]. Each of these technical glitches can lead to improper handling of protected health information (PHI), paving the way for HIPAA violations. These risks highlight the pressing need for strong governance and rapid incident response mechanisms.

Case Studies of AI-Related HIPAA Breaches

Recent incidents provide a glimpse into how AI failures can quickly escalate into severe HIPAA violations.

The Serviceaide, Inc. breach in May 2025 is a stark example. An unsecured database exposed the protected health information of 483,126 patients from Catholic Health in Buffalo. This database was accessible online without a password, showcasing how infrastructure lapses can lead to massive data breaches [7][9].

Similarly, Comstar, LLC faced significant consequences in May 2025 after a ransomware attack compromised the PHI of 585,621 individuals. Investigators discovered that the organization had failed to conduct a HIPAA-compliant risk analysis, leaving their AI-enhanced systems vulnerable to such attacks [7][9].

A different kind of issue arose in the Blue Shield of California case, spanning 2021 to 2024. Misconfigured Google Analytics code led to the inadvertent sharing of member data with the Google Ads platform, potentially affecting 4.7 million members. This incident demonstrated how routine analytics and AI integrations can spiral into major compliance breaches when not properly managed [8].

Outside the healthcare sector, regulatory bodies have also taken action against companies mishandling health data through AI systems. The Federal Trade Commission (FTC) has been especially active in this area.

In February 2023, the FTC filed a complaint against GoodRx Holdings, Inc. for failing to notify over 55 million consumers about unauthorized disclosures of their health information to platforms like Facebook and Google. This case highlighted how AI-powered marketing and analytics tools can lead to widespread privacy violations [2].

Another example is the BetterHelp Inc. case from March 2023. The FTC alleged that the company shared consumers’ health data with third parties, including Facebook, Snapchat, Pinterest, and Criteo, for advertising purposes. These cases underscore how AI systems meant to enhance user experiences can inadvertently cause significant compliance breaches [2].

Regulatory bodies have responded with increasing scrutiny. In 2024, the U.S. Department of Justice issued subpoenas to several pharmaceutical and digital health companies to investigate their use of generative AI in electronic medical record (EMR) systems. The focus was on whether these tools contributed to excessive or unnecessary care [10].

A settlement in Texas further illustrated these concerns. A company selling a generative AI tool marketed it as "highly accurate" for documenting patient conditions and treatment plans. However, allegations arose of false and deceptive claims about the tool’s accuracy, highlighting how AI failures can lead to both compliance and fraud issues [10].

Financial and Legal Impact of AI-Related HIPAA Violations

When AI systems malfunction and lead to HIPAA violations, the consequences for healthcare organizations go far beyond fines. The ripple effects touch every aspect of operations - financial, legal, and reputational.

HIPAA Fines and Penalties

HIPAA violations are penalized through a tiered system, with fines increasing based on the organization's level of responsibility. These penalties can range from relatively small amounts to sums that threaten the survival of a business.

| Violation Tier | Per Violation Fine | Annual Maximum | Description |
|---|---|---|---|
| Tier 1 (Lack of Knowledge) | $141 to $71,162 | $35,581 | Organization was unaware of the violation |
| Tier 2 (Reasonable Cause) | $1,424 to $71,162 | $142,355 | Violation occurred despite reasonable efforts |
| Tier 3 (Willful Neglect – Corrected) | $14,232 to $71,162 | $355,808 | Conscious disregard, but corrected within 30 days |
| Tier 4 (Willful Neglect – Not Corrected) | $71,162 to $2,134,831 | $2,134,831 | Conscious disregard, not corrected [11] |

Criminal penalties can be even harsher, with fines reaching $250,000 and prison sentences of up to 10 years for intentional violations [12]. State attorneys general can also impose fines of up to $25,000 per violation category per year [11].

A notable example is CardioNet, which was fined $2.5 million in 2017 for failing to conduct a thorough risk assessment. The Office for Civil Rights (OCR) has made it clear that while it prefers non-punitive resolutions, serious or prolonged violations can lead to significant financial penalties:

"OCR prefers to resolve HIPAA violations using non-punitive measures... However, if the violations are serious, have been allowed to persist for a long time, or if there are multiple areas of noncompliance, financial penalties may be appropriate." [11]

AI-related violations often fall under the higher penalty tiers due to the sheer volume of patient data these systems handle. A single error can compromise vast numbers of records, leading to both hefty fines and long-term damage to operational effectiveness and public trust.
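To make the tiered structure concrete, here is a rough sketch of how the per-violation ranges and annual maximums from the penalty table interact as the number of violations grows. The figures are copied from that table; the `PenaltyTier` class and `exposure_range` function are hypothetical names, and the calculation assumes every violation falls in the same tier and the same calendar year. Actual penalties depend on OCR's case-by-case judgment, so treat this as arithmetic, not a prediction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PenaltyTier:
    name: str
    min_per_violation: int
    max_per_violation: int
    annual_cap: int


# Dollar figures copied from the penalty table in this article [11].
TIERS = {
    1: PenaltyTier("Lack of Knowledge", 141, 71_162, 35_581),
    2: PenaltyTier("Reasonable Cause", 1_424, 71_162, 142_355),
    3: PenaltyTier("Willful Neglect, Corrected", 14_232, 71_162, 355_808),
    4: PenaltyTier("Willful Neglect, Not Corrected", 71_162, 2_134_831, 2_134_831),
}


def exposure_range(tier: int, violations: int) -> tuple[int, int]:
    """Return (low, high) fines for one year, each limited by the tier's annual maximum.

    Simplifying assumption: all violations are identical and in the same tier.
    """
    if violations < 0:
        raise ValueError("violations must be non-negative")
    t = TIERS[tier]
    low = min(t.min_per_violation * violations, t.annual_cap)
    high = min(t.max_per_violation * violations, t.annual_cap)
    return low, high
```

Under these assumptions, `exposure_range(2, 500)` hits the Tier 2 annual maximum at both ends of the range, which illustrates why a single AI error touching many records quickly pushes an organization to the cap for its tier.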

Reputation Damage and Loss of Patient Trust

Beyond the financial penalties, breaches can severely damage an organization's reputation. Patient trust is a cornerstone of healthcare, and when that trust is broken, the consequences can be devastating.

In recent years, patients have grown increasingly skeptical of healthcare providers' ability to protect their data [14]. This skepticism isn’t unfounded - 2022 alone saw over 590 healthcare organizations report breaches affecting approximately 48 million individuals [14]. Each new incident reinforces public concerns, often prompting patients to reconsider their choice of healthcare providers.

The financial impact of this lost trust is significant. Healthcare data is highly valuable on illegal markets, with protected health information (PHI) fetching between $10 and $1,000 per record - far more than credit card data [14]. Breaches also weigh on Net Promoter Scores (NPS) for healthcare providers. While a "good" NPS is around 50, health insurers often score below 30, and those with compliance issues typically fare even worse [14].

The fallout doesn’t stop there. Relationships with partners, insurers, and vendors can become strained as they reevaluate their connections with organizations known for compliance failures [13]. Internally, employee morale often takes a hit, leading to higher turnover rates and increased costs for recruitment and training [13].

Rebuilding a damaged reputation is no small feat. It demands significant investments in public relations, enhanced training programs, and stronger data protection measures. These efforts can take years and often cost more than the original fines. The financial and reputational risks underscore the urgency of implementing robust AI governance in healthcare.

Risk Management Strategies for AI-Driven HIPAA Compliance

Healthcare organizations need well-structured strategies to prevent AI-related HIPAA violations. A solid framework starts with an effective AI governance system that incorporates technical, administrative, and monitoring practices.

Setting Up AI Governance and Oversight

Creating multidisciplinary AI committees is a critical first step. These committees should include a mix of professionals - doctors, IT specialists, legal advisors, and organizational leaders [16]. Begin by conducting a thorough inventory of all AI tools in use. Document each tool's purpose, how it accesses electronic protected health information (ePHI), its usage patterns, and its data storage practices [15]. When working with third-party AI vendors, ensure that Business Associate Agreements (BAAs) are in place. These agreements must explicitly prohibit vendors from using patient data for training purposes unless patients have provided clear authorization [3].
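The inventory described above can start as something very simple. The sketch below is one possible shape for an inventory record and a gap check, assuming the fields named in the paragraph (purpose, ePHI access, storage, BAA status). The class name, field names, and example tools are all hypothetical, not part of any standard or product.

```python
from dataclasses import dataclass


@dataclass
class AIToolRecord:
    name: str
    purpose: str
    accesses_ephi: bool
    data_storage: str
    third_party_vendor: bool
    baa_signed: bool = False
    baa_prohibits_training_use: bool = False


def governance_gaps(tool: AIToolRecord) -> list[str]:
    """List BAA-related gaps for a third-party tool that touches ePHI."""
    gaps = []
    if tool.accesses_ephi and tool.third_party_vendor:
        if not tool.baa_signed:
            gaps.append("no BAA on file")
        elif not tool.baa_prohibits_training_use:
            gaps.append("BAA does not prohibit training on patient data")
    return gaps


# Hypothetical inventory entries for illustration only.
inventory = [
    AIToolRecord("triage-chatbot", "patient intake Q&A", True, "vendor cloud", True),
    AIToolRecord("scribe-assistant", "visit note drafting", True, "vendor cloud", True,
                 baa_signed=True, baa_prohibits_training_use=True),
    AIToolRecord("bed-forecast", "capacity planning", False, "on-premises", False),
]

flagged = {t.name: governance_gaps(t) for t in inventory if governance_gaps(t)}
```

Here only `triage-chatbot` is flagged, because it is a vendor tool with ePHI access and no BAA. A real inventory would also track usage patterns and review dates, which are left out to keep the sketch short.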

Additionally, organizations should evaluate their current AI governance programs. This includes reviewing risk analysis and management procedures to address AI-specific challenges, such as algorithmic bias. The goal is to go beyond generic HIPAA compliance and focus on risks unique to AI systems [15].

Technical and Administrative Security Controls

Data security is a cornerstone of HIPAA compliance. Encrypting protected health information (PHI) both at rest and in transit - using standards like AES-256 - is essential. Combine this with role-based access controls and multifactor authentication to limit data exposure. Notably, organizations that implemented comprehensive encryption reported a 98% success rate in recovering from ransomware attacks in 2024 [17].
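As an illustration of the access-control side of these safeguards (encryption is not shown here), the following is a minimal deny-by-default sketch that combines a role check with a multifactor authentication gate. The role names, action names, and the `is_allowed` function are assumptions made up for this example, not a prescribed HIPAA role model.

```python
# Hypothetical role-to-permission map; a real one would come from policy.
ROLE_PERMISSIONS = {
    "physician": {"read_phi", "write_phi"},
    "billing": {"read_billing"},
    "ml_engineer": {"read_deidentified"},
}


def is_allowed(role: str, action: str, mfa_verified: bool) -> bool:
    """Deny by default: unknown roles and sessions without MFA get no access."""
    if not mfa_verified:
        return False
    return action in ROLE_PERMISSIONS.get(role, set())
```

With this layout, an engineer training a model can read de-identified data but is refused `read_phi`, and even a physician is refused until MFA has been verified.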

Keep detailed audit logs to facilitate quick breach detection and ensure compliance reporting [17]. To minimize risks, de-identify PHI in AI inputs and validate AI outputs rigorously. Regular, role-specific training on HIPAA rules and secure AI usage is equally important. While AI adoption by physicians nearly doubled in 2024, only 45% of healthcare workers currently receive regular cybersecurity training [3][18]. These measures not only enhance security but also reduce the likelihood of unauthorized access and data breaches.
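One way to picture "de-identify PHI in AI inputs" is a redaction pass that runs before any text reaches an AI tool. The sketch below uses a few regular expressions for U.S.-style Social Security numbers, phone numbers, and email addresses. The patterns and the `redact` function are illustrative assumptions. Pattern matching of this kind misses names, addresses, and many other identifiers, so on its own it would not satisfy HIPAA's de-identification standards.

```python
import re

# Illustrative patterns only; they cover a small subset of identifiers.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matched identifiers with labels and report how many were found."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts
```

The returned counts can be written to the audit log, so reviewers can see how often identifiers are being caught without the log itself storing the PHI.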

Ongoing Monitoring and Incident Response

Continuous monitoring significantly reduces the time it takes to detect breaches and limits the potential damage [18]. A strong incident response plan tailored to AI risks is essential. This plan should cover all six phases - preparation, identification, containment, eradication, recovery, and lessons learned. It must also address scenarios that could compromise patient data. Assigning a dedicated incident response team with clear roles across IT, privacy, clinical, and administrative departments is critical. Organizations with structured response plans tend to incur 58% lower costs per breach [18].

"It's no longer a question of if a healthcare organization will be targeted, it's a question of when they will be targeted."

- Tapan Mehta, Healthcare and Pharma Life Sciences Executive, Strategy and GTM [20]

Dynamic monitoring tools, like SIEM, SOAR, EDR/XDR, and UEBA, offer real-time visibility, which is indispensable for effective AI governance. These tools are particularly valuable given that human error accounts for about 68% of security breaches [18]. Scheduling cybersecurity training every three months can cut security issues by 60% [18]. Furthermore, having a communication plan ensures swift notification to patients, staff, and regulators if a breach occurs - critical for containing HIPAA violations [18].
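Commercial monitoring tools are far more sophisticated than anything that fits in a few lines, but the underlying idea of behavior-based alerting can be sketched simply. The example below assumes audit events are dictionaries with a `user` key and flags accounts whose access count is far above the median. The event shape, the `flag_unusual_access` name, and the default multiplier of 5 are all assumptions chosen for illustration.

```python
from collections import Counter
from statistics import median


def flag_unusual_access(events: list[dict], multiplier: float = 5.0) -> list[str]:
    """Return users whose PHI access count exceeds multiplier x the median count."""
    counts = Counter(e["user"] for e in events)
    if not counts:
        return []
    baseline = median(counts.values())
    return sorted(user for user, n in counts.items() if n > multiplier * baseline)
```

For instance, if two clinicians each open a handful of records while an AI export service account opens forty, only the service account is flagged for review.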

The evolving threat landscape demands that healthcare organizations stay ahead in this "arms race" of AI-powered security. As Albert Caballero, Field CISO at SentinelOne, puts it:

"It's not optional. It is an arms race, and we are saying that AI needs to be implemented in every aspect of your security program."

- Albert Caballero, Field CISO, SentinelOne [19]

Comprehensive AI governance is no longer just a good idea - it’s a necessity for safeguarding patient data while leveraging AI's immense potential in healthcare.

Censinet's AI Risk Management Solutions for Healthcare

Addressing the challenges of AI in healthcare requires specialized risk management tools. Censinet steps up to tackle AI-related HIPAA risks with a suite of tailored solutions. By focusing on automated oversight, streamlined vendor management, and real-time risk tracking, Censinet provides healthcare organizations with the tools they need to manage AI governance effectively.

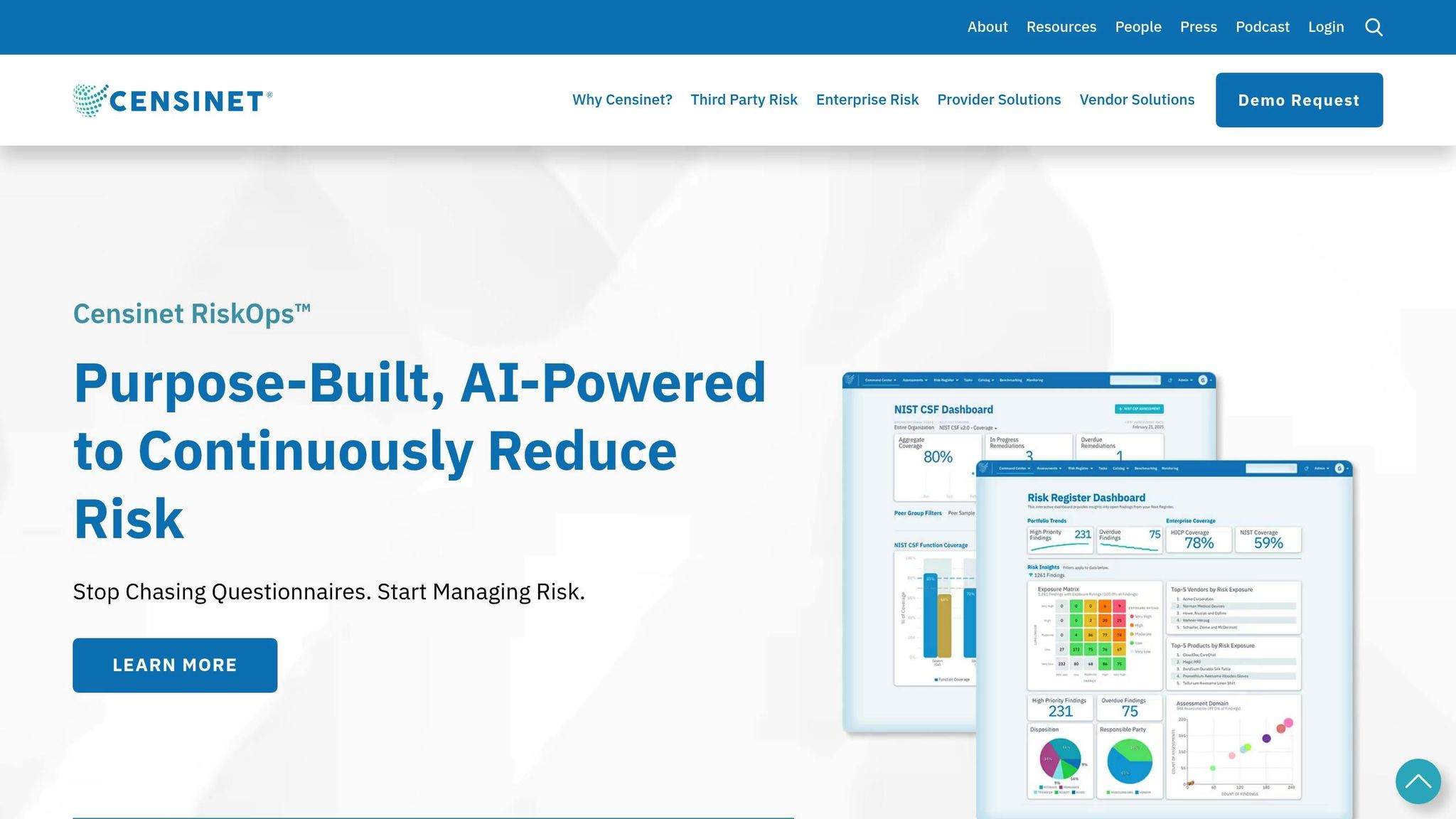

Censinet RiskOps™: AI and Cybersecurity Risk Management Platform

Censinet RiskOps™ acts as a central hub for managing AI-related risks in healthcare. Its Digital Risk Catalog™ contains data on over 50,000 vendors and products, with more than 100 provider and payer facilities actively using the Censinet Risk Network [21][22]. This extensive resource allows healthcare organizations to quickly evaluate AI vendors and tools for HIPAA compliance, while also automating the tracking of Business Associate Agreements (BAAs) for vendors handling Protected Health Information (PHI) [1].

The platform simplifies complex compliance tasks for Privacy Officers. By automating data collection and using standardized assessment criteria, RiskOps™ eliminates the need for manual reviews of each AI tool's access to electronic protected health information (ePHI). It ensures AI systems only access PHI as permitted by HIPAA regulations [1].

RiskOps™ also addresses the lack of transparency often found in "black box" AI models. With automated audit logs and detailed documentation, Privacy Officers can validate how PHI is used, even when the AI algorithms themselves are opaque [1].

Censinet AI™: Automated AI Governance

Censinet AI™ takes compliance automation even further, integrating human-guided automation into critical assessment processes. Vendors can complete security questionnaires in seconds, and the system automatically summarizes evidence and documentation, cutting down evaluation time without compromising thoroughness.

The platform also tracks product integrations and fourth-party risks automatically, giving healthcare organizations a clear picture of their AI-related data flows and compliance risks. Advanced routing features ensure that key findings and risk mitigation tasks are directed to the appropriate stakeholders, such as members of the AI governance committee. This ensures timely action and consistent oversight.

In February 2025, Renown Health, under the leadership of CISO Chuck Podesta, partnered with Censinet to automate IEEE UL 2933 compliance checks for new AI vendors using Censinet TPRM AI™. This collaboration streamlined vendor evaluations while maintaining high standards for patient safety and data security.

A centralized AI risk dashboard provides real-time visibility into policies, risks, and tasks. This unified system supports comprehensive risk management while maintaining the human oversight necessary for safe and scalable AI implementations.

Censinet vs. Manual Risk Management Methods

The advantages of automated AI risk management become clear when compared to manual methods. Manual processes often lead to inefficiencies and errors, particularly when managing multiple AI systems and vendors.

| Parameter | Manual Process | Automated Process (Censinet) |
|---|---|---|
| Collect Data | Labor-intensive gathering and verification | Automated data collection and aggregation |
| Organize Data | Stored in scattered systems | Centralized platform consolidates data |
| Indicate Risk Levels | Prone to human error | Consistent automated risk scoring |
| Visualize Risk Data | Limited options | Intuitive graphical representations |
| Display Relations | Requires manual analysis | Automated relationship identification |
| Build Risk Scenarios | Relies on individual interpretation | Automated scenario modeling |
| Add Reference Data | Risk of oversight | Seamless automated integration |
| Generate Reports | Time-consuming manual compilation | Real-time automated reporting |
| Follow-ups | Manual reminders prone to delays | Automated notifications |
| Audit Trail | Limited tracking | Comprehensive automated logs with timestamps |

Manual methods also struggle to consistently meet HIPAA's de-identification requirements, such as those under the Safe Harbor or Expert Determination standards [1]. Automated systems, on the other hand, apply these standards consistently across all AI implementations, reducing errors and ensuring compliance.
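To show what "applying the standard consistently" can look like in practice, here is a small sketch of three Safe Harbor generalizations: truncating ZIP codes to their first three digits (or `000` for sparsely populated prefixes), grouping ages over 89 into a single category, and reducing dates to the year. The function names and record fields are hypothetical, and the low-population prefix set passed in is a placeholder rather than the real Census-derived list. Safe Harbor covers 18 identifier categories, so this handles only a fraction of the rule.

```python
def generalize_zip(zip_code: str, low_population_prefixes: frozenset[str]) -> str:
    """Keep the first three ZIP digits, or use 000 for low-population prefixes."""
    prefix = zip_code[:3]
    return "000" if prefix in low_population_prefixes else prefix


def generalize_age(age: int) -> str:
    """Group all ages over 89 into one '90+' category."""
    return "90+" if age > 89 else str(age)


def generalize_date(iso_date: str) -> str:
    """Reduce an ISO date (YYYY-MM-DD) to its year."""
    return iso_date[:4]


def generalize_record(record: dict, low_population_prefixes: frozenset[str]) -> dict:
    """Build a new record with only generalized fields; direct identifiers are not copied."""
    return {
        "zip3": generalize_zip(record["zip"], low_population_prefixes),
        "age": generalize_age(record["age"]),
        "admit_year": generalize_date(record["admit_date"]),
    }
```

Building a new dictionary, rather than editing the original in place, means fields such as names are dropped unless someone deliberately adds them to the output.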

Additionally, automated platforms like Censinet help address issues like bias and health equity in AI systems. With its network-driven model, Censinet accelerates the identification and correction of algorithmic bias far more effectively than manual reviews [22].

For healthcare organizations juggling multiple AI tools that process PHI, automated platforms provide the consistency and scalability required to maintain HIPAA compliance while unlocking AI's potential to improve patient care. These capabilities highlight the critical role automated AI risk management plays in modern healthcare.

Conclusion: Managing AI Innovation Within HIPAA Requirements

Healthcare organizations are navigating a critical juncture where AI advancements intersect with stringent regulatory demands. The widespread adoption of AI in healthcare is undeniable - 66% of physicians now utilize AI in their practices, a significant leap from 38% in 2023 [23]. While AI holds immense promise for improving patient care, it also emerges as a top technological challenge for the industry [23].

The question isn’t whether to embrace AI but how to do so responsibly. As Cobun Zweifel-Keegan, IAPP Managing Director in Washington, D.C., points out:

"AI is just like any other technology -- the rules for notice, consent and responsible uses of data still apply. HIPAA-covered entities should be laser-focused on applying robust governance controls, whether data will be used to train AI models, ingested into existing AI systems or used in the delivery of healthcare services." [23]

This insight underscores the need for healthcare organizations to craft thoughtful, well-rounded AI strategies. Collaboration between healthcare providers, technology developers, and regulators is essential to ensure innovation aligns with patient privacy [5]. Organizations must establish clear AI usage policies, enforce governance protocols, and structure contracts with third-party vendors to minimize risks [23]. Integrating secure-by-design principles, zero-trust security frameworks, and continuous monitoring systems is key to staying compliant without hindering progress.

Selecting the right vendors is a critical first step in safeguarding against HIPAA violations [3]. Automated risk management platforms, such as Censinet RiskOps™, streamline the evaluation process, allowing organizations to efficiently assess multiple vendors for compliance.

John B. Sapp Jr., CISO at Texas Mutual Insurance Company, encapsulates the objective:

"We want to encourage the adoption of AI, but it must be secure and responsible." [23]

Healthcare organizations that prioritize robust AI governance, leverage automated risk management tools, and invest in ongoing staff education will be best equipped to unlock AI's potential. By mastering both innovation and compliance, they can ensure the future of healthcare remains both groundbreaking and secure.

FAQs

How can healthcare organizations use AI effectively while avoiding HIPAA violations?

Healthcare organizations can take full advantage of AI while staying HIPAA-compliant by focusing on proactive compliance strategies. This means putting strong security measures in place, such as encryption, strict access controls, and real-time monitoring, to ensure patient data remains protected. Conducting regular AI-specific risk assessments and thoroughly auditing AI vendors can help identify and address potential vulnerabilities before they become issues.

It's equally important to provide ongoing HIPAA training for staff, ensuring everyone understands their role in maintaining compliance. Establishing clear governance frameworks for AI systems can also help keep processes aligned with regulatory standards. By emphasizing transparency, accountability, and strong safeguards, healthcare providers can confidently integrate AI into their operations without risking patient privacy or violating compliance rules.

What are the risks for healthcare organizations if AI causes a HIPAA violation?

AI-driven HIPAA violations can lead to hefty financial penalties, with fines reaching roughly $2.1 million per violation, which is also the annual maximum for the most serious tier. But the costs don't stop there. Organizations may also have to deal with legal fees, operational setbacks, and a loss of patient trust - a blow that can tarnish their reputation and shrink revenue streams.

Beyond the financial hit, healthcare providers risk long-term damage to their credibility, which can take years to repair. To steer clear of these repercussions, it's essential to establish strong AI governance, make compliance a top priority, and consistently evaluate AI systems for weaknesses that could pose risks.

How can healthcare organizations ensure AI doesn’t accidentally re-identify de-identified patient data?

To reduce the chances of AI unintentionally re-identifying de-identified patient data, healthcare organizations need to prioritize strong anonymization techniques like data masking, encryption, and tokenization. These approaches help conceal sensitive details while still allowing the data to be useful.

Equally important is keeping de-identification methods up-to-date to address advancements in AI and new security threats. Regular monitoring for re-identification risks and conducting routine audits are essential steps to ensure compliance with HIPAA standards and other privacy laws. By combining advanced technical measures with consistent oversight, healthcare providers can better safeguard patient privacy while responsibly using AI.
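One common way to monitor re-identification risk, though not one named in this article or required by HIPAA, is a k-anonymity check: count how many records share each combination of quasi-identifiers and look for combinations that are rare. The sketch below assumes records are dictionaries. The field names and the choice of `k` are illustrative.

```python
from collections import Counter


def group_sizes(records: list[dict], quasi_identifiers: list[str]) -> Counter:
    """Count records per combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)


def smallest_group(records: list[dict], quasi_identifiers: list[str]) -> int:
    """Return the size of the rarest combination (0 for an empty dataset)."""
    sizes = group_sizes(records, quasi_identifiers)
    return min(sizes.values()) if sizes else 0


def risky_groups(records: list[dict], quasi_identifiers: list[str], k: int) -> list[tuple]:
    """Return combinations shared by fewer than k records."""
    sizes = group_sizes(records, quasi_identifiers)
    return sorted(combo for combo, size in sizes.items() if size < k)
```

A combination that appears only once is the kind of record an AI system could link back to a person by joining it with outside data, so those groups are candidates for further generalization or suppression before the dataset is shared.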

Key Points:

What happens when AI gets it wrong in healthcare, and why is it a concern?

- Definition: AI errors in healthcare occur when algorithms produce incorrect or biased results, leading to misdiagnoses, data breaches, or operational disruptions.

- Impact: Errors can jeopardize patient safety, violate HIPAA regulations, and erode trust in healthcare organizations. For example, an AI misdiagnosis could delay critical treatments, while a data breach caused by an AI vulnerability could expose sensitive patient information.

How do AI errors impact HIPAA compliance?

- Data Breaches: AI systems processing PHI are vulnerable to cyberattacks, potentially exposing sensitive data and violating HIPAA.

- Algorithmic Bias: Biased AI models can lead to discriminatory practices and to uses or disclosures of PHI that HIPAA does not permit.

- Transparency Issues: Many AI systems operate as “black boxes,” making it difficult to ensure compliance with HIPAA’s accountability requirements.

- Inaccurate Data Handling: Errors in data processing or storage can result in unauthorized access or loss of patient information.

What are the risks of relying on AI in healthcare?

- Algorithmic Bias: AI models trained on biased datasets can produce discriminatory outcomes, affecting patient care.

- Data Inaccuracies: Errors in data input or processing can lead to incorrect diagnoses or treatments.

- Lack of Transparency: Black-box algorithms make it difficult to understand how decisions are made, reducing accountability.

- Cybersecurity Vulnerabilities: AI systems are targets for cyberattacks, including ransomware and data poisoning.

- Regulatory Gaps: Current regulations like HIPAA don’t fully address the complexities of AI, creating compliance challenges.

How can healthcare organizations mitigate AI risks?

- Adopt AI Governance Frameworks: Use frameworks like NIST’s AI RMF to guide risk assessments and ensure ethical AI use.

- Conduct Bias Audits: Regularly evaluate AI models for bias and ensure diverse, representative training datasets.

- Integrate AI into Risk Management: Align AI systems with broader risk management strategies to address vulnerabilities and compliance gaps.

- Enhance Transparency: Use explainable AI (XAI) tools to improve accountability and identify algorithmic errors.

- Monitor Regulatory Changes: Stay updated on evolving regulations like the EU AI Act and FDA guidelines for AI in healthcare.

What role does transparency play in ethical AI use?

- Accountability: Transparency ensures that organizations can identify and address algorithmic errors, improving accountability.

- Trust Building: Open communication about AI processes builds trust among patients, providers, and regulators.

- Error Identification: Transparent systems make it easier to detect and correct errors, reducing risks and improving outcomes.

What are the benefits of addressing AI risks in healthcare?

- Improved Patient Safety: Proactively addressing risks reduces the likelihood of incidents that could harm patients.

- Enhanced Compliance: Aligning AI systems with HIPAA and other regulations minimizes the risk of penalties and reputational damage.

- Reduced Operational Risks: Comprehensive risk management ensures continuity of care during disruptions.

- Stronger Stakeholder Trust: Demonstrating a commitment to ethical AI use builds confidence among patients and regulators.

- Future-Proofing: Preparing for AI-specific risks ensures alignment with future regulatory requirements and technological advancements.