How to Assess Human Factors in Device Cybersecurity

Post Summary

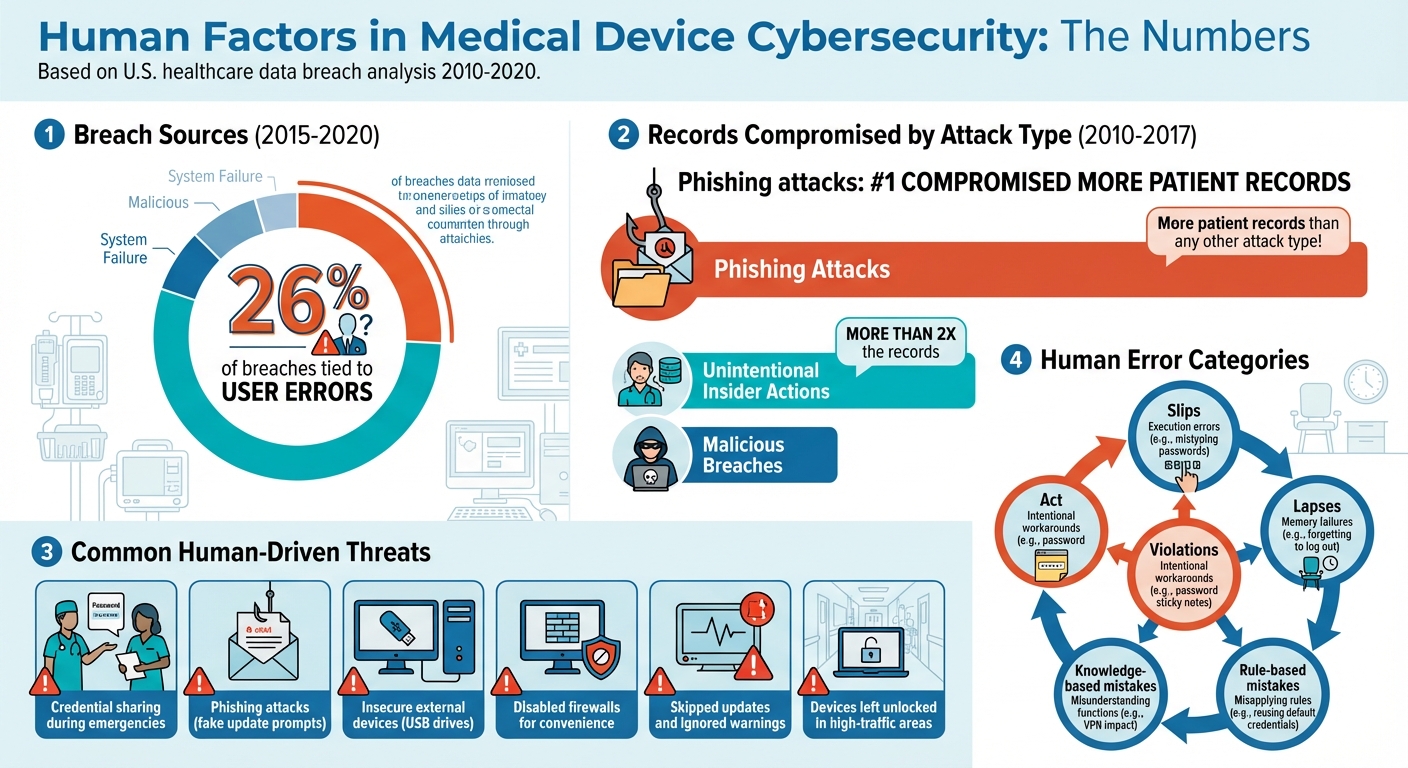

Cybersecurity risks in medical devices often stem from human behavior. Clinicians skipping password prompts, falling for phishing scams, or sharing credentials during emergencies can lead to serious vulnerabilities. Between 2015 and 2020, 26% of breaches were tied to user errors, with phishing attacks compromising the most patient records.

To address this, the FDA now requires organizations to integrate Human Factors Engineering (HFE) into their cybersecurity strategies. This involves:

- Mapping how users interact with devices and identifying risky behaviors.

- Understanding environmental and organizational conditions, like time pressures or informal practices.

- Categorizing errors (e.g., slips, lapses, violations) to trace their root causes.

- Prioritizing solutions that align with user workflows, such as simplifying logins or improving interfaces.

- Continuously monitoring metrics like training pass rates or security violations to refine strategies.

Figure: Human Factor Cybersecurity Risks in Healthcare: Key Statistics and Breach Data 2010-2020

Map Human Interactions and Device Use Contexts

To effectively address cybersecurity vulnerabilities, it’s crucial to map out how people interact with devices and the contexts in which these interactions occur. Understanding these dynamics helps uncover human factor-driven risks that could compromise security.

Identify Key Users and Workflows

Start by identifying every type of user who interacts with the device throughout its lifecycle. This includes obvious groups like clinicians (nurses, doctors, therapists), biomedical and clinical engineering teams, IT and security staff, administrators, patients, caregivers at home, and vendor field engineers with remote access. Don’t overlook less visible users, such as float nurses, traveling staff, or third-party technicians who might bypass standard protocols.

Next, map out the critical workflows these users follow. Pay close attention to tasks that directly impact security, such as logging in and out, performing software or firmware updates, connecting devices to networks, managing remote access, and handling passwords or authentication. Real-world observations often reveal that staff skip security steps when controls are inconvenient - for example, staying logged in between patients or using generic logins to save time.

Capture Environmental and Organizational Conditions

The environment where a device is used significantly influences cybersecurity practices. Document factors like whether devices are shared among staff, the unit’s layout, levels of noise and interruptions, and whether devices are mobile or stationary. For instance, a shared workstation in a hectic emergency room poses entirely different challenges compared to a dedicated device in a quieter outpatient setting.

Organizational conditions also play a major role. Take note of time pressures during busy periods, staffing dynamics such as staff-to-patient ratios and shift patterns, and how often interruptions occur during critical tasks. Informal workplace norms, like password sharing or prioritizing speed over strict adherence to security protocols, can introduce vulnerabilities. Similarly, the frequency and quality of cybersecurity training often influence whether staff stick to - or sidestep - security measures.

Document Findings for Risk Assessment Integration

Compile your findings into a matrix that links user roles, tasks, contexts, potential errors, and possible mitigations. This structured approach aligns with methodologies like Use-Related Risk Analysis (URRA), use-focused Failure Mode and Effects Analysis (FMEA), and cybersecurity risk assessment practices outlined in FDA guidance and ISO 14971. Include supporting materials - like workflow diagrams, screenshots, or training documents - to ensure each identified risk can be traced back to real-world observations. Use version control to keep these maps updated as device configurations, software, or policies evolve.
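A minimal sketch of such a matrix follows, assuming it is kept as plain structured data so it can sit under version control next to other design files. The field names, the example rows, and the `untraceable` helper are hypothetical. The check only flags rows that do not yet point to any real-world observation.

```python
from dataclasses import dataclass, field


@dataclass
class MatrixRow:
    """One row of a hypothetical human-factors risk matrix."""
    role: str
    task: str
    context: str
    potential_error: str
    mitigation: str
    # References to supporting material: observation notes, screenshots, diagrams
    evidence: list[str] = field(default_factory=list)

    def is_traceable(self) -> bool:
        # Traceable only if at least one piece of supporting evidence is cited
        return len(self.evidence) > 0


def untraceable(rows: list[MatrixRow]) -> list[MatrixRow]:
    """Return the rows that still lack supporting evidence."""
    return [row for row in rows if not row.is_traceable()]


rows = [
    MatrixRow(
        role="Float nurse",
        task="Log out between patients",
        context="Shared ED workstation, frequent interruptions",
        potential_error="Lapse: session left open",
        mitigation="Badge-tap re-authentication with auto-lock",
        evidence=["ED observation notes, night shift"],
    ),
    MatrixRow(
        role="Vendor field engineer",
        task="Remote firmware update",
        context="After-hours remote access",
        potential_error="Violation: shared vendor account",
        mitigation="Named accounts with step-up authentication",
    ),
]

for row in untraceable(rows):
    print(f"Needs evidence: {row.role} / {row.task}")
```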

This detailed mapping process sets the stage for tackling human-driven cybersecurity threats in the next steps.

Identify Human Factor-Driven Threats and Vulnerabilities

Cybersecurity threats often originate from human behavior, making them a critical area to address. A study analyzing U.S. healthcare data breaches from 2010–2017 revealed that most health records were compromised due to human-related security lapses, rather than advanced external hacking techniques [3]. These human-driven vulnerabilities are often more prevalent than technical flaws and demand focused attention.

Recognize Common Human-Centered Threats

By examining user interactions, it's possible to uncover threats linked to human behavior. One common issue is credential sharing, where clinicians share login information to save time during emergencies. While this may seem practical, it opens the door to unauthorized access and diminishes accountability. Another major risk is phishing, where users are tricked into clicking malicious links disguised as legitimate content, such as fake update prompts. Alarmingly, phishing is responsible for compromising more patient records than any other type of attack [3].

Other risks stem from insecure use of external devices, such as plugging unverified USB drives into critical systems like infusion pumps, potentially introducing malware. Negligence or carelessness also plays a significant role - examples include disabling firewalls on connected monitors for convenience or leaving devices unlocked in high-traffic areas. In fact, unintentional insider actions have been linked to over twice as many compromised records as malicious breaches [3]. Additionally, users may skip updates, ignore warnings, or bypass security protocols due to alert fatigue or poor system usability, leaving systems even more exposed.

Classify Threats Using Human Error Taxonomies

Human error taxonomies provide a structured way to categorize threats and trace their root causes. For example:

- Slips: Execution errors, like mistyping passwords on medical devices due to poorly designed keyboards.

- Lapses: Memory failures, such as forgetting to log out of a shared workstation after a hectic shift.

- Rule-based mistakes: Misapplying a rule, like reusing default credentials under the assumption that the system is already secure.

- Knowledge-based mistakes: Misunderstanding system functions, such as not realizing how a VPN might impact device connectivity.

- Violations: Intentional workarounds, like writing passwords on sticky notes and attaching them to devices.

Mapping these errors to their causes - whether workload, interface design, time pressure, or insufficient training - can guide effective solutions. For instance, redesigning a confusing interface, automating repetitive tasks, or providing better user feedback can reduce errors. Validating these classifications against real-world incident data ensures that the analysis reflects actual risks.
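The taxonomy can be applied consistently with a small decision tree. The sketch below is a simplification: the three yes/no questions and the error-to-lever pairings in `MITIGATION_LEVER` are illustrative assumptions, not a published standard, and real classification still needs an analyst's judgment.

```python
from enum import Enum


class ErrorType(Enum):
    SLIP = "slip"                                  # right plan, execution failure
    LAPSE = "lapse"                                # right plan, memory failure
    RULE_MISTAKE = "rule-based mistake"            # wrong plan: misapplied rule
    KNOWLEDGE_MISTAKE = "knowledge-based mistake"  # wrong plan: knowledge gap
    VIOLATION = "violation"                        # intentional workaround


# Illustrative pairing of each error type with a design lever (an assumption)
MITIGATION_LEVER = {
    ErrorType.SLIP: "interface design: larger targets, input feedback",
    ErrorType.LAPSE: "automation: auto-logout, reminders",
    ErrorType.RULE_MISTAKE: "secure defaults: force credential change at setup",
    ErrorType.KNOWLEDGE_MISTAKE: "just-in-time guidance and training",
    ErrorType.VIOLATION: "workflow redesign that removes the incentive",
}


def classify(intentional: bool, plan_was_correct: bool,
             forgot_step: bool, misapplied_rule: bool) -> ErrorType:
    """Simplified decision tree for the slip/lapse/mistake/violation taxonomy."""
    if intentional:
        return ErrorType.VIOLATION
    if plan_was_correct:
        # Right intention, wrong execution: memory failure or action failure
        return ErrorType.LAPSE if forgot_step else ErrorType.SLIP
    # Wrong plan: a misapplied rule or a gap in understanding
    return ErrorType.RULE_MISTAKE if misapplied_rule else ErrorType.KNOWLEDGE_MISTAKE


# Forgetting to log out of a shared workstation after a hectic shift
kind = classify(intentional=False, plan_was_correct=True,
                forgot_step=True, misapplied_rule=False)
print(kind.value, "->", MITIGATION_LEVER[kind])
```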

Use Incident Data to Identify Patterns

Incident data is invaluable for uncovering recurring human-factor issues that might not be obvious through observation alone. Review breach reports for descriptions like "employee clicked phishing email" or "technician disabled antivirus for performance." Security logs can reveal patterns such as repeated failed login attempts, unusual access times, or logins from unexpected locations that suggest misuse of credentials. Help desk tickets and user complaints, such as frequent "forgotten logout" reports, can pinpoint vulnerabilities, while clusters of phishing incidents during shift changes may highlight timing-related risks.
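As a minimal sketch of this kind of log review, the functions below flag three of the patterns mentioned: repeated failed logins, off-hours logins, and logins from unexpected locations. The record layout, user names, location codes, threshold, and 22:00-06:00 window are all assumptions. In practice the off-hours window should follow each user's actual shift, or night staff will be flagged routinely.

```python
from collections import Counter
from datetime import datetime

# Hypothetical log records: (timestamp, user, event, location)
LOG = [
    (datetime(2024, 5, 1, 6, 58), "rn_amy", "login_failed", "ICU-3"),
    (datetime(2024, 5, 1, 6, 59), "rn_amy", "login_failed", "ICU-3"),
    (datetime(2024, 5, 1, 7, 0), "rn_amy", "login_failed", "ICU-3"),
    (datetime(2024, 5, 1, 7, 1), "rn_amy", "login_ok", "ICU-3"),
    (datetime(2024, 5, 1, 2, 15), "biomed_raj", "login_ok", "ED-1"),
    (datetime(2024, 5, 1, 10, 30), "rn_amy", "login_ok", "OR-2"),
]
# Locations each user normally works from (assumed reference data)
USUAL_LOCATIONS = {"rn_amy": {"ICU-3"}, "biomed_raj": {"ED-1", "ICU-3"}}


def repeated_failures(log, threshold=3):
    """Users with at least `threshold` failed logins in the log window."""
    counts = Counter(user for _, user, event, _ in log if event == "login_failed")
    return {user for user, n in counts.items() if n >= threshold}


def off_hours_logins(log, start_hour=22, end_hour=6):
    """Successful logins inside a window that wraps past midnight."""
    return [(user, ts) for ts, user, event, _ in log
            if event == "login_ok" and (ts.hour >= start_hour or ts.hour < end_hour)]


def unexpected_locations(log, usual):
    """Successful logins from a location outside the user's usual set."""
    return [(user, loc) for _, user, event, loc in log
            if event == "login_ok" and loc not in usual.get(user, set())]


print(repeated_failures(LOG))
print(off_hours_logins(LOG))
print(unexpected_locations(LOG, USUAL_LOCATIONS))
```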

Analyze Risk and Prioritize Controls Through a Human Factors Lens

After identifying threats tied to human factors, the next step is to weave these insights into your risk assessment framework. While traditional models often zero in on technical vulnerabilities, real-world human behavior can amplify risks in unexpected ways. By examining mapped user interactions, you can refine risk scoring models to better account for these dynamics. This sets the stage for selecting the right mitigation strategies.

Incorporate Human Factors into Risk Scoring Models

A well-rounded risk scoring approach evaluates both technical vulnerabilities and the probability of human error. The FDA's guidance on cybersecurity in medical devices emphasizes identifying use-related cybersecurity risks and assessing them through tools like Use-Related Risk Analysis (URRA) and Failure Mode and Effects Analysis (FMEA) [2][6]. For instance, consider an infusion pump with weak encryption. Its technical risk might seem low, but if nurses frequently bypass password prompts during busy shifts, treating that behavior as a 0.3 probability of human error and folding it into the likelihood of exploitation can significantly increase its overall risk score [2][6].
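Here is a minimal sketch of how a 0.3 human-error figure can raise a risk score rather than shrink it. It treats 0.3 as the probability that users bypass the control and combines it with the technical exploit likelihood as two independent paths (a "noisy-OR", which is an assumption here). The 0.10 technical likelihood and the 1-5 severity scale are illustrative values and do not come from the guidance.

```python
def combined_likelihood(p_technical: float, p_human_bypass: float) -> float:
    """Probability that at least one path to exploitation is open, assuming the
    technical flaw and the human bypass occur independently (a simplification)."""
    for p in (p_technical, p_human_bypass):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must be between 0 and 1")
    return 1 - (1 - p_technical) * (1 - p_human_bypass)


def risk_score(likelihood: float, severity: int) -> float:
    """Likelihood times severity, with severity on an assumed 1-5 scale."""
    return likelihood * severity


technical_only = risk_score(combined_likelihood(0.10, 0.0), severity=4)
with_human = risk_score(combined_likelihood(0.10, 0.30), severity=4)
print(f"technical only: {technical_only:.2f}, with human bypass: {with_human:.2f}")
# → technical only: 0.40, with human bypass: 1.48
```

The independence assumption is the weak point. If bypasses cluster in exactly the situations attackers target, the true combined likelihood could be higher.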

This methodology aligns with AAMI TIR57’s two-factor model: Threat Likelihood (based on attacker motivation and capability) multiplied by Likelihood of Harm (the chance that exploitation results in harm, heavily influenced by user behavior and situational factors like stress or emergencies) [5]. Adjust scoring scales to reflect conditions where human errors are more likely, such as during night shifts, high-stress situations, or when tasks are overly complex and time-sensitive. Then, use these adjusted scores to guide the selection of the most effective controls, balancing technical solutions with human-centered approaches.
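A minimal sketch of the two-factor product with a context adjustment follows. The context multipliers, the 0.6 and 0.3 inputs, and the low/medium/high cut-offs are illustrative assumptions and are not values taken from AAMI TIR57. Capping the adjusted likelihood at 1.0 keeps it a valid probability.

```python
# Assumed multipliers for conditions where errors become more likely
CONTEXT_FACTOR = {"day": 1.0, "night": 1.3, "high_stress": 1.5}


def harm_likelihood(base: float, context: str) -> float:
    """Adjust the likelihood of harm for context, capped at 1.0."""
    return min(1.0, base * CONTEXT_FACTOR[context])


def two_factor_score(threat_likelihood: float, base_harm: float, context: str) -> float:
    """Threat likelihood multiplied by context-adjusted likelihood of harm."""
    return threat_likelihood * harm_likelihood(base_harm, context)


def level(score: float) -> str:
    """Map a 0-1 score to a qualitative level (assumed cut-offs)."""
    if score <= 0.10:
        return "low"
    if score <= 0.25:
        return "medium"
    return "high"


for ctx in CONTEXT_FACTOR:
    score = two_factor_score(threat_likelihood=0.6, base_harm=0.3, context=ctx)
    print(f"{ctx}: {score:.2f} ({level(score)})")
```

With these inputs, the same device moves from "medium" during day shifts to "high" under high-stress conditions. That shift in level is the kind of result the adjusted scores are meant to surface when you select controls.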

Compare Technical and Human-Centered Mitigations

Once you’ve calculated elevated risk scores, it’s time to identify controls that address both technical vulnerabilities and human factors. Technical measures like encryption or multi-factor authentication reduce the attack surface but often rely on proper user compliance. For example, while encryption safeguards data, users might disable it for convenience, or key mismanagement could still occur. Research shows that unintentional human actions compromise more records than external attacks [3].

On the other hand, human-centered solutions - such as redesigning workflows, simplifying authentication processes, or creating secure-by-design user interfaces - tackle the root causes of error. The FDA advocates for inherent safety designs and user-friendly interfaces over relying solely on training or labeling [2]. For example, implementing single sign-on with automatic session locks tied to task completion can streamline workflows, easing the burden on clinicians who might otherwise struggle with complex security protocols during chaotic shifts.

To prioritize controls, use a matrix to evaluate their effectiveness, feasibility, and residual risk. For instance, encryption might cut technical vulnerability by 90% but still leave a 20–30% chance of human workarounds. In contrast, workflow redesigns - like context-aware access or task-aligned auto-lockouts - could reduce errors by 50% with lower residual risk, estimates that should be confirmed through URRA and human factors testing [2][3]. Documenting these trade-offs not only supports regulatory submissions but also strengthens internal governance, ensuring your strategy effectively addresses both the technical and human sides of cybersecurity risks.
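A minimal sketch of such a prioritization matrix is shown below. The weights, the 0-1 scales, and the per-control estimates are placeholders loosely based on the illustrative figures above. For example, the 0.3 residual for encryption reflects the upper end of the 20-30% workaround range. An organization would substitute values derived from its own URRA and testing. Because residual risk enters as `1 - residual`, lower residual risk raises a control's rank.

```python
from dataclasses import dataclass


@dataclass
class Control:
    name: str
    effectiveness: float  # estimated risk reduction, 0-1
    feasibility: float    # ease of deployment in clinical workflows, 0-1
    residual: float       # estimated residual risk after deployment, 0-1


# Assumed weights; adjust to the organization's priorities
WEIGHTS = {"effectiveness": 0.4, "feasibility": 0.3, "residual": 0.3}


def priority(c: Control) -> float:
    """Weighted score where lower residual risk increases priority."""
    return (WEIGHTS["effectiveness"] * c.effectiveness
            + WEIGHTS["feasibility"] * c.feasibility
            + WEIGHTS["residual"] * (1 - c.residual))


controls = [
    Control("Training refresher", effectiveness=0.2, feasibility=0.9, residual=0.6),
    Control("Encryption", effectiveness=0.9, feasibility=0.6, residual=0.3),
    Control("Workflow redesign", effectiveness=0.5, feasibility=0.8, residual=0.15),
]

for c in sorted(controls, key=priority, reverse=True):
    print(f"{c.name:20s} {priority(c):.3f}")
```

Encryption and workflow redesign address different parts of the risk. Read the ranking as a sequencing aid, not as a choice between them.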


Design and Implement Human-Centered Cybersecurity Mitigations

To implement a cybersecurity plan effectively, security measures must be seamlessly integrated into interfaces, workflows, and access controls. The goal? Make secure actions the easiest ones to perform, reducing the need for constant user intervention. This approach ensures that clinicians can focus on their work without compromising security [2].

Improve Usability and Secure-by-Design Features

Interfaces should guide users toward secure choices by default. Features like automatic session timeouts, pre-enabled encryption, and strong password requirements help establish a baseline of security. Scheduling updates during off-peak hours ensures systems remain protected without disrupting workflows.

Security prompts should be clear and actionable. For example, instead of a generic error message about a certificate issue, a prompt might state, "Connection is not secure - patient data may be exposed" [2]. Simple visual cues, such as color-coded warnings or icons, can immediately alert users to risks like outdated software or insecure networks.

Progressive disclosure is another effective strategy. It presents only the most critical information upfront, while allowing users to access more detailed context - such as update release notes or security specifics - if needed [2].

The FDA highlights user interface design as a key tool for addressing cybersecurity risks related to human behavior. Simplifying configurations by removing unused network services and ports can reduce complexity. Security steps should fit naturally into clinical workflows, like combining patient identity verification with device association on the same screen. Providing just-in-time guidance during unusual security events further supports secure operations [2]. These measures directly address vulnerabilities caused by human error.

Align Authentication with User Workflows

Secure interface design lays the groundwork, but refining authentication processes takes usability and security a step further. Quick re-authentication methods - such as badge taps, biometric scans, or session unlocking - allow users to step away and return without hassle. This reduces the temptation to share passwords or bypass security protocols.
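The sketch below shows a shared-device session that locks when idle and unlocks with a badge tap. It rests on several assumptions: the 120-second timeout, the badge IDs, and the `Session` class are hypothetical. Timestamps are passed in explicitly for testability, and a real device would read a monotonic clock. The session locks instead of logging out, so the clinician's work is preserved. Only the owner's badge can resume it, which keeps accountability intact without adding a password step.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Hypothetical shared-device session that locks when idle and unlocks by badge."""
    user_badge: str
    idle_timeout_s: float = 120.0  # assumed value; tune to observed workflows
    last_activity: float = 0.0
    locked: bool = False

    def check_idle(self, now: float) -> None:
        # Lock rather than log out, so the clinician's work context survives
        if not self.locked and now - self.last_activity >= self.idle_timeout_s:
            self.locked = True

    def activity(self, now: float) -> bool:
        """Record user input; returns False if the session is locked."""
        self.check_idle(now)
        if self.locked:
            return False
        self.last_activity = now
        return True

    def badge_tap(self, badge_id: str, now: float) -> bool:
        """Unlock only for the badge that owns the session."""
        if badge_id != self.user_badge:
            return False  # another user must start their own session
        self.locked = False
        self.last_activity = now
        return True


s = Session(user_badge="B-1042")
print(s.activity(30.0))              # active use
print(s.activity(200.0))             # idle for 170 s, so the session locks
print(s.badge_tap("B-2077", 205.0))  # a colleague's badge cannot resume it
print(s.badge_tap("B-1042", 206.0))  # the owner's tap unlocks it
```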

For higher-risk activities, like changing network settings or updating firmware, step-up authentication ensures stronger verification is required. Meanwhile, role-based access controls streamline permissions by aligning them with job functions, reducing the need for manual approvals and limiting over-privileged accounts [4].
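A minimal sketch of role-based access combined with step-up authentication follows. The roles, action names, and the set of actions that require step-up are assumptions for illustration. Unknown roles and actions outside a role's job function are denied by default. High-risk actions are allowed only after a second factor.

```python
from enum import IntEnum


class AuthLevel(IntEnum):
    BADGE = 1           # quick re-authentication (badge tap)
    BADGE_PLUS_PIN = 2  # step-up: a second factor for high-risk actions


# Hypothetical role-to-action mapping aligned with job functions
ROLE_PERMISSIONS = {
    "nurse": {"view_vitals", "start_infusion"},
    "biomed": {"view_vitals", "update_firmware", "change_network"},
    "it_security": {"change_network", "view_audit_log"},
}

# Actions assumed to be high-risk enough to need step-up authentication
STEP_UP_ACTIONS = {"update_firmware", "change_network"}


def authorize(role: str, action: str, level: AuthLevel) -> str:
    """Return 'allow', 'deny', or 'step_up_required'."""
    if action not in ROLE_PERMISSIONS.get(role, set()):
        return "deny"  # least privilege: not part of this role's job function
    if action in STEP_UP_ACTIONS and level < AuthLevel.BADGE_PLUS_PIN:
        return "step_up_required"
    return "allow"


print(authorize("nurse", "start_infusion", AuthLevel.BADGE))
print(authorize("biomed", "update_firmware", AuthLevel.BADGE))
print(authorize("nurse", "change_network", AuthLevel.BADGE_PLUS_PIN))
```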

Use Censinet RiskOps™ for Risk Management

Centralized management is essential for tying these human-centered controls into a cohesive defense strategy. Censinet RiskOps™ offers an integrated solution for tracking risks related to devices, patient data, and vendors. Its automated workflows and command center provide real-time visibility into risk status, ensuring all teams - clinicians, biomedical engineers, IT security, and risk managers - are aligned.

The platform simplifies risk assessments by summarizing vendor evidence, capturing product integration details, and generating concise risk reports based on collected data. Automated routing ensures critical findings are sent to the right stakeholders for timely review and action. This unified approach helps organizations effectively manage cybersecurity risks tied to human factors.

Monitor, Measure, and Continuously Improve Human Factor Assessments

To strengthen human-centered cybersecurity, the work doesn’t stop at implementing mitigations. Continuous monitoring and improvement are essential. Organizations need to track key metrics, analyze incidents, and refine controls to keep up with the ever-changing risks tied to human behavior.

Establish Key Human-Factor Metrics

Start by defining clear metrics that help you assess risks tied to human factors. These can be broken into two categories: leading indicators and lagging indicators.

Leading indicators help you catch issues early, before they escalate. Examples include:

- Security training pass rates.

- Time taken to complete critical security tasks (like multi-factor authentication or logins).

- Percentage of devices meeting secure baseline configurations (e.g., default passwords removed, least-privilege roles applied).

- Audit-verified compliance rates.

Lagging indicators, on the other hand, show where things have already gone wrong. These could include:

- Number of security violations by device type or care unit (e.g., unauthorized access attempts, policy overrides).

- Human-factor incidents per 1,000 device uses (like credential sharing or misconfigured settings).

- Reportable breaches where human error was a key cause.
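Two of the indicators above can be computed as sketched below, one lagging and one leading. The device records, the flag names, and the per-unit counts are hypothetical sample data. Normalizing incidents per 1,000 device uses lets you compare units with very different usage volumes.

```python
def rate_per_1000(incidents: int, device_uses: int) -> float:
    """Lagging indicator: human-factor incidents per 1,000 device uses."""
    if device_uses <= 0:
        raise ValueError("device_uses must be positive")
    return 1000 * incidents / device_uses


def secure_baseline_pct(devices: list[dict]) -> float:
    """Leading indicator: share of devices meeting the secure baseline."""
    if not devices:
        raise ValueError("no devices supplied")
    compliant = sum(1 for d in devices
                    if d["default_password_removed"] and d["least_privilege"])
    return 100 * compliant / len(devices)


# Hypothetical monthly data by care unit: (human-factor incidents, device uses)
by_unit = {"ED": (14, 8200), "ICU": (3, 5100)}
for unit, (incidents, uses) in by_unit.items():
    print(f"{unit}: {rate_per_1000(incidents, uses):.2f} incidents per 1,000 uses")

# Hypothetical configuration audit results
devices = [
    {"id": "pump-01", "default_password_removed": True, "least_privilege": True},
    {"id": "pump-02", "default_password_removed": False, "least_privilege": True},
    {"id": "monitor-07", "default_password_removed": True, "least_privilege": True},
    {"id": "monitor-08", "default_password_removed": True, "least_privilege": False},
]
print(f"Secure baseline: {secure_baseline_pct(devices):.0f}% of devices")
```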

To set useful benchmarks, gather 12–24 months of historical data segmented by device type, department, and user role. If historical data isn’t available - such as for new devices or programs - pilot tests over 3–6 months can help establish provisional baselines. Risk levels should guide your targets, with stricter standards for life-critical equipment compared to general-use devices [3][4].
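A minimal sketch of turning monthly rates into a baseline and a tiered target is shown below. The reduction percentages per risk tier are illustrative assumptions and do not come from any standard. Baselines built from fewer than 12 months of data are flagged as provisional, in line with the pilot approach described above. The median is used because one unusual month has less effect on it than on the mean.

```python
from statistics import median

# Assumed reduction targets by risk tier (illustrative, not from any standard)
TARGET_REDUCTION = {"life_critical": 0.5, "general": 0.2}


def baseline(monthly_rates: list[float]) -> tuple[float, bool]:
    """Median monthly rate, plus a flag marking baselines built from < 12 months."""
    if len(monthly_rates) < 3:
        raise ValueError("need at least 3 months of data, the shortest pilot window")
    return median(monthly_rates), len(monthly_rates) < 12


def target(monthly_rates: list[float], tier: str) -> tuple[float, bool]:
    """Target rate for the tier; stricter tiers demand a larger reduction."""
    base, provisional = baseline(monthly_rates)
    return base * (1 - TARGET_REDUCTION[tier]), provisional


# Five months of pilot data (incidents per 1,000 uses) for a new device program
pilot = [2.0, 1.6, 2.4, 1.8, 2.2]
for tier in ("life_critical", "general"):
    goal, provisional = target(pilot, tier)
    label = "provisional" if provisional else "established"
    print(f"{tier}: target {goal:.2f} per 1,000 uses ({label} baseline)")
```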

Consider this: A study of U.S. healthcare data breaches found that incidents involving unintentional insiders exposed, on average, more than twice as many patient records as malicious external attacks. Phishing incidents, in particular, compromised more records per breach than any other category [3]. These findings highlight why behavioral metrics are just as important as technical ones.

By tracking these metrics, organizations can create a foundation for continuous improvement through systematic feedback.

Create Feedback Loops for Continuous Improvement

Every incident or near-miss involving human factors should be part of a structured feedback process [3]. For example, if a user bypasses a certificate warning or a technician connects a device to unauthorized Wi-Fi, conduct a root-cause analysis. Focus on areas like task design, interface cues, environmental pressures, and policies [2]. Use the findings to make improvements in three key areas:

- Device configuration and user interface (UI): Simplify login processes, make security warnings more actionable, and adjust session timeouts based on real-world workflows.

- Workflow and processes: Incorporate secure handoff steps into nursing protocols and standardize secure practices for mobile carts.

- Training and communication: Offer scenario-based refreshers using real incidents and embed just-in-time security tips directly into device interfaces.

Monitor whether these changes lead to improvements in key metrics over the following quarters. Document all updates in risk management files to meet regulatory requirements for postmarket surveillance [2][6][4].

"Not only did we get rid of spreadsheets, but we have that larger community [of hospitals] to partner and work with." - James Case, VP & CISO, Baptist Health [1]

Collaborative networks amplify these efforts by allowing organizations to share lessons and drive industry-wide progress.

Schedule Periodic Reviews and Use Platforms

Even after implementing controls, regular reviews are necessary to ensure human-factor risks remain managed as workflows evolve. Incorporate human-factor metric reviews into existing risk management meetings on a quarterly or semiannual basis [2][4]. Update baselines and targets annually or after major changes, documenting the reasoning in risk management plans [2][6][4].

Tools like Censinet RiskOps™ can help benchmark your cybersecurity and human-factor risk posture, making it easier to prioritize resources effectively.

"Benchmarking against industry standards helps us advocate for the right resources and ensures we are leading where it matters." - Brian Sterud, CIO, Faith Regional Health [1]

To make these efforts sustainable, integrate monitoring into existing workflows rather than creating separate processes. For instance:

- Add short human-factor fields to current incident reporting forms.

- Include targeted metrics in established quality dashboards.

- Incorporate cybersecurity scenarios into usability testing required for regulatory submissions.
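The first two items can be sketched as a few optional fields added to an existing incident record, plus a count that an existing quality dashboard could display. The existing-form fields, the new `hf_` field names, and the allowed vocabularies are assumptions. The error types reuse the taxonomy from earlier in this guide.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

# Allowed values for the added fields (assumed vocabularies)
ERROR_TYPES = {"slip", "lapse", "rule_mistake", "knowledge_mistake", "violation"}
FACTORS = {"workload", "interface", "time_pressure", "training"}


@dataclass
class IncidentReport:
    # Fields an existing incident form is assumed to have
    incident_id: str
    device_type: str
    care_unit: str
    description: str
    # Short human-factor additions (hypothetical names), all optional
    hf_error_type: Optional[str] = None
    hf_factor: Optional[str] = None
    hf_near_miss: bool = False


def validate(report: IncidentReport) -> list[str]:
    """Return problems with the human-factor fields; an empty list means valid."""
    problems = []
    if report.hf_error_type is not None and report.hf_error_type not in ERROR_TYPES:
        problems.append(f"unknown error type: {report.hf_error_type}")
    if report.hf_factor is not None and report.hf_factor not in FACTORS:
        problems.append(f"unknown contributing factor: {report.hf_factor}")
    return problems


def dashboard_counts(reports: list[IncidentReport]) -> Counter:
    """Count reports by (care unit, error type) for an existing quality dashboard."""
    return Counter((r.care_unit, r.hf_error_type) for r in reports
                   if r.hf_error_type is not None)


reports = [
    IncidentReport("IR-101", "infusion pump", "ED", "Session left open",
                   hf_error_type="lapse", hf_factor="workload"),
    IncidentReport("IR-102", "monitor", "ED", "Shared login used",
                   hf_error_type="violation", hf_factor="time_pressure"),
    IncidentReport("IR-103", "infusion pump", "ED", "Session left open",
                   hf_error_type="lapse", hf_factor="interface", hf_near_miss=True),
]
print(dashboard_counts(reports))
```

Keeping the new fields optional lets existing reports and workflows continue unchanged while the human-factor data accumulates.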

This integrated approach keeps the process manageable, even in the fast-paced and demanding environment of healthcare. By embedding monitoring into daily operations, organizations can ensure continuous improvement without overwhelming their teams.

Conclusion

Integrating human factors into the cybersecurity of medical devices is critical for safeguarding patient safety and adhering to regulatory demands. Research indicates that accidental insider errors have compromised more than twice as many patient records as malicious breaches [3]. This stark reality emphasizes one key point: technical defenses alone are insufficient if human behavior isn't addressed.

As discussed, every step in the process - from analyzing user interactions to tracking human-factor metrics - fortifies defenses against device vulnerabilities. Start by mapping workflows, identifying risky practices, and incorporating these insights into structured assessments like URRA and FMEA. Focus on secure-by-design solutions that align seamlessly with clinical workflows, ensuring that the secure option becomes the easiest choice. Test these controls through human factors evaluations, as now mandated by the FDA for cybersecurity-critical tasks [2]. Additionally, maintain an ongoing cycle of monitoring, measuring, and refining strategies based on real-world incidents and user feedback.

This comprehensive approach not only meets regulatory requirements but also strengthens operational reliability. It helps organizations comply with FDA cybersecurity guidance, ISO 14971 standards, and HIPAA security rules while minimizing risks like device failures, altered clinical settings, or breaches of patient data - all of which directly affect care quality.

Tools like Censinet RiskOps™ simplify this process by tracking risks across devices and workflows. Its collaborative features and benchmarking tools allow organizations to learn from industry trends, allocate resources wisely, and sustain improvements without overwhelming limited teams.

Ultimately, clinicians, engineers, and users are integral to creating a secure system shaped by thoughtful design, efficient workflows, and a strong security culture. When cybersecurity measures align naturally with how people work, patient safety improves - and that’s the ultimate goal. By embedding human factors into cybersecurity strategies, healthcare organizations ensure that their defenses evolve in step with clinical practices, keeping patients at the center of care.

FAQs

How does Human Factors Engineering enhance medical device cybersecurity?

Human Factors Engineering plays a critical role in strengthening the cybersecurity of medical devices by examining how people interact with these tools. It identifies risks stemming from human error and creates design solutions aimed at reducing those risks.

By prioritizing user-friendly designs and intuitive interfaces, this approach minimizes the chances of mistakes that could expose security gaps. The result is better protection for sensitive information and secure, reliable device performance in healthcare settings.

What are some common mistakes people make that can compromise the cybersecurity of medical devices?

Human mistakes often contribute to the cybersecurity risks surrounding medical devices. Here are some of the most frequent errors:

- Lack of proper training: Many users are not equipped to identify cybersecurity threats or manage devices securely.

- Improper device configuration: Errors in setup can create weak points that hackers can exploit.

- Skipping updates: Ignoring software updates or patches leaves devices open to vulnerabilities that have already been identified.

- Weak or poorly managed credentials: Using easy-to-guess passwords or mishandling login details can invite unauthorized access.

- Limited awareness of cybersecurity threats: Without understanding risks like phishing or malware, users may unknowingly expose devices to breaches.

To reduce these risks, organizations must prioritize comprehensive training, establish clear security protocols, and ensure systems are consistently updated. These steps can go a long way in safeguarding medical devices from potential threats.

How can organizations incorporate human factors into medical device cybersecurity?

To tackle human-related challenges in medical device cybersecurity, organizations must perform detailed risk assessments that consider how human behavior might influence security. This means pinpointing areas like possible user mistakes, internal threats, and shortcomings in training programs.

Investing in focused cybersecurity training is key. It raises awareness and empowers staff to make more secure choices. On top of that, using tools such as advanced risk management platforms allows organizations to keep an eye on vulnerabilities tied to human factors and address them promptly. This approach helps maintain a stronger, more proactive cybersecurity stance.