The Technology Stack of Tomorrow: Building AI-Ready Infrastructure

Post Summary

Across the U.S., healthcare organizations are racing to integrate AI into clinical and operational systems. But here’s the challenge: AI needs a strong tech foundation to succeed. Without the right infrastructure - secure data systems, scalable computing, and compliance frameworks - AI projects risk failure or harm.

Key points to know:

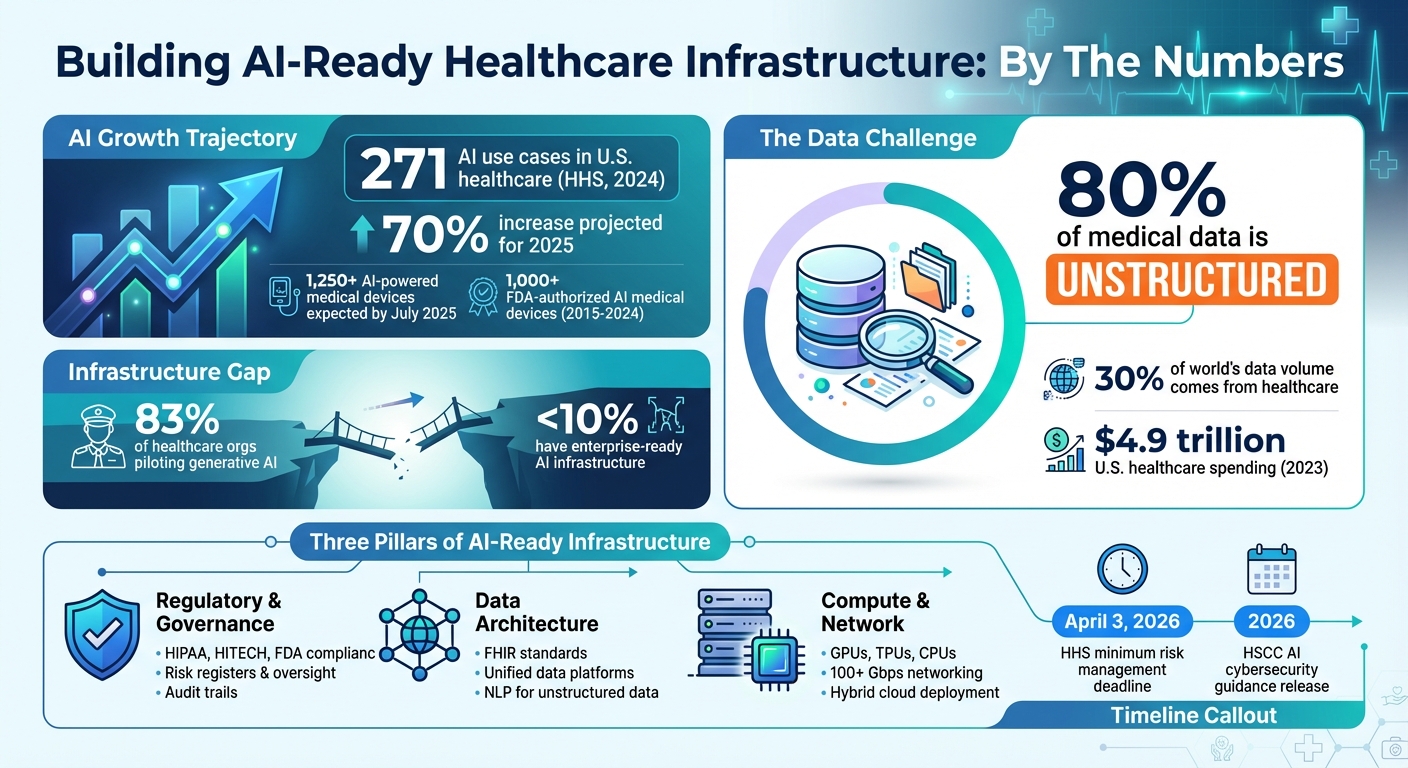

- AI Growth in Healthcare: The U.S. Department of Health and Human Services (HHS) reported 271 AI use cases in 2024, with a 70% increase projected for 2025.

- Infrastructure Challenges: Issues like poor data quality, unstructured formats, and inadequate security can derail AI systems.

- Core Requirements: AI-ready systems need governance aligned with regulations, standardized data (e.g., FHIR), scalable computing (e.g., GPUs, TPUs), and secure networks.

This guide dives into the essential components - data, compliance, and computing - plus strategies for building scalable, secure AI systems in healthcare.

AI-Ready Healthcare Infrastructure: Key Statistics and Requirements 2024-2026

Core Components of an AI-Ready Technology Stack

Building an AI-ready infrastructure for healthcare involves three key pillars: regulatory compliance and governance, a strong data architecture, and scalable compute and network resources. Each of these components must cater to the specific demands of the healthcare field, such as safeguarding patient privacy, ensuring safety, and supporting real-time clinical decisions. Together, these elements create a foundation for secure, interoperable, and scalable AI systems in healthcare.

Regulatory and Governance Requirements

In the U.S., regulations like HIPAA, HITECH, and FDA guidelines enforce strict standards for access controls, encryption, and audit trails. The FDA regulates AI used for diagnosis, treatment, or disease prevention as a medical device, categorizing it as Software as a Medical Device (SaMD) or Software in a Medical Device (SiMD). By July 2025, more than 1,250 AI-powered medical devices are expected to be authorized in the U.S. [3].

To ensure compliance and accountability, organizations should implement AI governance practices such as maintaining risk registers, setting up oversight committees, and keeping detailed logs of model inputs, outputs, and decision-making pathways. These measures enable full auditability of AI systems throughout their lifecycle.
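One way to make model inputs and outputs auditable is an append-only log in which each entry carries a hash of the previous one, so later tampering can be detected. The sketch below is illustrative only: the `InferenceAuditLog` class and its field names (`model_id`, `input_digest`, and so on) are hypothetical, and it stores a digest of the inputs rather than the raw values on the assumption that PHI should not be copied into logs.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(payload: dict) -> str:
    """Stable SHA-256 digest of a JSON-serializable payload."""
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


class InferenceAuditLog:
    """Append-only, hash-chained log of model inferences (hypothetical design)."""

    def __init__(self):
        self.entries = []

    def record(self, model_id: str, model_version: str, inputs: dict, output: dict) -> dict:
        # Link each entry to the previous one; the first entry links to a zero hash.
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else "0" * 64
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_id": model_id,
            "model_version": model_version,
            "input_digest": _digest(inputs),  # digest only, no raw PHI in the log
            "output": output,
            "prev_hash": prev_hash,
        }
        entry = {**body, "entry_hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; returns False if an entry was altered or reordered."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["entry_hash"]:
                return False
            prev_hash = entry["entry_hash"]
        return True
```

In practice a log like this would be written to durable, access-controlled storage; the in-memory list here only keeps the example self-contained.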

Data Architecture and Interoperability

AI systems thrive on high-quality, accessible data. A unified healthcare data platform is critical for consolidating information from various sources into a single framework that supports data lakes, cloud storage, and real-time streaming [8]. However, around 80% of medical data remains unstructured - like clinical notes and discharge summaries - which presents challenges for traditional systems [8].

Standards like FHIR act as a common language for consistent data interpretation. FHIR APIs enable seamless access to large datasets for training machine learning models while also supporting individual patient record retrieval and bulk data operations [5][6][7]. AI is playing a role in improving interoperability by automating data transformation and standardization. For instance, AI models can map fields across different formats and align clinical terms with standards like SNOMED CT, LOINC, and RxNorm [4][8]. Additionally, Natural Language Processing (NLP) models fine-tuned for clinical text can extract structured insights from unstructured notes, making critical data easily accessible for analysis [8]. This unified approach to data directly supports real-time clinical decision-making.
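To make the "common language" point concrete, here is a minimal sketch of flattening a FHIR search result (a `Bundle` of `Observation` resources) into rows that a training pipeline could consume. The sample bundle is made up for illustration, and the function name `observations_to_rows` and the output column names are assumptions; a real pipeline would retrieve the bundle from a FHIR server and handle many more resource types and value formats.

```python
LOINC_SYSTEM = "http://loinc.org"


def observations_to_rows(bundle: dict) -> list:
    """Flatten Observation resources in a FHIR Bundle into plain dict rows."""
    rows = []
    for item in bundle.get("entry", []):
        resource = item.get("resource", {})
        if resource.get("resourceType") != "Observation":
            continue  # skip Patient, Encounter, and other resource types
        # Pick the LOINC coding if one is present among the codings.
        loinc = next(
            (c for c in resource.get("code", {}).get("coding", [])
             if c.get("system") == LOINC_SYSTEM),
            None,
        )
        quantity = resource.get("valueQuantity", {})
        rows.append({
            "patient": resource.get("subject", {}).get("reference"),
            "loinc_code": loinc["code"] if loinc else None,
            "value": quantity.get("value"),
            "unit": quantity.get("unit"),
            "effective": resource.get("effectiveDateTime"),
        })
    return rows


# Illustrative sample only; not real patient data.
SAMPLE_BUNDLE = {
    "resourceType": "Bundle",
    "type": "searchset",
    "entry": [
        {"resource": {"resourceType": "Patient", "id": "example"}},
        {"resource": {
            "resourceType": "Observation",
            "status": "final",
            "code": {"coding": [
                {"system": LOINC_SYSTEM, "code": "8867-4", "display": "Heart rate"}
            ]},
            "subject": {"reference": "Patient/example"},
            "effectiveDateTime": "2025-01-15T08:30:00Z",
            "valueQuantity": {"value": 88, "unit": "beats/minute",
                              "system": "http://unitsofmeasure.org", "code": "/min"},
        }},
    ],
}
```

Calling `observations_to_rows(SAMPLE_BUNDLE)` yields a single row for the heart-rate observation and skips the `Patient` entry. Because every source system emits the same structure and code systems, the same function works regardless of which EHR produced the data.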

To maintain compliance, healthcare organizations should enforce strict access controls, encrypt data both at rest and in transit, and implement role-based data access. Robust data governance frameworks should also be established with vendors to ensure accountability. Every data transaction should leave a verifiable audit trail, recording who accessed the information, when, and for what purpose [7][8].
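As a rough sketch of how role-based access and an audit trail fit together, the example below checks a role's permissions and records who asked, when, for which record, and for what stated purpose. The role names, permission strings, and the `access_record` function are hypothetical, and the in-memory list stands in for whatever durable audit store an organization actually uses.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; real deployments would load this from policy.
ROLE_PERMISSIONS = {
    "physician": {"read_clinical", "write_clinical"},
    "data_scientist": {"read_deidentified"},
    "billing": {"read_billing"},
}

AUDIT_TRAIL = []  # stand-in for a durable, append-only audit store


def access_record(user: str, role: str, action: str, record_id: str, purpose: str) -> bool:
    """Return whether the action is allowed, logging the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    AUDIT_TRAIL.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "record_id": record_id,
        "purpose": purpose,
        "allowed": allowed,
    })
    return allowed
```

Denied attempts are logged as well as granted ones, since a pattern of refused requests is often the more useful signal during a review.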

Compute, Storage, and Network Requirements

Once data and governance are in place, the next step is ensuring the right compute and networking resources are available. AI workloads demand specialized infrastructure designed for optimized compute, storage, networking, and orchestration [9]. While 83% of healthcare organizations are piloting generative AI, fewer than 10% have invested in infrastructure capable of supporting enterprise-wide AI deployment [10].

Compute resources should include GPUs for deep learning and transformer models, CPUs for orchestration and lighter tasks, and specialized accelerators like TPUs or Inferentia chips for niche applications [9]. Storage solutions must accommodate various needs, such as object storage for large datasets, high-performance NVMe SSDs or distributed file systems for active training, and caching layers to reduce latency [9].

Networking infrastructure is equally important. Distributed training requires low-latency technologies like InfiniBand or RDMA, while cloud deployments often rely on Ethernet speeds exceeding 100 Gbps [9]. For edge AI applications - such as real-time clinical decision-making at the point of care - local processing and intelligent caching are essential to handle intermittent connectivity and meet immediate data needs [9]. Orchestration tools like Kubernetes, Kubeflow, Ray, and Dask are invaluable for managing these dynamic workloads, offering automated scaling, fault tolerance, and resource optimization across distributed systems [9].
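For the edge scenario described above, one simple pattern is to try the live source first and fall back to a recent cached value when the connection drops. The sketch below assumes a hypothetical `fetch` callable that raises `ConnectionError` when the network is unavailable; the `EdgeCache` class and the staleness limit are illustrative choices, not a prescribed design.

```python
import time


class EdgeCache:
    """Serve live data when reachable, otherwise a cached value that is not too stale."""

    def __init__(self, fetch, max_stale_seconds, clock=time.monotonic):
        self._fetch = fetch              # callable(key) -> value; may raise ConnectionError
        self._max_stale = max_stale_seconds
        self._clock = clock              # injectable so the behavior can be tested
        self._store = {}                 # key -> (value, fetched_at)

    def get(self, key):
        """Return (value, source) where source is 'live' or 'cached'."""
        try:
            value = self._fetch(key)
            self._store[key] = (value, self._clock())
            return value, "live"
        except ConnectionError:
            if key in self._store:
                value, fetched_at = self._store[key]
                if self._clock() - fetched_at <= self._max_stale:
                    return value, "cached"
            # No usable cached value: surface the outage to the caller.
            raise
```

Returning the source alongside the value lets the clinical application show when it is working from cached data, which matters when decisions depend on how fresh a reading is.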

Finally, hybrid deployment models are crucial for balancing the scalability of cloud solutions with the control of on-premises systems. This flexibility allows organizations to train AI models close to where the data resides while deploying inference capabilities wherever clinical decisions are made - whether in a data center, the cloud, or at the edge.

Building Security and Risk Management into AI-Ready Infrastructure

Strengthening cybersecurity within AI infrastructure is a critical step in ensuring patient safety, safeguarding data privacy, and meeting regulatory requirements. Healthcare organizations face unique AI-related threats, including model poisoning, data corruption, adversarial attacks, and AI "hallucinations" that can jeopardize clinical decisions [11][13]. Cybercriminals are also leveraging AI to automate attacks, create deepfakes, and enhance social engineering tactics [14]. To counter these evolving risks, adopting a Zero Trust approach is essential to secure every layer of AI operations.

Zero Trust Security Model

The Zero Trust model operates on a simple principle: trust nothing, verify everything. As Palo Alto Networks puts it:

"Zero Trust is a security principle based on the idea that no user or system should be inherently trusted. Every interaction must be verified - no exceptions." [12]

In AI systems, this philosophy translates to implementing robust identity and access controls at every phase, from data ingestion to model training and inference. Tools like role-based access control (RBAC), just-in-time (JIT) access, and microsegmentation ensure that every interaction is authenticated and authorized. This is especially important in multi-tenant environments or systems with exposed APIs, where risks like unauthorized access or data leaks are heightened [12]. Healthcare organizations should also enforce strict policies against sharing login credentials for systems containing electronic Protected Health Information (ePHI) and ensure devices automatically log off when unattended [2].
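Just-in-time access can be pictured as a grant that expires on its own, so no standing permission is left behind. The following is a minimal sketch under that assumption; the `JitAccessBroker` and `AccessGrant` names are hypothetical, and a production system would tie grants to an identity provider and an approval workflow rather than an in-memory list.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AccessGrant:
    user: str
    resource: str
    expires_at: datetime


class JitAccessBroker:
    """Issues short-lived grants and checks them on every request (illustrative)."""

    def __init__(self):
        self._grants = []

    def grant(self, user: str, resource: str, minutes: int, now: datetime = None) -> AccessGrant:
        now = now or datetime.now(timezone.utc)
        new_grant = AccessGrant(user, resource, now + timedelta(minutes=minutes))
        self._grants.append(new_grant)
        return new_grant

    def is_authorized(self, user: str, resource: str, now: datetime = None) -> bool:
        # Verified on each call: access lapses automatically once the grant expires.
        now = now or datetime.now(timezone.utc)
        return any(
            g.user == user and g.resource == resource and now < g.expires_at
            for g in self._grants
        )
```

A data scientist might, for example, receive a 30-minute grant to a training dataset; once the window passes, `is_authorized` returns `False` without anyone having to revoke anything.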

AI-Specific Cybersecurity Measures

Traditional cybersecurity measures alone fall short when it comes to protecting AI systems. Healthcare organizations need tailored incident response strategies to address threats like model poisoning and adversarial attacks that can degrade AI performance [11]. In November 2025, the Health Sector Coordinating Council (HSCC) previewed its 2026 AI cybersecurity guidance, which includes practical playbooks designed to handle these challenges. This guidance emphasizes "Secure by Design Medical" principles, which embed security into AI-enabled medical devices from the start, aligning with FDA recommendations and the NIST AI Risk Management Framework [11].

Key measures include continuous monitoring, secure model backups, and rapid containment protocols for breaches. Collaboration between cybersecurity and data science teams helps ensure that security measures address emerging threats without degrading AI performance. Maintaining an up-to-date inventory of AI systems - along with a clear understanding of their functions, data dependencies, and security implications - helps organizations classify AI tools and align oversight efforts with system risk levels [11].

In December 2025, the U.S. Department of Health and Human Services (HHS) released its AI strategy, requiring each division to identify "high-impact" AI systems - those that affect health outcomes, rights, or sensitive data - and implement minimum risk management practices by April 3, 2026. These practices include bias mitigation, outcome monitoring, security, and human oversight. The strategy also introduced an AI Governance Board to oversee AI activities and ensure compliance with cybersecurity standards aligned with NIST guidelines [1].
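An AI inventory with risk tiering can start as a very small data structure. The sketch below records each system's function and data dependencies and flags it as high-impact using the three criteria named above. It is a deliberate simplification for illustration, not the official HHS classification logic, and the `AISystem` fields and `oversight_plan` function are hypothetical names.

```python
from dataclasses import dataclass, field

# Minimum practices listed in the text for high-impact systems.
REQUIRED_PRACTICES = ["bias mitigation", "outcome monitoring", "security", "human oversight"]


@dataclass
class AISystem:
    name: str
    function: str
    data_dependencies: list = field(default_factory=list)
    affects_health_outcomes: bool = False
    affects_rights: bool = False
    uses_sensitive_data: bool = False

    @property
    def high_impact(self) -> bool:
        # Simplified reading of "affects health outcomes, rights, or sensitive data".
        return self.affects_health_outcomes or self.affects_rights or self.uses_sensitive_data


def oversight_plan(inventory: list) -> dict:
    """Map each system name to the practices it must have in place."""
    return {
        system.name: (list(REQUIRED_PRACTICES) if system.high_impact else [])
        for system in inventory
    }
```

Even a simple structure like this makes it possible to answer the first questions an oversight committee will ask: which systems exist, what data they touch, and which ones need the stricter controls.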

Third-Party and Supply Chain Risk Management

Securing AI systems requires more than just internal safeguards; managing vendor risks is equally critical. Many healthcare AI systems depend on third-party vendors, creating vulnerabilities within the supply chain. The HSCC's 2026 guidance addresses these risks through a workstream focused on Third-Party AI Risk and Supply Chain Transparency. This initiative aims to improve visibility into vendor tools, establish governance policies, and standardize processes for procurement, vendor evaluation, and lifecycle management [11].

Business Associate Agreements (BAAs) remain a cornerstone for vendors handling PHI, outlining permitted uses, safeguards, breach-notification responsibilities, and subcontractor requirements [15]. Meanwhile, newer regulations like the U.S. HTI-1 rule and the EU AI Act introduce AI-specific obligations, including algorithm transparency, risk management, logging, and human oversight [15].

Platforms like Censinet RiskOps™ streamline vendor risk management by automating questionnaires, consolidating evidence, tracking integration details, and identifying fourth-party exposures. Censinet AI™ accelerates risk assessments, enabling healthcare organizations to manage risks more efficiently while maintaining human oversight through configurable rules and review processes. By centralizing AI policies and risks, the platform ensures key findings are routed to appropriate stakeholders for continuous monitoring and action.

Operationalizing AI: MLOps and Analytics Platforms

Putting AI to work in healthcare isn't just about deploying models - it’s about creating workflows that ensure these models deliver consistent, reliable results. Without a robust operational framework, AI systems can fall short or even pose risks in clinical settings. By building on a secure and scalable foundation, MLOps and integrated analytics can help turn AI's potential into meaningful clinical outcomes.

MLOps for Healthcare

MLOps (Machine Learning Operations) is the engine that drives AI deployment, focusing on an ongoing cycle of design, evaluation, scaling, and monitoring [17]. This approach keeps AI models accurate, secure, and compliant throughout their lifecycle.
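The monitoring step of that cycle often includes checking whether live input data still resembles the data a model was trained on. The text does not prescribe a method; the sketch below uses the Population Stability Index (PSI) as one commonly used option, with bin edges chosen by the caller. The function name, the `eps` floor for empty bins, and the example values in the usage note are all assumptions made for illustration.

```python
import math


def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI between a baseline sample and a live sample over fixed, sorted bin edges.

    Both samples are assumed to be non-empty lists of numbers.
    """

    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index = number of edges at or below the value.
            counts[sum(v >= e for e in edges)] += 1
        # Floor empty bins at eps so the logarithm below stays defined.
        return [max(c / len(values), eps) for c in counts]

    baseline = proportions(expected)
    live = proportions(actual)
    return sum((a - b) * math.log(a / b) for b, a in zip(baseline, live))
```

For example, comparing a feature's training-time values against last week's production values with edges such as `[25, 50, 75]` gives 0 when the two distributions match and grows as they diverge. What PSI value should trigger review or retraining is a policy decision each team has to set for itself.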

The quality of the data powering these models is key. High-quality, diverse electronic medical record (EMR) data is critical for training, testing, and validating AI systems [16][18][19]. Poor data quality or biased datasets can lead to unreliable models and risky clinical decisions.
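Two quick checks can surface the problems described above before training starts: how complete each required field is, and how the records are distributed across a demographic attribute. This is a minimal sketch with hypothetical function and field names; real EMR validation would go much further (value ranges, units, duplicates, temporal consistency).

```python
from collections import Counter


def completeness(records, required_fields):
    """Fraction of records with a non-empty value for each required field."""
    total = len(records)
    return {
        name: (sum(1 for r in records if r.get(name) not in (None, "")) / total
               if total else 0.0)
        for name in required_fields
    }


def group_shares(records, attribute):
    """Share of records per value of an attribute, to spot under-represented groups."""
    counts = Counter(r.get(attribute, "unknown") for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}
```

A low completeness score for a key lab value, or a group that makes up only a sliver of the dataset, is a prompt to investigate before the model is trained rather than after it is deployed.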

Organizations must integrate IT teams, data scientists, leadership, and ethical oversight into their AI operations. This collaboration ensures models remain secure, compliant, and aligned with regulatory and ethical standards [23]. Strong IT governance practices are essential to safeguarding patient privacy, maintaining data security, and upholding ethical AI use throughout the entire model lifecycle.

Analytics and Decision Support Integration

While MLOps ensures AI systems work as intended, analytics platforms bring these insights to clinicians, making them actionable. To be effective, AI analytics tools must integrate seamlessly with electronic health record (EHR) workflows, delivering insights that are both timely and easy to act on. These platforms can help streamline administrative tasks, ease the workload on healthcare professionals, and optimize resource use [21][22]. However, incorporating AI often requires rethinking workflows to ensure the insights fit naturally into clinical routines [22].

For successful integration, healthcare organizations need specialized infrastructure, efficient data movement, and ongoing monitoring [20]. AI tools should provide insights at just the right moment, avoiding unnecessary alerts that could overwhelm clinicians. Equally important is transparency and explainability - clinicians must understand how AI systems generate their recommendations. Without this understanding, even the most accurate tools may go unused.
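One simple way to limit unnecessary alerts is to combine a risk threshold with a per-patient cooldown, so the same high score does not page a clinician repeatedly. The sketch below illustrates that idea only; the `AlertGate` class, the threshold, and the cooldown length are hypothetical, and appropriate values would need clinical input.

```python
class AlertGate:
    """Suppress low-risk alerts and repeats for the same patient within a cooldown."""

    def __init__(self, threshold: float, cooldown_seconds: float):
        self.threshold = threshold
        self.cooldown_seconds = cooldown_seconds
        self._last_alert = {}  # patient_id -> time of the last alert that was sent

    def should_alert(self, patient_id: str, risk_score: float, now: float) -> bool:
        if risk_score < self.threshold:
            return False  # below the actionable threshold
        last = self._last_alert.get(patient_id)
        if last is not None and now - last < self.cooldown_seconds:
            return False  # already alerted recently for this patient
        self._last_alert[patient_id] = now
        return True
```

With a threshold of 0.8 and a one-hour cooldown, a patient scoring 0.9 triggers one alert, and a second score of 0.95 ten minutes later is held back. A real system would likely also let a sharp escalation override the cooldown.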

To fully operationalize AI, healthcare organizations must address gaps in hardware, software, and connectivity. Implementing phased upgrades can pave the way for seamless integration and scalability [24]. This infrastructure must support real-time decision-making while adhering to the strict security and compliance standards required in healthcare.

Implementation Roadmap for AI-Ready Infrastructure

Creating an AI-ready infrastructure in healthcare takes thoughtful planning, gradual implementation, and ongoing adjustments to avoid disruptions in patient care. A well-structured roadmap ensures progress is both ambitious and practical, building on each step to support seamless transitions.

Assessing Current Infrastructure and Identifying Gaps

Start by taking stock of your current AI capabilities. This includes evaluating diagnostic tools, medical devices, clinical software, data centers, cloud services, and network systems [26]. Identify where AI is already making a difference - whether it’s speeding up workflows, improving accuracy, or enhancing overall performance [26].

After mapping out your existing setup, conduct a detailed needs assessment. Define the specific problem AI is meant to solve, understand its root causes, and identify who will benefit from the solution [25]. A strong, evidence-based case for AI adoption is crucial. It should not only highlight the need but also explain how targeted AI solutions will address those challenges. This groundwork sets the stage for a phased and strategic modernization plan.

Phased Modernization and Technology Adoption

Take a step-by-step approach to modernization. Start by focusing on internal operations - centralizing data, streamlining workflows, and strengthening cybersecurity - before rolling out AI solutions in patient-facing areas [27][28].

Equally critical is preparing your workforce. Invest in training and change management programs that help staff adapt to working alongside AI tools. When your infrastructure is updated and your team is ready, the next step is maintaining momentum through consistent oversight and adjustments.

Continuous Improvement and Risk Management

AI infrastructure isn’t a one-and-done effort. It requires ongoing monitoring and updates to stay aligned with technological advancements [1][10]. Regular reviews, clear performance metrics, and a commitment to continuous learning ensure your AI strategy evolves effectively in response to new challenges.

To keep pace with shifting regulations and emerging cybersecurity threats, proactive risk management is essential. Encourage ongoing education through AI-focused forums, webinars, and cross-team collaborations. Tools like Censinet RiskOps™ can provide centralized oversight, offering real-time visibility into AI-related risks, policies, and tasks. This ensures that the right teams address emerging issues promptly.

Conclusion

Creating an AI-ready healthcare infrastructure goes beyond simply adopting new technologies - it requires a strong, adaptable foundation capable of keeping pace with the industry's rapid changes. The Department of Health and Human Services (HHS) reported 271 AI use cases in 2024 and projected a 70% increase for 2025, which underscores the pressing need for such resilient systems [1][2].

The move toward a modular and interconnected AI architecture - grounded in interoperability, governance, and security - is no longer an optional upgrade; it is a necessity [29]. With healthcare generating approximately 30% of the world's data volume, the systems managing this information must be equipped to handle the load effectively [30].

As outlined in our phased modernization strategy, the key to success lies in shifting from quick fixes to long-term, strategic solutions. This involves establishing solid data governance frameworks from the start, aligning cybersecurity measures with NIST standards, and ensuring systems allow for real-time data access across the organization [1][29]. Consider this: between 2015 and 2024, the FDA authorized over 1,000 AI-powered medical devices, a clear indicator of the growing integration of AI in healthcare - and of the need for infrastructure that can support these advancements securely [29].

Looking ahead, it’s essential to keep patient safety, data privacy, and care quality at the forefront. Organizations that thrive view infrastructure transformation as an ongoing process. By following a phased approach - optimizing current systems, advancing through platform integration, and adopting advanced technologies - you position yourself to adapt as AI capabilities continue to evolve [31]. With U.S. healthcare spending reaching $4.9 trillion in 2023, the urgency to modernize is clear [29].

An AI-ready infrastructure should not only enable innovation but also uphold patient safety, data privacy, and care quality as core principles. By balancing these priorities with scalability and performance, you create a technology foundation that supports today’s AI needs while paving the way for future advancements.

FAQs

What are the key components for building an AI-ready infrastructure in healthcare?

Creating an infrastructure that's ready for AI in healthcare involves a few key building blocks. First, data liquidity and interoperability are essential. These ensure that data can flow smoothly between systems, enabling better collaboration and more accurate insights. Of course, this all depends on having high-quality, well-managed data as the backbone for reliable AI outcomes.

Next, scalable computing and storage solutions are a must. Advanced AI applications come with heavy demands, so having the right systems in place to handle those workloads is non-negotiable. On top of that, an AI-savvy workforce is crucial. Without a team that understands how to work with AI tools, even the best technology will fall short. Finally, disciplined procurement processes help ensure that the tools and technologies chosen align with both technical needs and regulatory requirements.

When these pieces come together, they create a secure, efficient infrastructure that’s equipped to meet the unique demands of healthcare and support the future of AI-driven innovation.

How does the Zero Trust model improve AI security in healthcare systems?

The Zero Trust model enhances AI security in healthcare by focusing on three key principles: continuous identity verification, strict access controls, and constant monitoring of devices and data. This means no user or system is ever automatically trusted - every interaction is verified. The result? A significant reduction in risks like unauthorized access, data breaches, and cyberattacks.

For healthcare organizations, adopting Zero Trust principles offers major benefits. It provides stronger protection for sensitive patient information, supports compliance with regulatory requirements, and creates a safer environment for incorporating advanced AI technologies into their systems.

Why is interoperability essential for AI in healthcare?

Interoperability plays a crucial role in the use of AI within healthcare, as it enables different systems to exchange and understand data effortlessly. This seamless data sharing ensures that AI models have access to accurate, comprehensive, and contextually relevant information - essential for making well-informed decisions and delivering better outcomes for patients.

When systems communicate effectively, coordination improves, errors decrease, and healthcare processes become more efficient. Interoperability also supports patient safety while paving the way for advancements in AI-powered healthcare solutions.
