Top AI Tools for HIPAA-Compliant Data De-Identification

Post Summary

Healthcare organizations need to protect patient privacy while using data for research and AI development. HIPAA-compliant de-identification strips or transforms identifying information so that data can be used without violating privacy rules. The process removes or generalizes details such as names, dates, and geographic data to reduce the risk of re-identification. AI tools make this practical at scale, processing large datasets faster and more consistently than manual review.

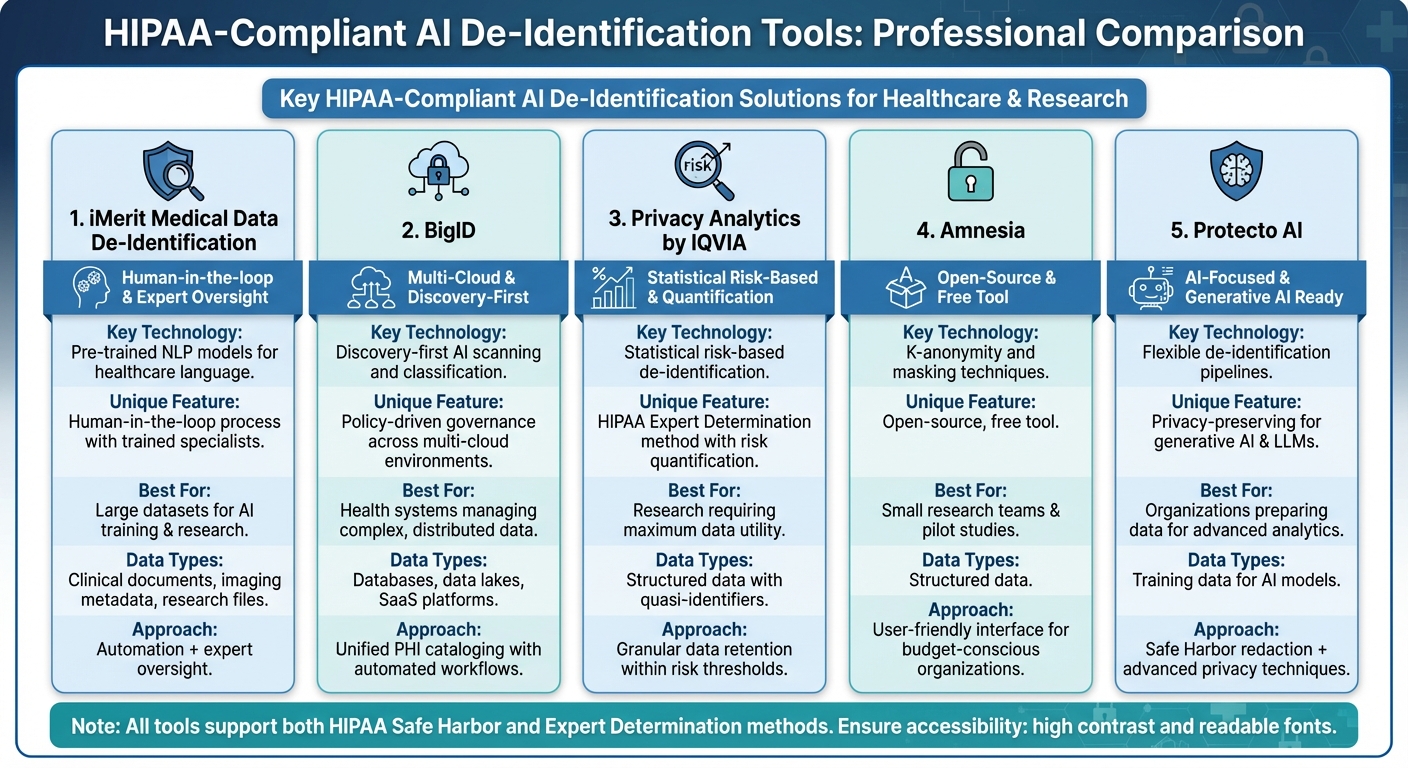

Here are five AI tools that help healthcare organizations meet HIPAA standards for data de-identification:

- iMerit: Combines AI with human oversight to remove PHI from clinical documents and imaging metadata.

- BigID: Automatically identifies and catalogs PHI across various environments for de-identification workflows.

- Privacy Analytics by IQVIA: Uses statistical methods to minimize re-identification risk while retaining data utility.

- Amnesia: Open-source tool for anonymizing structured data using techniques like k-anonymity.

- Protecto AI: Focuses on privacy-preserving techniques for preparing data for AI and analytics.

Each tool supports one or both of HIPAA’s de-identification methods, Safe Harbor and Expert Determination, while aiming to preserve data usability. These platforms can also be integrated into broader risk management strategies for secure and consistent handling of protected health information.

Requirements for HIPAA-Compliant AI Tools

Choosing the right AI de-identification tool goes beyond flashy marketing claims - it requires verifying that the platform meets strict technical, security, and governance standards. Healthcare organizations need tools that can consistently handle protected health information (PHI) across various data types while staying compliant with federal regulations.

Technical Features for PHI Detection and Anonymization

To meet HIPAA's Safe Harbor standard, an AI tool must reliably detect and transform all 18 categories of identifiers listed in the rule. Missing even one identifier can make a dataset non-compliant.

The most effective tools combine rule-based methods, like regular expressions, with advanced NLP models that understand the context in clinical narratives, including abbreviations and unique phrasing. These tools must work seamlessly across diverse data formats, such as structured tables from electronic health records, free-text clinical notes, PDFs, DICOM images, and FHIR resources. This ensures no gaps where PHI could slip through. Additionally, the platform should support both Safe Harbor workflows (removing all 18 identifiers) and Expert Determination processes (using statistical analysis to confirm minimal re-identification risk), with configurable options tailored to different scenarios.
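The rule-based layer described above can be sketched with a few regular expressions. This is a minimal illustration, not a complete detector: the patterns cover only a handful of identifier types, the MRN format is an assumption, and the names `PHI_PATTERNS`, `find_phi`, and `redact` are hypothetical. A production system would pair rules like these with a contextual NLP model for names, addresses, and free-text dates.

```python
import re

# Illustrative patterns only; real deployments need far broader coverage.
PHI_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),
    # Assumed MRN format: the letters "MRN", optional ":" or "#", 6-10 digits.
    "MRN": re.compile(r"\bMRN[:#]?\s*\d{6,10}\b", re.IGNORECASE),
}


def find_phi(text):
    """Return (label, matched_text, start, end) tuples sorted by position."""
    hits = []
    for label, pattern in PHI_PATTERNS.items():
        for match in pattern.finditer(text):
            hits.append((label, match.group(), match.start(), match.end()))
    return sorted(hits, key=lambda hit: hit[2])


def redact(text):
    """Replace every matched span with a bracketed label such as [SSN]."""
    for label, pattern in PHI_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


note = ("Pt seen 03/14/2023, MRN: 00123456. "
        "Call 555-123-4567 or jane.doe@example.org. SSN 123-45-6789.")
print(redact(note))
```

Keeping detection (`find_phi`) separate from transformation (`redact`) makes it easier to log what was found and to swap redaction for another method later.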

An array of transformation methods - masking, redaction, pseudonymization, generalization, and tokenization - should be available. For research purposes, consistent pseudonyms or tokens allow patient data to be tracked over time without compromising privacy. For broader data sharing, techniques like k-anonymity ensure individual records blend into larger groups. Vendors should also be able to share published validation results, ideally showing PHI detection recall above 99% on large, representative datasets.
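A few of these transformations can be sketched with the Python standard library. The example below is illustrative and assumes a keyed HMAC-SHA256 as the tokenization scheme, a 10-year age band, and year-only dates; the function names and the key are hypothetical, and a real deployment would keep the key in a managed secret store.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes, length: int = 16) -> str:
    """Keyed, deterministic token: the same input and key give the same token."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]


def mask(value: str, visible: int = 4, fill: str = "*") -> str:
    """Hide all but the last `visible` characters."""
    if len(value) <= visible:
        return fill * len(value)
    return fill * (len(value) - visible) + value[-visible:]


def generalize_age(age: int) -> str:
    """Bucket ages into 10-year bands; ages 90 and over share one group."""
    if age >= 90:
        return "90+"
    low = (age // 10) * 10
    return f"{low}-{low + 9}"


def generalize_date(iso_date: str) -> str:
    """Keep only the year from a YYYY-MM-DD date string."""
    return iso_date[:4]


key = b"example-key-do-not-use"  # placeholder; never hard-code a real key
record = {"patient_id": "P-000123", "age": 47, "visit_date": "2023-03-14"}
deidentified = {
    "patient_token": pseudonymize(record["patient_id"], key),
    "age_band": generalize_age(record["age"]),
    "visit_year": generalize_date(record["visit_date"]),
}
print(deidentified)
```

Because the token is deterministic for a given key, the same patient maps to the same token across visits, which is what makes longitudinal tracking possible without exposing the original identifier.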

Security and Governance Standards

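Technical strength alone isn’t enough; robust security measures are equally critical. HIPAA’s Security Rule lists encryption of PHI in transit and at rest as an addressable safeguard rather than mandating specific algorithms, but in practice any system handling PHI should encrypt data in transit (via protocols like TLS 1.2 or higher) and at rest (using methods such as AES-256). Features like role-based access control, single sign-on (SSO), and multi-factor authentication (MFA) are essential to prevent unauthorized access. Segregation of duties, for example separating the staff who configure de-identification rules from those who approve data releases, limits the damage any single account can do.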

Audit logging is another key requirement. Logs should document every access, configuration change, data export, and model execution involving PHI. These logs must include details like user identity, timestamps, datasets, and actions taken, and they must be tamper-evident and retained according to organizational policies. Vendors handling PHI must also sign a Business Associate Agreement (BAA), which outlines data usage limits, breach notification timelines, subcontractor responsibilities, and security obligations. Vendors unwilling to sign a BAA or unclear about their PHI practices pose serious compliance risks.
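One common way to make a log resistant to silent edits is to chain entries by hash, so that changing any earlier entry invalidates every later one. The sketch below assumes an in-memory list and hypothetical field names; it illustrates the idea rather than a complete logging system, which would also need durable, access-controlled storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _entry_hash(body: dict) -> str:
    """Hash a canonical JSON encoding of the entry body."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log: list, user: str, action: str, dataset: str, timestamp: str) -> dict:
    """Append an entry that commits to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {
        "user": user,
        "action": action,
        "dataset": dataset,
        "timestamp": timestamp,
        "prev_hash": prev_hash,
    }
    entry = dict(body, hash=_entry_hash(body))
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash and check that each entry links to its predecessor."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _entry_hash(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "analyst_1", "export", "notes_2023_q1", "2023-04-01T09:00:00Z")
append_entry(audit_log, "admin_2", "config_change", "deid_rules", "2023-04-01T09:05:00Z")
print(verify_chain(audit_log))
```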

Integration with Healthcare Risk Management

Even with strong technical and security measures, de-identification tools must fit into a broader risk management strategy. These tools don’t operate in isolation - they are part of a larger ecosystem that includes clinical systems, analytics platforms, and third-party services. Organizations should integrate these tools into their enterprise risk management programs, using documented risk assessments, threat models, and data-flow diagrams. Specialized platforms like Censinet RiskOps™ can streamline this process by offering tools for third-party and enterprise risk assessments, cybersecurity benchmarking, and collaborative issue resolution, making it easier to manage AI de-identification workflows.

Ongoing validation is crucial to ensure effectiveness over time. Organizations should regularly sample and review de-identified data to check for missed PHI (false negatives) and unnecessary over-redaction (false positives) that could impact data usability. Metrics like PHI detection rates, manual correction frequency, and the time taken to fix model issues should be monitored. Additionally, change-management protocols should govern updates to models and rules, requiring thorough testing with validation datasets and formal approval from privacy or security committees before deployment. This continuous oversight ensures that AI de-identification tools stay effective as data patterns shift and regulations evolve.
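The change-management step can be expressed as a simple release gate that compares a candidate model's validation metrics against the current baseline. The thresholds and names below (`ValidationResult`, `approve_release`, a 0.99 recall floor, a 0.02 precision tolerance) are assumptions for illustration; each organization would set its own limits and still require human sign-off.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidationResult:
    recall: float     # share of true PHI spans that were detected
    precision: float  # share of detected spans that were truly PHI


def approve_release(baseline, candidate, min_recall=0.99, max_precision_drop=0.02):
    """Return (approved, reasons); any reason listed blocks the release."""
    reasons = []
    if candidate.recall < min_recall:
        reasons.append(f"recall {candidate.recall:.3f} is below the floor {min_recall:.3f}")
    if candidate.recall < baseline.recall:
        reasons.append("recall regressed against the baseline (more missed PHI)")
    if baseline.precision - candidate.precision > max_precision_drop:
        reasons.append("precision dropped beyond tolerance (more over-redaction)")
    return (not reasons, reasons)


baseline = ValidationResult(recall=0.995, precision=0.95)
candidate = ValidationResult(recall=0.97, precision=0.90)
approved, reasons = approve_release(baseline, candidate)
print(approved, reasons)
```

Returning the reasons alongside the decision gives the privacy or security committee a record of why a change was blocked.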

Top AI Tools for HIPAA-Compliant Data De-Identification

Comparison of 5 HIPAA-Compliant AI De-Identification Tools for Healthcare

With the increasing need for secure handling of healthcare data, several platforms now combine AI-powered PHI detection with essential security and governance features. These tools cater to different needs, from large-scale data discovery to advanced statistical modeling for research. Choosing the right platform depends on your organization's data types, volumes, and compliance goals.

Below are some standout tools that integrate HIPAA-compliant safeguards with advanced de-identification capabilities.

iMerit Medical Data De-Identification

iMerit leverages pre-trained NLP models tailored for healthcare-specific language to detect and remove PHI from clinical documents, imaging metadata, and research files. The platform targets the HIPAA-defined identifiers and adds a human-in-the-loop process for high-stakes use cases: trained specialists review and refine the AI's output, reducing the chance that PHI slips through before the data is cleared for downstream use.

This blend of automation and expert oversight is especially valuable for organizations preparing large datasets for AI training or research, where both speed and precision are critical. iMerit also supports automated workflows, enabling seamless processing of clinical notes or studies without exposing sensitive information. This dual approach of automation and human validation aligns with the strict governance standards required for compliance.

BigID

BigID employs a discovery-first strategy, using AI to scan and classify PHI across databases, data lakes, SaaS platforms, and other environments. It automatically identifies sensitive information like Social Security numbers, medical record numbers, and free-text PHI, creating a unified catalog for de-identification workflows.

With its policy-driven governance, BigID ensures that rules - such as preventing PHI from leaving a virtual private cloud without de-identification - are consistently enforced. Teams can define workflows to mask, tokenize, or redact sensitive data before it reaches analytics platforms. For health systems managing multi-cloud environments, BigID offers the clarity to locate PHI, assess its protection status, and maintain compliance. Its data cataloging capabilities also provide a foundation for more advanced risk-based de-identification methods.
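To make the idea of policy-driven enforcement concrete, the sketch below checks an export request against a data catalog and blocks it if any column classified as PHI lacks a transformation. This is a generic illustration, not BigID's API: the classification labels, the `check_export` function, and the dictionary shapes are all hypothetical.

```python
# Hypothetical classification labels that a discovery scan might assign.
PHI_CLASSES = {"ssn", "mrn", "name", "free_text"}
ALLOWED_TRANSFORMS = {"mask", "tokenize", "redact"}


def check_export(catalog: dict, transforms: dict) -> list:
    """Return the PHI-classified columns that have no approved transformation."""
    return sorted(
        column
        for column, classification in catalog.items()
        if classification in PHI_CLASSES
        and transforms.get(column) not in ALLOWED_TRANSFORMS
    )


catalog = {
    "patient_name": "name",
    "mrn": "mrn",
    "clinical_note": "free_text",
    "lab_value": "numeric",
}
requested = {"patient_name": "redact", "mrn": "tokenize"}

violations = check_export(catalog, requested)
if violations:
    print("Export blocked; untransformed PHI columns:", violations)
```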

Privacy Analytics by IQVIA

Privacy Analytics specializes in statistical, risk-based de-identification, following HIPAA's Expert Determination method. Instead of simply removing standard fields, it quantifies and controls the likelihood of re-identification. The platform evaluates quasi-identifiers - like age, geography, and diagnosis codes - and applies techniques such as generalization, suppression, and perturbation to meet a specific risk threshold.

This approach allows for more granular data retention compared to Safe Harbor methods. For instance, it can preserve details that Safe Harbor would strip, such as more precise dates or finer geographic detail, when the measured risk remains within acceptable limits. By maintaining greater data utility, Privacy Analytics supports research and AI model development while ensuring compliance. The platform also generates detailed documentation of its risk assessments, making it easier for healthcare organizations to share data with partners while staying defensible under HIPAA.
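A simplified version of risk-based generalization can be sketched as follows. It groups records by their quasi-identifiers, treats the worst-case risk as 1 divided by the smallest group size and the average risk as the number of groups divided by the number of records, and then widens age bands until the worst-case risk falls under a threshold. These are common textbook simplifications, not a description of Privacy Analytics' methodology, and the function names and threshold are assumptions.

```python
from collections import Counter


def risk_summary(records, quasi_identifiers):
    """Estimate re-identification risk from equivalence-class sizes."""
    classes = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    smallest = min(classes.values())
    return {
        "max_risk": 1 / smallest,                 # risk for the most exposed record
        "avg_risk": len(classes) / len(records),  # average across all records
    }


def band_age(record, width):
    """Return a copy of the record with age replaced by a band of the given width."""
    low = (record["age"] // width) * width
    return {**record, "age": f"{low}-{low + width - 1}"}


def generalize_until(records, quasi_identifiers, threshold, widths=(5, 10, 20)):
    """Try progressively wider age bands until max_risk meets the threshold."""
    for width in widths:
        candidate = [band_age(r, width) for r in records]
        if risk_summary(candidate, quasi_identifiers)["max_risk"] <= threshold:
            return width, candidate
    return None, records  # no tested width was enough; other fields need work


patients = [{"age": a, "zip3": "021"} for a in (41, 44, 47, 52, 58, 55)]
qi = ["age", "zip3"]
print(risk_summary(patients, qi))
width, generalized = generalize_until(patients, qi, threshold=0.34)
print(width, risk_summary(generalized, qi))
```

The loop stops at the narrowest band that meets the threshold, which mirrors the goal of keeping as much detail as the risk budget allows.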

Amnesia

Developed at the Athena Research Center in Greece, Amnesia is an open-source tool designed for anonymizing structured data. It uses methods like masking, pseudonymization, and k-anonymity to protect patient privacy. With k-anonymity, for example, records are grouped so that any combination of quasi-identifiers appears in at least k records, reducing the risk of singling out individuals.
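The k-anonymity property itself is easy to check on a small table. The sketch below is independent of Amnesia and uses hypothetical function names: it measures the smallest group size for a chosen set of quasi-identifiers and, as one simple remedy, suppresses records whose group is smaller than k.

```python
from collections import Counter


def _group_key(record, quasi_identifiers):
    return tuple(record[q] for q in quasi_identifiers)


def k_anonymity(records, quasi_identifiers):
    """Return the smallest group size, i.e. the k that the table satisfies."""
    if not records:
        return 0
    counts = Counter(_group_key(r, quasi_identifiers) for r in records)
    return min(counts.values())


def suppress_small_groups(records, quasi_identifiers, k):
    """Drop records whose quasi-identifier combination occurs fewer than k times."""
    counts = Counter(_group_key(r, quasi_identifiers) for r in records)
    return [r for r in records if counts[_group_key(r, quasi_identifiers)] >= k]


table = [
    {"age_band": "40-49", "zip3": "021", "diagnosis": "E11"},
    {"age_band": "40-49", "zip3": "021", "diagnosis": "I10"},
    {"age_band": "40-49", "zip3": "021", "diagnosis": "J45"},
    {"age_band": "50-59", "zip3": "021", "diagnosis": "E11"},
]
qi = ["age_band", "zip3"]

print(k_anonymity(table, qi))
released = suppress_small_groups(table, qi, k=2)
print(len(released), k_anonymity(released, qi))
```

Suppression trades completeness for safety; generalizing the quasi-identifiers further is the usual alternative when too many records would be dropped.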

Amnesia is particularly suited for smaller research teams or practices. It allows users to export datasets, apply anonymization techniques, and share them securely with collaborators. Its free, user-friendly interface makes it an excellent choice for pilot studies, IRB-approved research, or organizations with tight budgets.

Protecto AI

Protecto AI offers flexible de-identification pipelines, supporting Safe Harbor-style redaction and advanced privacy-preserving techniques like tokenization, masking, and noise injection. The platform integrates seamlessly with data repositories, ensuring PHI is de-identified before analysts access it.
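As an illustration of noise injection, the sketch below adds Laplace noise to an aggregate count, the mechanism commonly used in differential privacy. This is a named substitute chosen for the example, not a description of how Protecto AI implements noise; the function names and the `epsilon` value are assumptions.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Add Laplace noise with scale 1/epsilon (a simple count has sensitivity 1)."""
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(0)  # seeded only so the example is repeatable
released = noisy_count(true_count=100, epsilon=1.0, rng=rng)
print(round(released, 2))
```

Smaller `epsilon` values add more noise and give stronger privacy at the cost of accuracy; the right setting depends on how the released figures will be used.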

Protecto AI is part of a newer wave of tools designed for "privacy for AI", focusing on securing training data for generative AI and large language models. By masking PHI before it leaves an organization's perimeter, Protecto helps healthcare organizations maintain compliance while preparing data for advanced analytics and AI applications.


Governance and Continuous Oversight for AI De-Identification

AI de-identification demands ongoing monitoring, as shifting risks and changes in data usage can jeopardize the protection of Protected Health Information (PHI). Under HIPAA's Expert Determination pathway, risk assessments depend on context and should be revisited periodically to account for evolving data-sharing practices and newly available external datasets. AI models, in particular, can experience "drift" as they are retrained or as input data changes over time, potentially reducing the accuracy of PHI detection or leading to unnecessary redactions. These challenges highlight the need for robust and continuous oversight.

Continuous Validation of De-Identification Models

To maintain effectiveness, de-identification models require regular validation. This typically involves benchmark testing to measure PHI detection performance - evaluating precision, recall, and F1 scores - using labeled test datasets that encompass U.S. clinical text, imaging metadata, and structured Electronic Health Record (EHR) fields. Organizations should also perform scenario-based testing to address high-risk edge cases, such as rare identifiers, small population data, and longitudinal linkages that could increase re-identification risks under HIPAA's Expert Determination standard. Some systems even incorporate adversarial testing to challenge the models’ ability to mitigate re-identification threats.
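The benchmark metrics mentioned above can be computed directly from labeled and predicted PHI spans. The sketch below assumes spans are `(start, end, label)` tuples and counts only exact matches as correct; real evaluations often also report partial-overlap and per-category scores. The function name is hypothetical.

```python
def score_spans(gold: set, predicted: set) -> dict:
    """Exact-match precision, recall, and F1 over (start, end, label) spans."""
    true_pos = len(gold & predicted)
    false_pos = len(predicted - gold)  # over-redaction
    false_neg = len(gold - predicted)  # missed PHI
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "missed": sorted(gold - predicted),
    }


gold = {(8, 18, "DATE"), (20, 33, "MRN"), (40, 52, "PHONE"), (56, 76, "EMAIL")}
predicted = {(8, 18, "DATE"), (20, 33, "MRN"), (40, 52, "PHONE"), (90, 95, "NAME")}
print(score_spans(gold, predicted))
```

For de-identification, recall usually matters most because each false negative is PHI left in the output, so listing the missed spans is often more useful than the headline score.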

Large healthcare organizations often align their validation schedules with their risk tolerance. This might include quarterly or semiannual formal validations, as well as checks following any significant model or rule changes. These validations are documented for compliance audits. Additionally, organizations must track performance metrics and risk factors, including:

- Audit logs detailing who accessed de-identification tools and what datasets were processed.

- Incident records to document any PHI "leakage" events.

- Utility metrics to ensure that de-identification measures don’t unintentionally degrade data quality.

By combining this evidence with Business Associate Agreements (BAAs) and clear policies, organizations can demonstrate compliance with HIPAA's Safe Harbor or Expert Determination standards.

The Role of Risk Management Platforms

Risk management platforms, such as Censinet RiskOps™, play a key role in supporting AI de-identification governance. These platforms centralize risk management for healthcare organizations and provide standardized assessments to evaluate AI vendors. They examine vendors' HIPAA compliance, de-identification methods, validation practices, BAAs, and incident response protocols.

Censinet RiskOps™ tracks risks tied to PHI handling, data residency, access controls, and AI model performance across clinical applications, medical devices, and supply chain systems. Organizational policies - such as requirements for Expert Determination, encryption standards, and audit logging - are embedded into the platform’s workflows. This ensures that new AI de-identification tools cannot be deployed unless they meet the organization’s predefined controls. The platform also schedules regular reassessments, monitors remediation efforts, and alerts stakeholders to changes like new sub-processors, major model updates, or regulatory shifts.

Integration with Broader Cybersecurity Strategies

From a cybersecurity perspective, AI de-identification should integrate seamlessly into secure data pipelines. This includes using network segmentation, strict access controls, and comprehensive encryption measures. Security operations centers should actively monitor access to de-identification systems and datasets, looking for unusual activity that might signal attempts to re-identify individuals.

De-identification practices should also align with zero-trust architectures, ensuring that even de-identified datasets require authentication, authorization, and continuous monitoring. This approach acknowledges that de-identified data can still carry risks in certain contexts.

Incident response plans must explicitly address potential PHI exposure due to de-identification failures. These plans should include:

- Detection protocols to identify datasets that may still contain PHI.

- Triage and containment measures, such as revoking access to compromised datasets.

- Forensic analysis to determine whether the dataset meets HIPAA’s de-identification standards.

- Notification workflows for privacy officers and regulators, supported by detailed audit logs.

- Corrective actions, including retraining models, updating rules, enhancing validation processes, and reassessing vendor compliance.

Conclusion

AI-driven de-identification plays a crucial role for U.S. healthcare organizations aiming to tap into the potential of patient data while staying HIPAA-compliant. By automating the identification and removal of PHI in both structured EHR fields and unstructured clinical notes, these tools offer unmatched scalability, consistency, and speed compared to manual processes. As data volumes grow and use cases expand - from research to AI model training and population health analytics - this capability becomes increasingly important.[1][4]

The tools highlighted in this review - iMerit, BigID, Privacy Analytics, Amnesia, and Protecto AI - each address part of the Safe Harbor or Expert Determination workflow, aim to keep data useful, and are designed to fit into healthcare IT systems. However, technology alone isn’t enough. De-identification tools work best inside a robust risk management framework, supported by platforms like Censinet RiskOps™. Incorporating these tools into existing frameworks ensures that PHI handling, vendor oversight, audit logging, and ongoing validation work together to minimize re-identification risks over time.

Looking ahead, de-identification practices will need to keep pace with new data types and new re-identification techniques. Reliable de-identification is key to unlocking the potential of PHI for generative AI, data-sharing, and value-based care.[2][3] Organizations that treat de-identification as part of a broader risk management strategy - incorporating regular model validation, adversarial testing, and integration with specialized platforms - will be better equipped to drive innovation, enhance patient outcomes, and maintain public trust while adhering to HIPAA’s stringent requirements.

FAQs

What should healthcare organizations consider when choosing an AI tool for de-identifying patient data?

When choosing an AI tool to de-identify patient data, healthcare organizations should focus on solutions tailored specifically for the healthcare sector. It's crucial to ensure the tool complies with HIPAA and other relevant regulations, integrates smoothly with current workflows, and effectively reduces risks tied to protected health information (PHI). Also, prioritize tools that have been tested and proven through real-world applications and industry case studies. This helps confirm their reliability and effectiveness in delivering consistent results.

How do AI tools help meet HIPAA's Safe Harbor and Expert Determination standards for de-identifying data?

AI tools make it easier to meet HIPAA's Safe Harbor and Expert Determination standards by automating the often complicated process of de-identifying data. For Safe Harbor compliance, these tools efficiently locate and remove the 18 specific identifiers necessary to protect patient privacy.

When it comes to Expert Determination, AI tools support professionals by analyzing datasets, evaluating the risks of re-identification, and offering insights to ensure those risks stay minimal. By combining automation with advanced analytics, these tools simplify the process and help organizations better protect patient information.

What steps are needed to ensure AI de-identification tools stay effective over time?

To maintain the effectiveness of AI de-identification tools, it's crucial to conduct regular risk assessments and update algorithms to adapt to evolving data patterns and regulatory changes. Consistent testing and validation ensure that patient information remains de-identified while still being functional for its intended purposes.

Adding real-time monitoring and feedback systems can also play a key role. These tools can swiftly detect and address any potential risks of re-identification. By taking these proactive steps, healthcare organizations can stay compliant with HIPAA regulations while safeguarding sensitive patient data.