The New Risk Frontier: Navigating AI Uncertainty in an Automated World

Post Summary

Prompt injection attacks can expose PHI or manipulate clinical AI outputs during real‑time care.

Employees use unapproved generative tools that bypass security controls and violate HIPAA requirements.

Corrupted or biased data creates flawed models that produce unsafe or discriminatory clinical recommendations.

Attackers use AI to automate reconnaissance, craft targeted phishing, and exploit vulnerabilities more quickly.

Over 80% of stolen PHI comes from vendor breaches, and AI vendors often lack healthcare‑specific compliance expertise.

Autonomous models conceal bias, produce opaque decisions, and create compliance liabilities unless clinicians stay involved.

AI is reshaping healthcare, but it comes with risks that demand attention now. From diagnostic tools to medical devices, AI systems are influencing critical decisions - and mistakes can have severe consequences. Cyberattacks, biased algorithms, and compliance challenges are just a few of the threats healthcare organizations face today.

Key takeaways:

AI in healthcare holds great promise, but without proper safeguards, it can compromise patient safety and trust. This article outlines practical strategies to identify, evaluate, and mitigate AI risks effectively.

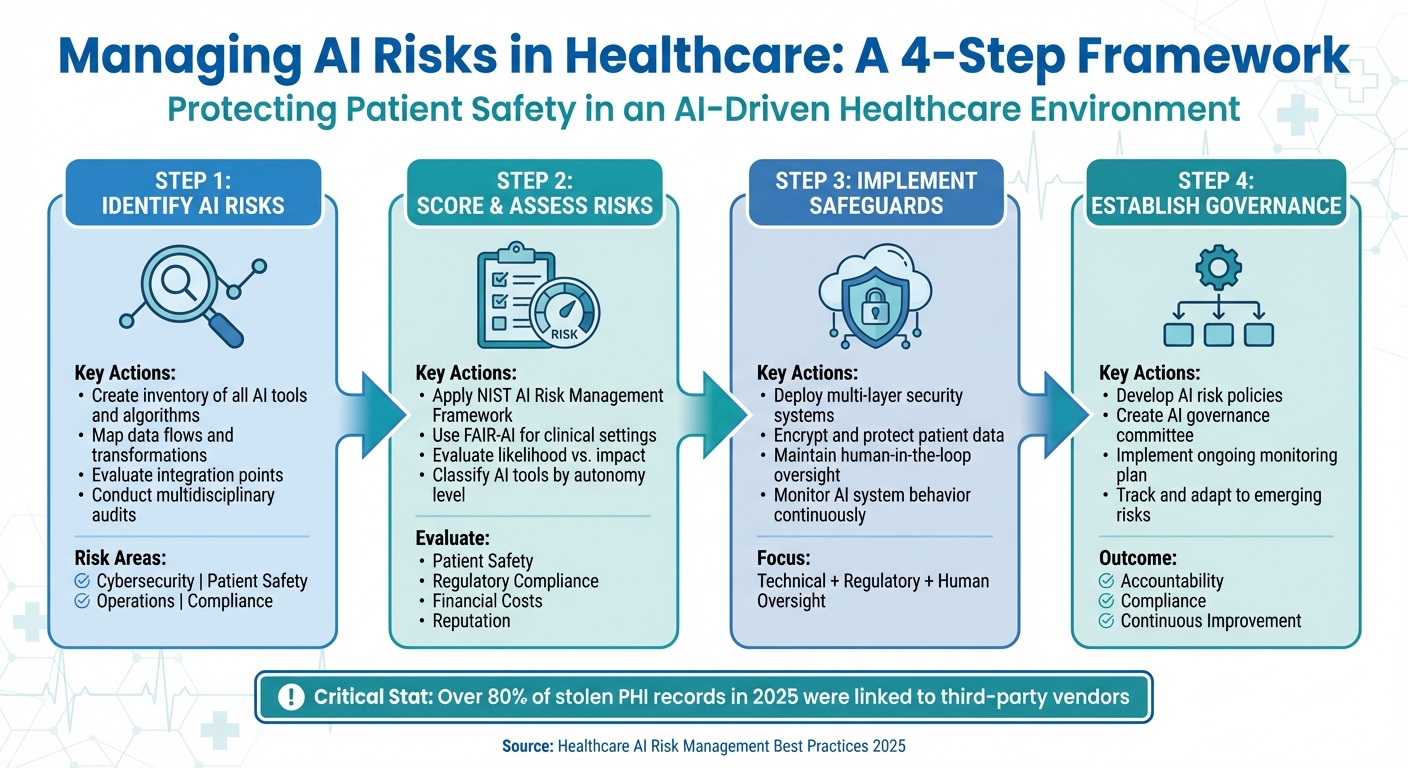

4-Step Framework for Managing AI Risks in Healthcare Organizations

Understanding AI Risks in Healthcare Systems

As AI continues to reshape healthcare, organizations are grappling with four major risk areas: cybersecurity breaches, patient safety issues, operational disruptions, and regulatory challenges. Patient safety is at stake when AI systems misdiagnose conditions, suggest inappropriate treatments, or fail during critical moments. Operational hiccups can arise from breakdowns in AI-driven processes like scheduling, supply chain management, or billing. On the regulatory front, evolving standards around AI transparency, data privacy, and accountability pose significant hurdles - especially with the HHS AI Strategy, introduced on December 4, 2025, which emphasizes governance and risk management to build public trust [8][9].

Types of AI Risks

These risks are often interconnected. For example, a cybersecurity breach targeting an AI diagnostic tool could corrupt data and endanger patient care simultaneously. Similarly, failures in AI-powered systems, such as medication dispensing, not only threaten patient safety but also trigger compliance issues. In 2025, the top concerns for healthcare systems include managing generative AI, fortifying cybersecurity, and adhering to federal regulations [10]. Below, we explore where these vulnerabilities surface within healthcare.

Where AI Vulnerabilities Exist

AI's integration into healthcare creates a broad "attack surface" across several critical areas:

Third-party risks amplify these challenges. Alarmingly, over 80% of stolen protected health information (PHI) records in 2025 were linked to third-party vendors, business associates, and external providers [5].

Current AI Threats for 2025–2026

The threat landscape in healthcare is evolving rapidly. Cybercriminals are now leveraging AI to enhance their attack strategies, making it harder for organizations to detect and respond effectively. At the same time, regulatory scrutiny is ramping up, pushing healthcare providers to navigate the dual challenge of defending against AI-driven threats while managing the risks posed by their own AI systems.

How to Identify and Score AI Risks

Identifying and scoring AI risks is a cornerstone of AI governance in healthcare. Organizations need to assess and quantify these risks systematically, and that starts with understanding the full scope of the AI ecosystem: cataloging every tool, algorithm, and data flow to gain a clear view of potential vulnerabilities.

Steps to Identify AI Risks

Start by creating a detailed inventory of all AI tools and algorithms used within your organization [14]. This registry should encompass everything from diagnostic systems to billing algorithms, ensuring each tool’s decisions can be traced and explained. Mapping the data flow is equally important - track the origins, transformations, and uses of data to uncover potential biases and ensure transparency for audits and regulatory checks [14].
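As a minimal sketch of what such a registry might look like, the Python below models one inventory entry together with the data flows feeding it, and lists tools whose data flow has not been mapped yet. The class names, field names, and example tools are hypothetical illustrations, not a schema taken from any cited framework.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DataFlow:
    """One hop of data into or out of an AI tool (hypothetical schema)."""
    source: str          # where the data originates, e.g. "PACS"
    transformation: str  # what happens to it on the way
    destination: str     # where the data is used


@dataclass
class AITool:
    """One entry in the AI inventory (hypothetical schema)."""
    name: str
    purpose: str
    owner: str
    vendor: Optional[str] = None
    handles_phi: bool = False
    data_flows: List[DataFlow] = field(default_factory=list)


def tools_missing_data_flows(inventory: List[AITool]) -> List[str]:
    """Return the names of tools whose data flow has not been mapped."""
    return [tool.name for tool in inventory if not tool.data_flows]


# Example entries; names and values are made up for illustration.
inventory = [
    AITool(
        name="radiology-triage",
        purpose="Prioritize imaging studies for review",
        owner="Radiology",
        vendor="ExampleVendor",
        handles_phi=True,
        data_flows=[DataFlow("PACS", "image normalization", "triage model")],
    ),
    AITool(name="billing-coder", purpose="Suggest billing codes", owner="Finance"),
]

print(tools_missing_data_flows(inventory))  # ['billing-coder']
```

Keeping the data flows on the same record as the tool makes it easy to spot entries that cannot yet be traced for an audit.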

Next, evaluate all points where AI integrates into your operations, such as radiology systems or patient engagement platforms, to uncover weak spots [12]. Use vendor-provided data to identify biases and limitations, but don’t stop there - validate this information with your own data and maintain post-deployment monitoring [13]. Multidisciplinary audits are also key. Bring together clinical, technical, legal, and compliance experts to review how AI is being used, its impact, and any vulnerabilities [11][14].

"The healthcare organizations that avoid the big headlines aren't lucky – they're intentional. They've made AI governance part of their everyday risk and compliance program"


Once risks are identified, structured frameworks are essential for assessing their severity.

Frameworks for Scoring AI Risks

The NIST AI Risk Management Framework (AI RMF) offers a voluntary, consensus-driven method for managing AI risks, addressing areas like fairness, bias, and security [15][16]. Its companion resources include a Playbook and a Generative AI Profile, the latter focused specifically on the challenges posed by generative AI systems. For healthcare-specific needs, the Framework for the Appropriate Implementation and Review of AI (FAIR-AI) provides a tailored, two-step process designed for clinical settings. These frameworks assess risks by weighing their likelihood against their impact, recognizing that AI requires ongoing oversight due to its evolving nature [1].
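To make the likelihood-versus-impact weighing concrete, here is a small Python sketch of a scoring matrix. The 1-to-5 scales, the multiplication rule, and the band cut-offs are illustrative assumptions; neither the NIST AI RMF nor FAIR-AI prescribes these specific numbers.

```python
def risk_score(likelihood: int, impact: int) -> int:
    """Multiply likelihood by impact, each rated 1 (lowest) to 5 (highest)."""
    for name, value in (("likelihood", likelihood), ("impact", impact)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
    return likelihood * impact


def risk_band(score: int) -> str:
    """Map a score to a band; the cut-offs are assumptions for this sketch."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


# Hypothetical risks with (likelihood, impact) ratings.
risks = {
    "prompt injection in clinical summarizer": (3, 5),
    "scheduling model outage": (2, 3),
}

for name, (likelihood, impact) in risks.items():
    score = risk_score(likelihood, impact)
    print(f"{name}: score={score}, band={risk_band(score)}")
```

Because AI systems change over time, scores like these would need to be recalculated on a schedule rather than assigned once.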

Risk Factors to Evaluate

When scoring AI risks, focus on four key dimensions:

Also, examine system-level vulnerabilities, such as outdated software or poor interoperability, which could jeopardize patient safety on a larger scale [1]. Classify AI tools based on their level of autonomy to match oversight requirements with the associated risks [2]. Use incident reporting systems to log algorithm malfunctions, unexpected outcomes, or patient complaints - these reports can reveal patterns or systemic flaws that need addressing [12][13]. By evaluating these factors, healthcare organizations can develop stronger strategies to mitigate risks and enhance governance.
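The sketch below illustrates two of the ideas above in Python: mapping an autonomy tier to a minimum level of oversight, and counting logged incidents per tool to surface patterns. The tier names, oversight descriptions, and incident records are all hypothetical.

```python
from collections import Counter
from enum import Enum


class Autonomy(Enum):
    ASSISTIVE = 1   # suggests only; a human makes every decision
    SUPERVISED = 2  # acts, but a human approves each action
    AUTONOMOUS = 3  # acts without per-action approval


# Hypothetical mapping from autonomy tier to minimum oversight.
OVERSIGHT = {
    Autonomy.ASSISTIVE: "periodic audit",
    Autonomy.SUPERVISED: "clinician sign-off on each output",
    Autonomy.AUTONOMOUS: "clinician sign-off plus continuous monitoring",
}


def required_oversight(level: Autonomy) -> str:
    """Look up the minimum oversight for a given autonomy tier."""
    return OVERSIGHT[level]


# Hypothetical incident log entries: (tool name, incident type).
incidents = [
    ("sepsis-alert", "unexpected outcome"),
    ("sepsis-alert", "malfunction"),
    ("billing-coder", "patient complaint"),
]

# Counting incidents per tool highlights where problems cluster.
incidents_per_tool = Counter(tool for tool, _ in incidents)

print(required_oversight(Autonomy.AUTONOMOUS))
print(incidents_per_tool.most_common(1))  # [('sepsis-alert', 2)]
```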

How to Reduce AI Risks in Healthcare

Once AI risks are assessed, the next step is to act. Reducing these risks in healthcare demands a well-rounded strategy that combines technical safeguards, adherence to regulations, and thoughtful human oversight. This approach ensures patient safety and reinforces trust in AI-driven systems by addressing vulnerabilities across technical, regulatory, and operational dimensions.

Multi-Layer Security for AI Systems

Securing AI systems in healthcare means employing multiple layers of protection and staying up-to-date with cutting-edge security protocols. For instance, the Health Sector Coordinating Council (HSCC) is preparing guidance for 2026 that prioritizes secure-by-design principles for medical devices and emphasizes stronger third-party risk management practices [2]. Blockchain technology is another promising tool, offering decentralized, transparent, and immutable data security solutions [1].

Protecting Patient Data and Meeting Compliance Requirements

AI systems in healthcare handle highly sensitive patient information, making robust data protection essential. Techniques like data minimization help limit exposure during breaches and simplify compliance with regulations like HIPAA. Additional safeguards, such as strong encryption, strict access controls, and regular data audits, are critical to securing patient information and ensuring compliance.
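Data minimization can be sketched as an allow-list applied before a record reaches an AI system. In the Python below, the field names and the allow-list are hypothetical. This kind of filtering is also not the same as HIPAA de-identification, since free-text fields can still contain identifiers.

```python
# Hypothetical allow-list: only the fields this particular AI task needs.
ALLOWED_FIELDS = frozenset({"age", "sex", "chief_complaint"})


def minimize(record: dict, allowed: frozenset = ALLOWED_FIELDS) -> dict:
    """Return a copy of the record containing only allow-listed fields."""
    return {key: value for key, value in record.items() if key in allowed}


# Made-up record for illustration.
record = {
    "name": "Jane Example",
    "mrn": "000000",
    "age": 54,
    "sex": "F",
    "chief_complaint": "chest pain",
}

print(minimize(record))
# {'age': 54, 'sex': 'F', 'chief_complaint': 'chest pain'}
```

An allow-list fails closed: a field added to the record later is excluded until someone decides the AI task needs it.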

However, AI systems also bring unique challenges like algorithmic opacity and new vulnerabilities that could compromise data privacy and informed consent [1]. Beyond technical defenses, human oversight plays a key role in ensuring clinical decisions remain sound and ethical.

Maintaining Human Oversight in AI Processes

Human involvement is indispensable to prevent over-reliance on AI and to uphold patient safety, especially in clinical settings [17][18][19][20]. AI should assist - not replace - clinical judgment. Systems designed with a "human-in-the-loop" approach allow experts to review AI-generated recommendations before they influence critical decisions, such as diagnoses or treatment plans.
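One way to picture a human-in-the-loop gate is a release step that refuses any recommendation lacking a clinician's sign-off. The Python below is a minimal sketch under that assumption. The class, function names, and sample data are hypothetical, and a real system would also need authentication and an audit trail.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    patient_id: str
    suggestion: str
    approved_by: Optional[str] = None  # clinician who signed off, if any


def approve(rec: Recommendation, clinician: str) -> Recommendation:
    """Record the reviewing clinician's sign-off on the recommendation."""
    rec.approved_by = clinician
    return rec


def release(rec: Recommendation) -> str:
    """Release a recommendation only after a clinician has approved it."""
    if rec.approved_by is None:
        raise PermissionError("recommendation has not been reviewed by a clinician")
    return f"{rec.suggestion} (approved by {rec.approved_by})"


draft = Recommendation(patient_id="demo-001", suggestion="Order follow-up imaging")

try:
    release(draft)  # blocked: nobody has reviewed it yet
except PermissionError as err:
    print(f"Blocked: {err}")

print(release(approve(draft, "Dr. Example")))
```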

When clinicians understand how AI systems arrive at their conclusions, they’re better equipped to identify errors and apply their expertise effectively [6][18][20]. Regular monitoring and auditing of AI behavior are equally important. These practices help detect any biases, anomalies, or performance issues early, ensuring AI systems continue to deliver high-quality care. By maintaining this level of oversight, healthcare providers can sustain patient trust while effectively managing risks.


Setting Up AI Governance in Healthcare

To minimize risks associated with AI in healthcare, organizations need a structured governance framework. This framework guides the use, monitoring, and control of AI systems, ensuring they are deployed responsibly and ethically. By addressing risks like bias, privacy issues, and safety concerns, such a framework supports ethical operations throughout the AI lifecycle - from design and procurement to deployment, ongoing use, and eventual decommissioning [21][22]. While technical assessments highlight potential risks, governance structures ensure these systems operate in compliance with ethical and regulatory standards. This foundation is essential for creating detailed policies and maintaining structured oversight.

Developing AI Risk Policies

The first step is to draft clear policies that outline acceptable AI practices, data handling guidelines, and the organization's risk tolerance. These policies should be tailored to the healthcare environment, focusing on how AI interacts with patient data, clinical workflows, and regulatory mandates [21][23]. They should define who has approval authority, establish security protocols, and lay out data protection measures. Assigning an executive sponsor to oversee AI governance can help align these efforts with the broader business strategy, ensuring resources are allocated effectively based on the balance of risks and benefits [23].

Creating AI Governance Committees

Forming a dedicated AI governance committee is crucial for maintaining oversight and ensuring responsible AI use. For instance, in 2025, a major university-affiliated hospital system in the U.S. created an AI governance committee after identifying organizational gaps through stakeholder interviews and co-design workshops. This committee, a subcommittee of the Digital Health Committee, brought together senior leaders with expertise in strategy, operations, clinical care, and informatics. It also included an ethics and legal subcommittee to address health equity concerns [24].

To address both technical and ethical challenges, the committee should include a diverse group of professionals: healthcare providers, AI experts, ethicists, legal advisors, patient representatives, data scientists, IT specialists, and clinical leaders [24][25]. Clear documentation of member expectations - such as time commitments, support staff availability, and compensation - helps set the stage for effective collaboration. The committee’s primary role is to oversee the ethical and responsible use of AI, while operational tasks like budgeting and clinical implementation remain the responsibility of individual departments.

Ongoing Monitoring and Risk Tracking

Once the governance structure is in place, continuous monitoring is essential to maintain ethical and effective AI use. Develop a monitoring plan that includes routine checks for data quality, algorithm performance, user satisfaction, biases, and ethical compliance [26]. A multidisciplinary team - comprising clinical, data, and administrative experts - should regularly audit AI systems to ensure they meet these standards.
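A routine performance and bias check could be as simple as comparing a current metric against a baseline for each patient subgroup. The Python sketch below assumes accuracy-style metrics where higher is better. The subgroup names, values, and the 0.05 tolerance are made up for illustration.

```python
from typing import Dict, List


def flag_degradation(
    baseline: Dict[str, float],
    current: Dict[str, float],
    tolerance: float = 0.05,
) -> List[str]:
    """Return subgroups whose metric fell more than `tolerance` below baseline.

    A subgroup missing from `current` is treated as 0.0, so it is flagged
    rather than silently skipped.
    """
    return sorted(
        group
        for group, base in baseline.items()
        if base - current.get(group, 0.0) > tolerance
    )


# Hypothetical validation-time and latest-period metrics.
baseline = {"overall": 0.91, "age_65_plus": 0.89, "pediatric": 0.90}
current = {"overall": 0.90, "age_65_plus": 0.81, "pediatric": 0.88}

print(flag_degradation(baseline, current))  # ['age_65_plus']
```

Checking subgroups separately matters because an overall metric can stay stable while performance for one population degrades.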

Create processes for collecting feedback from healthcare professionals and patients, with clear templates and roles for managing and addressing this input [26]. Establish mechanisms to share monitoring results with AI developers for timely updates and with end-users to foster trust. As new risks emerge, adapt your monitoring plan accordingly [26]. Tools like Censinet RiskOps can centralize AI-related policies, risks, and tasks, routing key findings to the appropriate stakeholders, including the AI governance committee. With real-time data aggregated in an intuitive risk dashboard, these platforms streamline oversight and decision-making processes.

Conclusion: Managing AI Risks in Healthcare

Addressing AI risks in healthcare demands a forward-thinking approach. Recent cyberattacks highlight a troubling trend: the majority of PHI breaches originate from vulnerabilities within third-party systems [5]. At the same time, attackers are increasingly using AI to exploit weaknesses in critical healthcare infrastructure at alarming speeds [5].

To navigate these challenges, healthcare organizations must anchor their strategies around three key elements: identifying and assessing risks comprehensively, implementing layered security measures with continuous monitoring, and establishing governance frameworks that promote accountability across the organization. Together, these steps help protect patient safety and ensure compliance in an era where AI-driven medical decisions introduce new security concerns.

When these components are integrated into a unified strategy, healthcare providers can respond more effectively to emerging threats while maintaining oversight. Centralized platforms like Censinet RiskOps play a crucial role by consolidating AI-related policies, risks, and tasks. These platforms equip governance teams with real-time dashboards, enabling them to make swift, well-informed decisions [4]. By breaking down silos and providing a single, reliable source of data, such tools streamline decision-making. They also translate cyber risks into financial terms that are clear to executives and boards, while automating compliance reporting for frameworks like NIST, CIS, and ISO [27].

As the healthcare sector faces ongoing cybersecurity challenges in 2025 and beyond [7], organizations that adopt AI-driven risk platforms, encourage collaboration across departments, and continuously adapt their strategies will be better prepared to protect patients, meet regulatory demands, and build enduring resilience. The real question is no longer whether action is necessary - it’s how quickly organizations can move from recognizing risks to implementing solutions. A cohesive approach transforms awareness into decisive, impactful action.

FAQs

What are the key AI risks healthcare organizations should watch out for?

Healthcare organizations face several challenges when incorporating AI into their operations. Among the most pressing are diagnostic errors stemming from flawed algorithms, bias in AI models that could result in unequal treatment, and data breaches that expose sensitive patient information. On top of that, system malfunctions can disrupt essential healthcare services.

There's also the danger of relying too heavily on AI without adequate human oversight, which can lead to flawed decision-making. Privacy violations and security gaps in outdated systems or third-party software further heighten the risks. Addressing these issues proactively is crucial to ensure AI is both safe and effective in healthcare settings.

What steps can healthcare providers take to manage and reduce AI-related risks?

Healthcare providers can tackle AI-related risks effectively with a well-organized risk management strategy. Key steps include performing regular AI audits, maintaining transparency in AI processes, and upgrading outdated systems to address potential weak points. Setting up clear governance protocols and embedding risk management practices throughout the AI lifecycle are equally important.

Taking proactive steps like real-time monitoring, using predictive analytics, and enforcing strict access controls allows for early detection and response to threats. Prioritizing human oversight, encouraging collaboration across various fields, and adhering to regulations like HIPAA further bolster these efforts. Together, these strategies not only minimize risks but also build trust and confidence in AI-powered healthcare solutions.

Why is human oversight essential in AI-powered healthcare systems?

Human oversight plays a critical role in AI-driven healthcare systems, ensuring precision, safety, and reliability. While AI has the potential to streamline processes and improve decision-making, it’s not without its flaws. Errors, biases, or security vulnerabilities can still arise, making human involvement essential for minimizing risks like misdiagnoses or breaches of sensitive data.

By closely monitoring AI systems, healthcare professionals can identify shortcomings, uphold accountability, and ensure that patient care adheres to ethical standards. Striking the right balance between AI capabilities and human expertise is crucial for building trust in AI-powered healthcare solutions.



Key Points:

Why are prompt injection attacks so dangerous in clinical workflows?

- Allow malicious prompts to override model safeguards

- Expose PHI by manipulating LLMs during summarization or treatment suggestions

- Trigger unauthorized actions within AI‑driven tools

- Complicate HIPAA compliance, even when breaches occur through vendors

- Increase legal exposure as regulators scrutinize AI‑related disclosures

What makes shadow AI uniquely risky for healthcare organizations?

- Unapproved AI tools operate outside IT visibility, creating blind spots

- PHI may be unknowingly uploaded to public LLMs

- Lack of audit trails undermines regulatory defensibility

- Biased, unvetted models can influence clinical outputs

- Unapproved use can violate BAAs and HIPAA, exposing organizations to penalties

How does data poisoning undermine AI reliability?

- Biases become embedded in model training

- Misdiagnoses and unsafe treatment recommendations occur

- Black‑box models hide root causes, worsening audit challenges

- Financial losses arise from repairing flawed systems

- Clinician trust erodes, disrupting workflow adoption

How is AI accelerating cyber threats in healthcare?

- Automates vulnerability scanning and attack targeting

- Improves phishing sophistication, increasing success rates

- Targets medical devices and EHR systems, amplifying patient safety risks

- Leaves gaps in insurance coverage due to AI‑related policy exclusions

- Creates cascading clinical disruptions with high financial impact

Why are third‑party AI tools and fourth‑party chains high‑risk?

- Over 80% of stolen PHI records are linked to third parties such as vendors and business associates

- Vendors may lack healthcare compliance knowledge, creating liability

- Fourth‑party subcontractors often go unmonitored

- Supply‑chain breaches spread rapidly through connected organizations

- Regulators are increasing scrutiny, raising operational stakes

What are the dangers of overreliance on autonomous AI without human oversight?

- Algorithmic bias directly impacts clinical outcomes

- Opaque decisions create compliance and liability issues

- No human validation increases risk of harmful recommendations

- Vendors may lack HIPAA expertise, transferring risk to providers

- Human‑in‑the‑loop models reduce risk while maintaining efficiency