The Process Optimization Paradox: When AI Efficiency Creates New Risks

Post Summary

- Key AI threats: data poisoning, model manipulation, AI-powered ransomware, medical-device vulnerabilities, and shadow AI.

- Why AI-driven attacks are more dangerous: they are faster, more adaptive, harder to detect, and can directly disrupt patient care.

- Where AI-enabled medical devices are weak: outdated software, network exposure, poor integration, and exploitable device firmware.

- Why the black box problem matters: lack of transparency makes informed consent, accountability, auditing, and HIPAA alignment more difficult.

- What reduced human oversight puts at risk: clinicians miss unusual cases, and organizations struggle to meet regulatory requirements without human validation.

- How Censinet RiskOps™ helps: it centralizes governance, automates evidence reviews, routes findings to human reviewers, and provides real-time AI risk dashboards.

Artificial intelligence (AI) is transforming healthcare by improving decision-making, reducing errors, and automating tasks. But this progress introduces risks that demand attention. Cybersecurity threats like data breaches, AI-powered ransomware, and vulnerabilities in medical devices are growing concerns. Additionally, the lack of transparency in AI systems creates challenges for compliance, trust, and accountability.

Balancing AI's potential with these risks is essential to protect patient safety, maintain trust, and meet regulatory requirements.

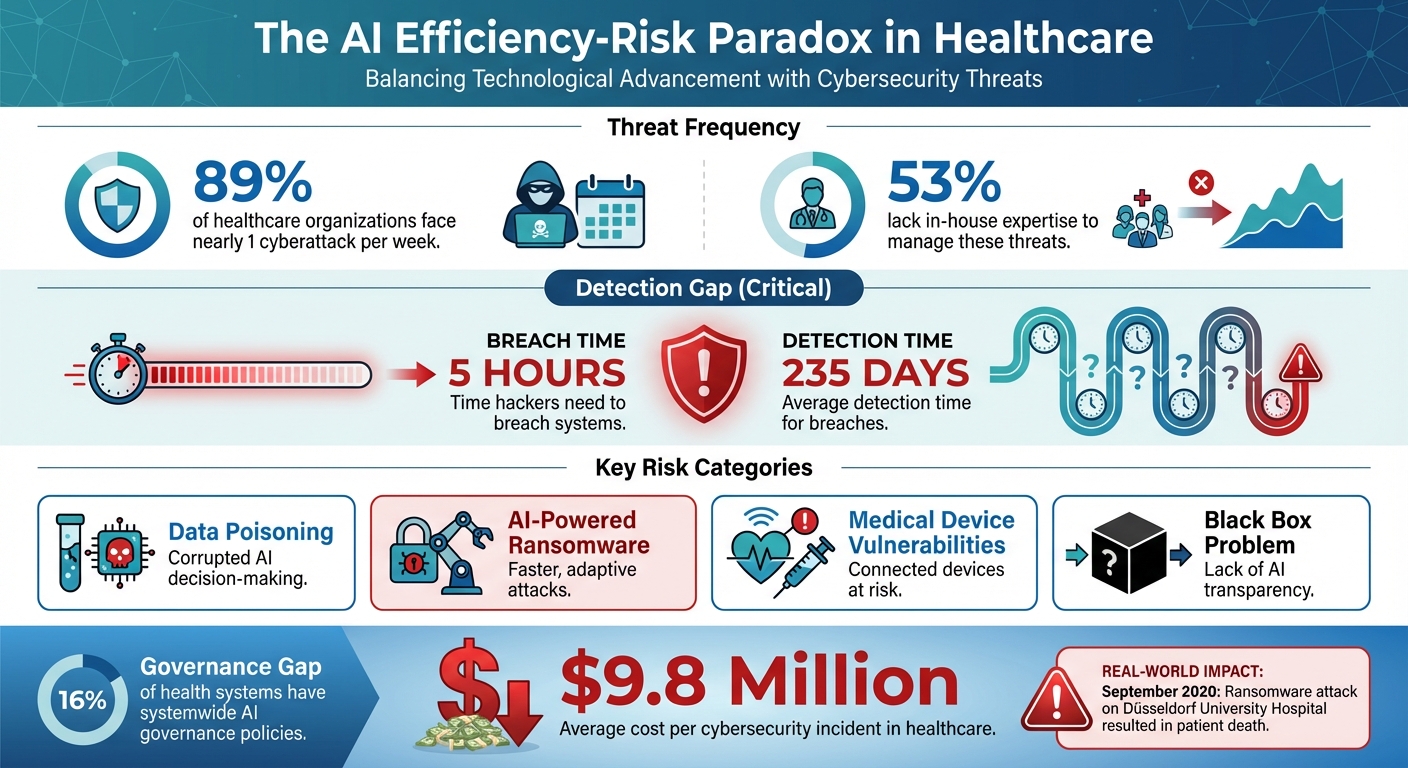

Healthcare AI Cybersecurity Statistics and Risk Impact

Cybersecurity Risks from AI in Healthcare

AI systems have revolutionized healthcare by streamlining operations and enhancing patient care. However, they also introduce new vulnerabilities, creating opportunities for cyberattacks that could jeopardize patient safety and the integrity of sensitive data. These risks extend beyond immediate threats, contributing to broader compliance and operational challenges that demand attention.

Data Poisoning and Model Manipulation

AI systems in healthcare rely on continuous learning, but this adaptability can be exploited. Attackers can inject malicious data or adversarial inputs into these systems, corrupting their decision-making processes and producing harmful outputs. In a healthcare setting, where AI plays a critical role in diagnoses and treatment recommendations, such "data poisoning" could lead to incorrect clinical decisions with potentially life-threatening consequences [3].
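To make the mechanism concrete, here is a minimal sketch that is not drawn from any real clinical system. It assumes a toy nearest-centroid classifier over a single hypothetical lab value; the function names, labels, and numbers are all illustrative. It shows how a few mislabeled records injected into the training set can flip the prediction for a borderline patient.

```python
from statistics import mean

def train_centroids(records):
    """Return the mean feature value per label (a nearest-centroid model)."""
    by_label = {}
    for value, label in records:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}

def predict(centroids, value):
    """Assign the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

# Hypothetical clean training data: (lab value, label)
clean = [(1.0, "normal"), (1.2, "normal"), (0.8, "normal"),
         (3.0, "abnormal"), (3.2, "abnormal"), (2.8, "abnormal")]

# Hypothetical attack: high readings injected with the wrong label
poison = [(4.0, "normal"), (4.2, "normal"), (3.8, "normal")]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

borderline = 2.6
print(predict(clean_model, borderline))     # abnormal
print(predict(poisoned_model, borderline))  # normal
```

In this toy setup, the three poisoned records drag the "normal" centroid from 1.0 to 2.5, so a reading of 2.6 that was previously flagged is now waved through. Real models are far more complex, but the failure mode is the same in kind.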

AI-Powered Ransomware and Shadow AI

Cybercriminals are increasingly using AI to supercharge ransomware attacks. By targeting high-value systems like electronic health records (EHRs) and imaging servers, attackers can maximize disruption. These AI-driven assaults are faster, more adaptive, and harder to detect than traditional methods [2][3].

The stakes are already visible with conventional ransomware. In September 2020, an attack on Düsseldorf University Hospital disrupted emergency care, and a patient whose treatment was delayed died [1].

Adding to the complexity is the rise of "shadow AI": unauthorized AI tools operating outside an organization's oversight. These unsanctioned systems create blind spots in security, offering attackers new avenues to exploit. Together, these developments highlight the growing sophistication of cyber threats in healthcare.

Security Gaps in AI-Enabled Medical Devices

AI-powered medical devices, like pacemakers and insulin pumps, are another weak link in healthcare cybersecurity. Their network connectivity makes them vulnerable to attacks that could alter dosages or disrupt functionality [1]. In a hospital's interconnected IT ecosystem, a single vulnerability - whether from outdated software or insecure system integration - can compromise the entire network [1].

The consequences of these breaches are severe. Ransomware and Denial of Service (DoS) attacks can halt critical operations, endangering patient care. On average, the healthcare industry faces a staggering $9.8 million cost per cybersecurity incident [5]. These risks underscore the urgent need for robust security measures to protect both patients and healthcare infrastructure.

Compliance and Operational Challenges with AI

AI integration in healthcare isn't just about addressing cybersecurity threats - it's also about navigating a maze of compliance and operational challenges. The regulatory environment for AI in healthcare is in constant flux, with federal and state laws evolving frequently, which leaves healthcare organizations uncertain about how to stay compliant [6][7][4]. Adding to this complexity are technical conflicts between AI systems and existing healthcare regulations [6][7][4].

The Black Box Problem in AI Algorithms

One of the biggest hurdles with AI in healthcare is the so-called "black box" issue. Many AI algorithms operate in ways that are difficult - or even impossible - for humans to fully understand. This lack of transparency creates serious problems when it comes to compliance and trust. For example, physicians often struggle to explain how an AI arrived at a treatment recommendation, making it harder to obtain informed consent from patients [8][10][11].

This lack of clarity has far-reaching consequences. Right now, only 16% of health systems have a systemwide governance policy that specifically addresses AI use and data access [11]. When AI systems contribute to errors or adverse events, determining who is legally accountable becomes a gray area [8][10][11]. For organizations bound by HIPAA regulations to protect patient health information, integrating these opaque systems into older infrastructures presents yet another layer of difficulty [7]. And as these systems increasingly influence clinical decisions, the lack of oversight only deepens the regulatory challenges.

Reduced Human Oversight in Automated Systems

As healthcare organizations adopt more AI-driven processes, there's a noticeable decline in human oversight. This shift creates governance gaps that can lead to serious operational risks. For instance, clinicians may overlook unusual patient cases that fall outside the scope of an algorithm's training data [8][9][11].

This reduced human involvement also makes it harder to comply with laws like HIPAA. While AI tools can enhance efficiency, they can't replace the critical thinking and ethical decision-making that human experts bring to the table [6][7]. The challenge for healthcare organizations is striking the right balance: leveraging AI for its efficiency while ensuring that human oversight remains strong enough to catch errors and validate AI-driven recommendations. Without this balance, the risks can outweigh the benefits.

Managing AI Risks with Censinet RiskOps™

As AI becomes more integrated into healthcare operations, managing its associated risks is no small task. Healthcare organizations must navigate operational and compliance hurdles while balancing AI's capabilities with the need for strict oversight. Enter Censinet RiskOps™, a solution designed to combine AI's efficiency with the accountability of human oversight, creating a transparent and effective risk management process.

Human-in-the-Loop Risk Management

AI systems, while powerful, often face criticism for their lack of transparency. To tackle this, human-in-the-loop practices ensure that expert judgment is applied at critical decision points. By incorporating human oversight, organizations can maintain clear, accountable risk evaluations while adhering to industry standards. This approach directly addresses concerns about the opaque nature of AI systems, ensuring decisions remain both reliable and understandable.
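One way to picture a human-in-the-loop gate is the sketch below. It is an illustrative assumption, not Censinet's implementation: the `Finding` type, the `REVIEW_THRESHOLD` value, and the function names are all hypothetical. Automated scoring proposes a disposition, but anything at or above the threshold stays open until a named reviewer signs off.

```python
from dataclasses import dataclass
from typing import Optional

REVIEW_THRESHOLD = 0.5  # hypothetical cutoff; a real program would tune this

@dataclass
class Finding:
    title: str
    risk_score: float  # 0.0 (benign) to 1.0 (critical), from automated analysis
    status: str = "pending"
    approved_by: Optional[str] = None

def triage(finding: Finding) -> Finding:
    """Auto-close only low-risk findings; everything else waits for a human."""
    if finding.risk_score < REVIEW_THRESHOLD:
        finding.status = "auto_closed"
    else:
        finding.status = "needs_review"
    return finding

def approve(finding: Finding, reviewer: str) -> Finding:
    """Record the human decision so the outcome is attributable and auditable."""
    if finding.status != "needs_review":
        raise ValueError("only findings awaiting review can be approved")
    finding.status = "approved"
    finding.approved_by = reviewer
    return finding

low = triage(Finding("Vendor left an optional questionnaire field blank", 0.2))
high = triage(Finding("Vendor stores patient data unencrypted", 0.9))
approve(high, reviewer="risk.analyst@example.org")
```

Recording the reviewer on the finding itself is the design choice that matters here: it keeps each high-risk decision traceable to a person rather than to the model.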

Using Censinet AI™ for Third-Party Risk Assessments

Managing third-party risks is a major challenge, especially in healthcare. Censinet AI™ simplifies this process by continuously evaluating the security posture of external vendors. This streamlined approach not only saves time but also allows risk teams to focus on more complex issues. Meanwhile, healthcare organizations can ensure their partners uphold rigorous security standards.

Vendor Performance Tracking with Censinet Connect™

Risks are constantly evolving, and keeping tabs on vendor performance is crucial. Censinet Connect™ offers a centralized platform to monitor vendor compliance and security practices in real time. By staying proactive, healthcare organizations can spot and address potential problems early, preventing them from escalating into larger issues. This continuous tracking strengthens vendor relationships while safeguarding organizational operations.

Deploying Censinet AI for Effective Risk Management

Implementing AI-driven risk management demands a combination of structured governance, cohesive teamwork, and scalable operations. For healthcare organizations, these elements are especially critical. Censinet AI simplifies these complexities, turning them into coordinated and manageable processes.

Centralized AI Governance with Censinet Dashboards

Effective risk management starts with clear and centralized oversight.

When data is scattered across various systems, managing AI risks can feel like an uphill battle. Censinet RiskOps serves as a central hub, bringing together AI-related policies, risks, and tasks into a single platform. Its user-friendly AI risk dashboard consolidates real-time data, offering leadership a transparent view of potential risks. This unified perspective allows governance committees to monitor compliance with regulations like HIPAA and FDA requirements more efficiently. Additionally, healthcare organizations can clearly define roles and responsibilities throughout the AI lifecycle, ensuring clinical oversight and security measures align with both operational goals and regulatory standards [4].

This centralized system provides the foundation for smoother team collaboration and stronger risk management practices.

Team Coordination for GRC Functions

Seamless collaboration among Governance, Risk, and Compliance (GRC) teams is key to managing AI risks effectively. Censinet AI enhances this by automatically assigning assessment findings and tasks to the right stakeholders. For instance, when a critical risk is flagged, the platform ensures it reaches the appropriate reviewers for immediate action. By automating these processes, organizations not only save time but also improve coordination between departments, leading to quicker responses and more efficient teamwork.
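The routing step described above can be sketched as a small rule table. This is a hypothetical illustration rather than the platform's actual logic: the category names, team names, and the `route_finding` function are assumptions made for the example.

```python
from typing import Dict, List

# Hypothetical mapping from finding category to the owning team
ROUTING_RULES: Dict[str, str] = {
    "privacy": "compliance",
    "security": "security_operations",
    "clinical_safety": "clinical_governance",
}
DEFAULT_TEAM = "risk_management"

def route_finding(category: str, severity: str) -> List[str]:
    """Return the teams that should receive a finding.

    Unknown categories fall back to the default team, and critical
    findings are additionally escalated to the governance committee.
    """
    teams = [ROUTING_RULES.get(category, DEFAULT_TEAM)]
    if severity == "critical":
        teams.append("ai_governance_committee")
    return teams

print(route_finding("security", "critical"))
print(route_finding("vendor_contract", "low"))
```

Keeping the rules in data rather than in branching code means a risk team could adjust ownership without touching the routing function, which is one plausible reading of "configurable" routing.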

Conclusion: Balancing AI Efficiency with Risk Management

AI in healthcare presents a unique challenge. On one hand, it has the power to streamline operations and enhance patient care. On the other, it brings with it serious cybersecurity risks. Consider this: 89% of healthcare organizations face nearly one cyberattack per week, and 53% lack the in-house expertise to effectively manage these threats [13]. The real challenge isn't about choosing between efficiency and security - it's about achieving both at the same time.

This is where Censinet RiskOps™ steps in. By offering a centralized governance framework, the platform unifies AI-related policies, risks, and tasks, providing healthcare leaders with real-time insights into potential vulnerabilities. This approach ensures that while organizations benefit from AI's efficiency, they don't sacrifice patient safety or regulatory compliance in the process.

To move forward, healthcare providers must adopt proactive risk management strategies instead of waiting to react to threats. The numbers are stark: hackers can breach systems in as little as five hours, while the average detection time for such breaches is a staggering 235 days [12]. It's clear that early detection and coordinated responses are essential. Systems need to evolve to identify vulnerabilities quickly, align governance and compliance efforts, and scale alongside AI advancements.

Censinet AI supports this proactive approach by automating routine risk assessments and directing critical findings to the appropriate stakeholders. This ensures that risk teams remain in control, using configurable rules and approval processes to balance automation with human oversight. The result? Faster detection of risks, improved team collaboration, and stronger data protection throughout an organization's AI ecosystem. Together, these measures create a robust defense against emerging threats.

As we've discussed, integrating risk management seamlessly into AI operations is key. Healthcare organizations that view risk management not as an obstacle, but as a foundation for sustainable growth, will be best positioned to thrive. With the right tools and governance in place, AI can deliver the efficiency it promises while upholding the security and compliance standards that safeguard patients and their trust.

FAQs

How can AI create cybersecurity risks in healthcare?

AI's integration into healthcare brings with it the risk of exposing sensitive patient data to breaches and unauthorized access. Weaknesses in AI systems can be exploited in several ways, such as adversarial attacks, tampering with algorithms, or even the misuse of connected medical devices. These vulnerabilities can lead to serious consequences, including privacy breaches and identity theft.

As healthcare facilities adopt more AI-powered tools, the interconnected network of devices becomes a potential gateway for cyber threats. This highlights the critical need for strong protective measures to safeguard patient information and ensure the security of healthcare operations.

What is the 'black box' problem in AI, and why does it matter in healthcare?

The 'black box' problem in AI highlights the challenge of understanding how AI systems arrive at their decisions. Many algorithms function in ways that are complex and often opaque, leaving humans unable to fully interpret their inner workings.

This issue becomes even more pressing in healthcare, where AI-driven decisions can have a direct impact on patient safety, regulatory compliance, and trust in medical systems. When the logic behind an AI's conclusions remains unclear, it raises concerns about accountability and risk management, making it harder to ensure the system's reliability. Tackling this problem is essential to building secure and dependable healthcare solutions.

How can healthcare organizations ensure AI-driven efficiency doesn’t compromise security and compliance?

Healthcare organizations can strike a balance between the efficiency of AI systems and the need for risk management by adopting a well-structured framework designed specifically for AI. This means putting strong governance policies in place, maintaining consistent human oversight, and keeping AI systems updated to tackle new risks as they arise.

To minimize potential issues, organizations should rely on tools that track and report AI-related incidents, carry out regular audits, and offer training programs for staff on AI safety and compliance. Taking these proactive steps helps healthcare providers improve efficiency while protecting patient data and ensuring secure operations.

Key Points:

What cybersecurity threats does AI introduce into healthcare?

- Data poisoning and model manipulation that distort diagnoses or treatment recommendations.

- AI-powered ransomware targeting EHR systems, imaging servers, and hospital networks.

- Shadow AI implementations operating outside official oversight.

- Medical device vulnerabilities enabling altered dosages or system disruption.

- High-cost breaches with multimillion-dollar operational impacts.

Why does the AI black-box problem threaten compliance and trust?

- Opaque logic prevents clinicians from explaining decisions to patients.

- Accountability gaps make legal responsibility unclear during errors.

- HIPAA alignment challenges due to hidden data flows and unclear system behavior.

- Low governance adoption, with only 16% of health systems having AI policies.

How do human factors amplify AI-related cybersecurity risks?

- Automation bias causes blind trust in AI outputs.

- Weak passwords and phishing risk compromise systems.

- Poor training and outdated workflows reduce readiness for evolving AI behavior.

- Slow detection of breaches increases financial and patient‑safety impact.

What governance frameworks support safe, human-centered AI?

- NIST AI RMF, emphasizing transparency, accountability, fairness, and reliability.

- Cross-functional governance committees integrating clinical, IT, legal, and compliance functions.

- Continuous monitoring and documentation aligned with HIPAA and FDA expectations.

- Human oversight at all critical decision points.

How does Censinet RiskOps™ streamline AI risk management?

- Human‑in‑the‑loop automation for assessments, routing, and approval processes.

- Censinet AI™ for fast third-party reviews, evidence summaries, and fourth-party risk identification.

- Censinet Connect™ for vendor performance tracking and collaborative risk sharing.

- Real-time AI risk dashboards consolidating policies, risks, and tasks across the enterprise.

What best practices help healthcare adopt AI safely?

- Explicit patient consent and clear disclosure when AI is used.

- Robust training programs for safe AI use and cybersecurity readiness.

- Process controls such as dual review, role‑based access, and audit logs.

- Continuous monitoring for drift, bias, vulnerabilities, and unusual model behavior.

- Transparent vendor oversight including documentation of updates and testing.
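As one illustration of the continuous drift monitoring mentioned in the list above, the sketch below computes a Population Stability Index (PSI) between a baseline sample of model scores and a recent one. PSI is a swapped-in, commonly used drift measure, not something the practices above prescribe. The bin edges, sample values, and the 0.25 alert level (a widely cited rule of thumb) are assumptions for the example.

```python
import math
from typing import List, Sequence

EPSILON = 1e-6  # floor so empty bins do not cause log(0) or division by zero

def bin_proportions(sample: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of the sample falling in each bin defined by ascending edges."""
    counts = [0] * (len(edges) + 1)
    for value in sample:
        index = sum(value >= edge for edge in edges)  # number of edges passed
        counts[index] += 1
    return [max(count / len(sample), EPSILON) for count in counts]

def psi(baseline: Sequence[float], current: Sequence[float],
        edges: Sequence[float]) -> float:
    """Population Stability Index: sum of (actual - expected) * ln(actual / expected)."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))

EDGES = [0.25, 0.5, 0.75]  # hypothetical score bins
baseline_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
shifted_scores = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 0.95]

DRIFT_ALERT = 0.25  # rule-of-thumb level for a significant shift
drifted = psi(baseline_scores, shifted_scores, EDGES) > DRIFT_ALERT
print("drift alert" if drifted else "stable")
```

A check like this only flags that the score distribution has moved; deciding whether the shift reflects a changing patient population, a data pipeline fault, or tampering still falls to human reviewers.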