The Psychology of AI Safety: Understanding Human Factors in Machine Intelligence

Post Summary

Biases, trust imbalances, and behavioral mistakes shape how clinicians and security teams use (or misuse) AI systems.

Automation bias, confirmation bias, and overconfidence lead users to trust AI outputs even when evidence contradicts them.

Too little trust prevents adoption; too much trust causes complacency and missed verification steps, increasing risk.

Human errors such as weak passwords, phishing susceptibility, poor authentication, misconfigurations, and slow response times, often rooted in inadequate training, drive cybersecurity vulnerabilities.

The NIST AI RMF, generative AI profiles, and cross-functional governance teams support safe adoption by enforcing oversight and accountability.

Censinet RiskOps™ strengthens AI risk management by centralizing oversight, routing findings to the right reviewers, automating risk summaries, and maintaining human-in-the-loop control.

AI in healthcare is transforming patient care, but its safety depends heavily on human factors.

AI is a tool, not a replacement for human judgment. Balancing trust, oversight, and clear governance is critical for safe, effective AI use in healthcare.

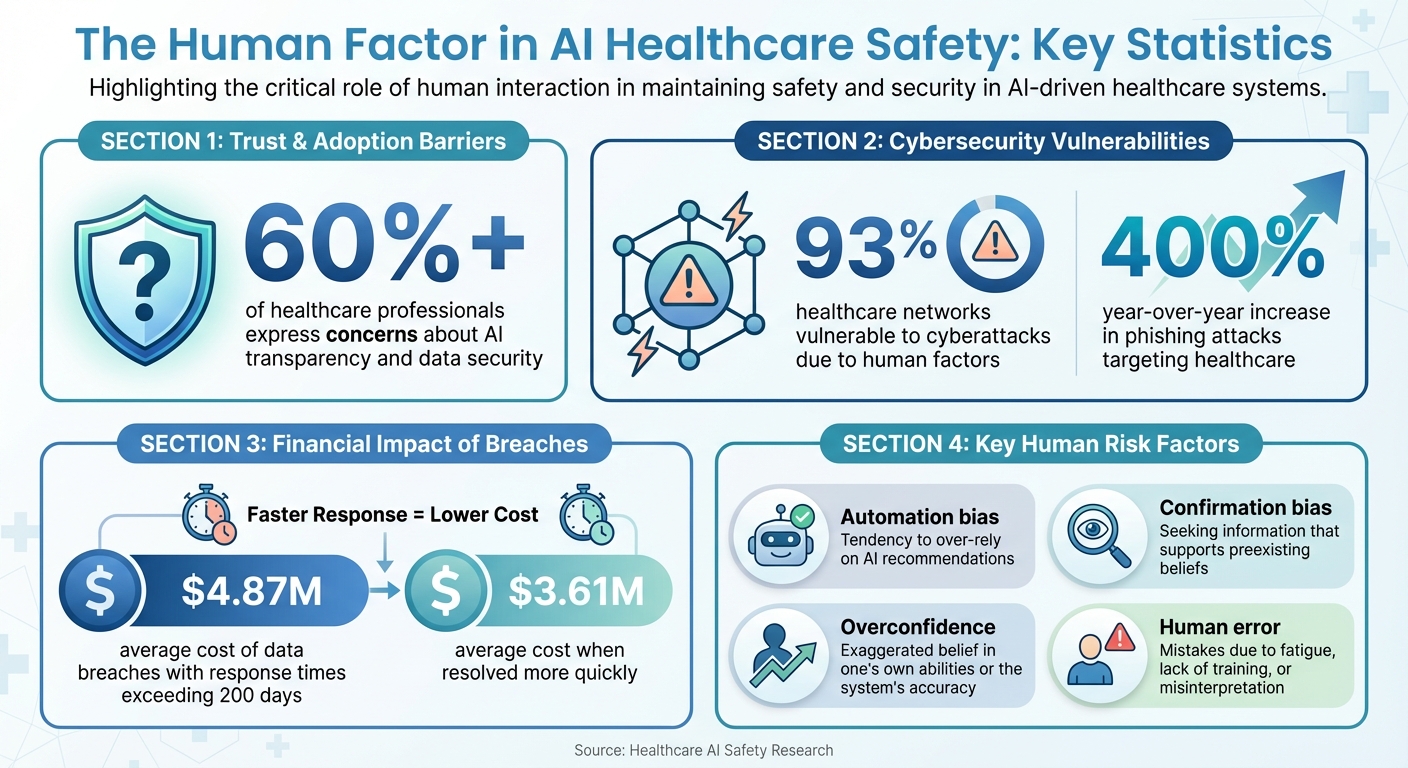

AI Safety Statistics in Healthcare: Key Human Factor Risks and Impacts

Human Factors That Influence AI Safety

Human psychology plays a key role in shaping AI safety, particularly in high-pressure fields like healthcare. Mental shortcuts, trust dynamics, and human behaviors can create vulnerabilities in cybersecurity. Let's explore how specific biases, trust issues, and human errors contribute to these challenges.

Cognitive Biases in AI Decision-Making

Automation bias is a major concern in healthcare. This bias occurs when people place too much trust in automated systems and accept their output even when other evidence contradicts it. For example, clinicians and security teams may rely heavily on AI-generated recommendations, ignoring warning signs that a human expert might catch. This over-reliance becomes especially dangerous when AI systems fail to detect threats (false negatives) or when algorithms trained on limited datasets provide flawed outputs [5].

Confirmation bias adds another layer of risk. During AI development, teams may unintentionally dismiss test results that don’t align with their expectations. Similarly, security professionals might focus only on alerts that confirm their existing beliefs, overlooking other critical indicators. The black box nature of AI systems - where decision-making processes are opaque - makes it even harder to identify and correct these biases [1][3].

Overconfidence in AI systems can lead organizations to skip essential checks and balances. Some healthcare institutions assume AI tools are inherently secure, rushing their deployment without adequate oversight. This misplaced confidence can introduce systemic vulnerabilities across interconnected healthcare networks.

Trust in AI Systems: Finding the Right Balance

Trust in AI systems is a delicate balancing act. On one hand, under-trusting AI can leave organizations vulnerable. Over 60% of healthcare professionals have expressed concerns about AI’s transparency and data security, leading to hesitation in adopting these tools [4]. As a result, valuable AI-driven security measures may go unused, leaving gaps that could otherwise be addressed.

On the other hand, excessive trust in AI systems can be equally dangerous. Security teams might assume that AI-powered tools will catch every threat, leading to complacency and skipped verification steps. The complexity of AI algorithms often makes it difficult for users to fully understand how decisions are made, amplifying the risks of blind trust [1]. Striking the right balance is critical: AI should be seen as a tool to enhance human capabilities, not a replacement for human judgment.

Human Error and Behavioral Risks

Human mistakes are another significant factor driving cybersecurity vulnerabilities in healthcare. Weak passwords, susceptibility to phishing, and poor authentication practices remain common. For instance, phishing attacks in healthcare have surged by 400% year-over-year, with professionals often serving as unintentional entry points for attackers [2]. Alarmingly, 93% of healthcare networks are still vulnerable to cyberattacks, largely due to human factors rather than purely technical flaws [2].

AI systems, by their very nature, evolve over time, which can further complicate matters. As algorithms are updated, users need ongoing training to adapt to new features and behaviors. Unfortunately, many healthcare organizations provide only initial training, leaving staff ill-equipped to handle changes. This gap can have costly consequences: data breaches with response times exceeding 200 days average $4.87 million in losses, compared to $3.61 million for incidents resolved more quickly, a difference of about $1.26 million per incident [2]. Delays in recognizing and addressing AI-related security issues not only impact finances but also put patient safety at risk.

From biases and trust imbalances to human errors, these factors highlight the need for robust oversight and comprehensive frameworks to ensure AI systems are used safely and effectively in healthcare environments.

Human-AI Interaction Risks in Healthcare Cybersecurity

When humans and AI systems collaborate in healthcare, new vulnerabilities emerge that go beyond traditional cybersecurity concerns. This section explores how human factors, when combined with AI vulnerabilities, can amplify risks in this critical field.

Cybersecurity Risks Created by AI

AI systems open up new paths for cyberattacks. For instance, data leakage can expose sensitive patient information. The opaque design of many AI models means they might inadvertently reveal protected health details, creating serious privacy concerns [1].

Another pressing issue is model manipulation. Attackers can tamper with training data or exploit weaknesses in AI systems to trigger errors - such as "hallucinations" - that could compromise medical decisions. Over-reliance on AI by security teams may also lead to missed signs of system breaches.

AI-powered social engineering attacks take these risks even further. Cybercriminals can use AI to design highly targeted phishing schemes, exploiting trust in technology to deceive individuals. These vulnerabilities also raise broader concerns about privacy, consent, and the ability to explain AI decisions.

Privacy, Consent, and Explainability

The complexity of AI systems makes it challenging to ensure informed consent. Patients may find it difficult to fully understand how their data is being used, especially when developers themselves struggle to explain the inner workings of these algorithms [1].

This lack of transparency can harm trust. Healthcare professionals may find it hard to validate AI recommendations, while patients might either overly trust or entirely dismiss AI tools. Both scenarios can lead to risky outcomes.

To address these challenges, healthcare organizations must focus on creating AI systems that are transparent about their decision-making processes and data usage. Clear communication is essential to uphold privacy standards and meet regulatory demands.

Regulatory Requirements for Human Oversight

In the U.S., voluntary frameworks like the NIST AI Risk Management Framework (AI RMF) stress the importance of ongoing human oversight to address the potential for AI-related errors [1][3]. These guidelines highlight the need for continuous monitoring to ensure that security measures effectively protect patient data [2]. Human involvement is key to aligning regulatory expectations with the realities of deploying AI in healthcare settings, ensuring both safety and compliance.

Frameworks and Governance Models for Human-Centered AI Safety

With the growing risks associated with AI, structured frameworks are critical for ensuring that technological advancements remain under human oversight. Healthcare organizations, in particular, need these frameworks to manage AI risks effectively while keeping human considerations at the forefront. The right approach offers clear guidance on balancing AI's capabilities with oversight, transparency, and accountability.

NIST AI Risk Management Framework (AI RMF)

The NIST AI Risk Management Framework provides a structured approach to AI safety in the United States. It is organized around four core functions (Govern, Map, Measure, and Manage) and describes characteristics of trustworthy AI that include transparency, accountability, fairness, and reliability [6]. These characteristics are meant to be considered at every stage of the AI lifecycle, making the framework adaptable to various applications.

In healthcare, this framework can be applied by embedding human oversight at every step. For example, transparency ensures clinicians understand how AI systems generate recommendations. Accountability calls for clear ownership when AI systems behave unexpectedly. Reliability, a cornerstone of the framework, directly addresses patient safety by requiring ongoing validation of AI systems to ensure they perform as intended in real-world clinical environments.
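The "ongoing validation" idea above can be made concrete with a small monitoring sketch. This is only an illustration, not something the NIST framework prescribes: the `RollingValidator` class, its window size, and the 0.90 threshold are all assumptions chosen for the example. It compares recent AI outputs against clinician-confirmed outcomes and flags the system for human review when agreement drops.

```python
from collections import deque


class RollingValidator:
    """Tracks agreement between AI outputs and clinician-confirmed outcomes.

    Hypothetical helper for illustration; names and defaults are assumptions.
    """

    def __init__(self, window=100, min_accuracy=0.90):
        # Keep only the most recent `window` comparisons.
        self.results = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def record(self, ai_output, confirmed_outcome):
        # Store True when the AI output matched the confirmed outcome.
        self.results.append(ai_output == confirmed_outcome)

    def accuracy(self):
        if not self.results:
            raise ValueError("no confirmed cases recorded yet")
        return sum(self.results) / len(self.results)

    def needs_review(self):
        # Flag for human review when recent accuracy falls below the threshold.
        return self.accuracy() < self.min_accuracy
```

A governance team could call `record()` each time a clinician confirms or overrides an AI output and check `needs_review()` on a schedule. The right window and threshold depend on the clinical context and would need to be set by the people accountable for the system.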

Interdisciplinary collaboration plays a pivotal role in enhancing safety by addressing risks from diverse perspectives.

Cross-Functional Governance Approaches

While frameworks establish the guidelines, cross-functional teams ensure those guidelines are effectively implemented. These teams bring together healthcare providers, IT specialists, cybersecurity experts, and policymakers to tackle AI risks from all angles [2][4]. This collaborative approach is crucial because AI safety challenges often span multiple areas of expertise.

Within these teams, fostering a culture of transparency and accountability helps identify vulnerabilities and assess how people, processes, and technologies interact [1]. Given the talent shortage in AI safety, structured governance models become even more important, as they help organizations optimize their existing expertise by clearly defining roles and responsibilities.

Governance committees should meet regularly to review AI system performance, address new risks, and update policies as threats evolve. Trust remains a significant barrier - over 60% of healthcare professionals have expressed hesitancy about adopting AI due to concerns over transparency and data security [4]. Addressing these trust issues requires open communication and robust oversight mechanisms.

Censinet RiskOps™ for AI Safety Governance

To put these governance models into practice, Censinet RiskOps™ offers a centralized platform for managing AI risks. Acting like air traffic control, the platform routes risk findings to designated stakeholders, such as members of AI governance committees, for review and approval.

Censinet AI™ enhances this process by introducing human-guided automation to key risk assessment tasks. For instance, vendors can complete security questionnaires in seconds, while the platform automatically summarizes evidence and generates risk reports. Importantly, this automation is designed to support human oversight, with configurable rules ensuring that decisions remain in human hands.

The platform also features a real-time AI risk dashboard, which consolidates data from all AI systems across an organization. This gives governance teams a clear view of policy compliance, risk exposure, and progress on mitigation efforts. By centralizing this information, Censinet RiskOps™ ensures accountability by tracking which teams are responsible for addressing specific risks and monitoring their resolution timelines. For healthcare organizations managing multiple AI systems, this unified approach prevents risks from being overlooked while preserving the human judgment required for complex safety decisions.

Strategies to Reduce Human-Related AI Safety Risks

Addressing human factors in AI safety calls for practical approaches that go beyond simply implementing new technologies. Healthcare organizations should focus on three key areas: equipping staff with targeted training, establishing process controls to reinforce human oversight, and utilizing tools that blend automation with sound human judgment.

Training and Awareness for Safe AI Use

More than 60% of healthcare professionals hesitate to embrace AI, often citing concerns about transparency and data security [4]. This reluctance frequently stems from a limited understanding of how AI operates and the risks it may pose. To bridge this gap, training programs need to cover the basics of AI, explain common cybersecurity threats, and teach safe usage practices for all team members.

Such training should address critical risks, including algorithmic bias, large language model (LLM) hallucinations, data breaches, and tampering with algorithms. It should also provide clear guidance on authentication protocols, access controls, encryption, and secure data transmission [1][7][2][8].

Human oversight remains crucial in validating AI recommendations. Training must highlight AI's limitations, particularly with "black box" models where decision-making processes lack transparency, and dynamic algorithms that can evolve unpredictably over time [1][8]. Staff should learn when to rely on AI outputs and when human intervention is essential. Incorporating regulatory standards like HIPAA, FDA guidance, and the NIST AI Risk Management Framework ensures compliance while promoting responsible AI practices [7][2][8].

Continuous training is vital as AI technologies and cyber threats evolve at a fast pace [1][2]. Encouraging a "no-blame culture" can help transform errors into valuable learning moments, fostering openness and reducing the underreporting of incidents [1]. Educating future healthcare leaders about AI’s benefits and risks can further cultivate a culture where awareness and accountability thrive [2].

Process Controls for Human Oversight

While training builds knowledge, process controls ensure that this knowledge translates into safe daily operations. Healthcare organizations should establish safeguards such as dual control mechanisms, human review checkpoints, and segregation of duties to prevent unauthorized or unsafe AI use. These measures create opportunities for human judgment to validate AI-driven decisions before they directly impact patient care.
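As a rough sketch of a human review checkpoint with dual control, the example below blocks an AI recommendation until enough distinct reviewers have signed off. The `Recommendation` class, the reviewer names, and the rule that patient-impacting recommendations need two approvals are assumptions made for illustration, not a prescribed policy.

```python
from dataclasses import dataclass, field


@dataclass
class Recommendation:
    """An AI-generated recommendation awaiting human sign-off (hypothetical model)."""
    summary: str
    affects_patient_care: bool
    approvals: set = field(default_factory=set)


def required_approvals(rec):
    # Assumption: patient-impacting recommendations need two distinct
    # reviewers (dual control); everything else needs one.
    return 2 if rec.affects_patient_care else 1


def approve(rec, reviewer):
    # A set ignores repeat sign-offs, so one person cannot approve twice.
    rec.approvals.add(reviewer)


def may_proceed(rec):
    # The checkpoint: nothing proceeds until enough distinct humans approve.
    return len(rec.approvals) >= required_approvals(rec)
```

Storing approvals as a set is the design choice that enforces dual control here: a second sign-off from the same reviewer does not move the recommendation forward.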

Role-based access controls restrict AI system interactions and sensitive data handling to authorized personnel only. Detailed audit logs of code changes and deployments, along with continuous monitoring, help identify deviations from established protocols. Regular vulnerability assessments and penetration testing can uncover weaknesses before they become exploitable risks. Additionally, an approval matrix clearly defines which individuals can authorize AI-related decisions at various levels, reducing the likelihood of single points of failure.
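The controls described above can be sketched in a few lines. The roles, permissions, risk tiers, and function names below are hypothetical placeholders; a real deployment would load them from organizational policy and write audit entries to tamper-resistant storage rather than an in-memory list.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping (role-based access control).
ROLE_PERMISSIONS = {
    "clinician": {"view_output"},
    "ml_engineer": {"view_output", "deploy_model"},
    "security_analyst": {"view_output", "view_audit_log"},
}

# Hypothetical approval matrix: roles allowed to authorize each risk tier.
APPROVAL_MATRIX = {
    "low": {"ml_engineer", "security_analyst"},
    "high": {"ai_governance_committee"},
}

audit_log = []


def record(user, role, action, allowed):
    # Log every access decision, including denials, with a UTC timestamp.
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })


def is_permitted(user, role, action):
    # Unknown roles get an empty permission set, so they are denied by default.
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    record(user, role, action, allowed)
    return allowed


def can_authorize(role, risk_tier):
    # Unknown risk tiers have no approvers, so authorization fails closed.
    return role in APPROVAL_MATRIX.get(risk_tier, set())
```

In this sketch a clinician can view AI output but cannot deploy a model, and only the governance committee role can authorize a high-risk decision. Both lookups deny by default when a role or tier is missing.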

Censinet AI™ for Streamlined AI Risk Management

To complement these controls, tools like Censinet AI™ show how automation can streamline risk management without sidelining human decision-making. The platform allows vendors to complete security questionnaires in seconds, automatically summarizes evidence, captures integration details, and documents risks from fourth-party vendors. This human-guided automation speeds up assessments while ensuring that critical decisions remain in human hands.

Configurable rules and review processes ensure that automation supports, rather than replaces, human judgment. Risk teams retain control over which tasks require manual approval and which can proceed automatically. The platform’s routing capabilities act like air traffic control, directing AI-related findings to the appropriate stakeholders - such as AI governance committee members - for review. This approach enables healthcare organizations to expand their risk management processes, addressing complex third-party and enterprise risks with greater efficiency, all while prioritizing patient safety and care quality.
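To illustrate the general idea of configurable routing rules, here is a generic sketch. It is not Censinet's API or implementation: the `Finding` fields, the severity scale, the reviewer group names, and the rule format are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """A risk finding from an automated assessment (hypothetical shape)."""
    title: str
    category: str  # e.g. "ai_model" or "third_party"
    severity: int  # 1 (low) to 5 (critical); the scale is an assumption


# Each rule is (predicate, reviewer group, requires manual approval).
# The first matching rule wins, so order is part of the configuration.
ROUTING_RULES = [
    (lambda f: f.category == "ai_model" and f.severity >= 3,
     "ai_governance_committee", True),
    (lambda f: f.severity >= 4, "security_team", True),
    (lambda f: True, "risk_analyst_queue", False),  # default rule
]


def route(finding):
    # Return the reviewer group and whether a human must approve.
    for matches, reviewers, needs_approval in ROUTING_RULES:
        if matches(finding):
            return reviewers, needs_approval
    # Unreachable while the default rule is present.
    raise ValueError("no routing rule matched")
```

Keeping the rules in a plain ordered list means a risk team can decide which findings require manual approval by editing configuration rather than code paths, and the default rule ensures no finding goes unrouted.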

Conclusion

AI offers exciting possibilities for healthcare, but its successful adoption requires tackling human challenges like cognitive biases, mistrust, and potential errors. The "black box" design of many AI systems complicates informed decision-making and raises trust issues - problems that algorithms alone cannot solve [1].

To address these challenges, frameworks like the NIST AI Risk Management Framework provide organizations with strategies to tackle issues such as algorithmic opacity, data security breaches, and system vulnerabilities. These frameworks emphasize transparency, ethical practices, and ongoing monitoring, offering practical tools to safeguard patient data and ensure accountability [1].

Solutions like Censinet RiskOps™ streamline AI risk management by directing critical insights to the appropriate stakeholders. Meanwhile, Censinet AI™ enhances risk assessments with customizable rules and review processes, ensuring human oversight remains intact. Together, these tools help healthcare providers expand their risk management capabilities without sacrificing safety.

FAQs

How does automation bias impact AI safety in healthcare?

Automation bias happens when healthcare professionals place too much trust in AI systems, sometimes sidelining their own clinical judgment. This over-reliance can result in mistakes, like missing crucial patient details or blindly following incorrect AI recommendations without double-checking.

To reduce this risk, AI systems need to be designed in ways that promote user awareness and encourage critical thinking. Strategies like comprehensive training programs, clear and transparent feedback from the system, and built-in safeguards can help ensure that AI tools enhance patient care rather than jeopardize it.

What human factors increase cybersecurity risks in AI systems?

Human factors that increase cybersecurity risks in AI systems often stem from human errors. These include choosing weak passwords, misconfiguring systems, or overlooking basic cybersecurity protocols. On top of that, social engineering tactics - like phishing emails or impersonation scams - take advantage of human vulnerabilities to breach systems. Insider threats, whether intentional or accidental, add another layer of risk, as does the improper use of AI tools, such as unauthorized deployment or tampering.

Recognizing and addressing these human-related risks is key for organizations aiming to protect their AI systems and minimize vulnerabilities.

Why is human oversight essential for ensuring AI safety in healthcare?

Human oversight plays a key role in keeping AI safe and effective in healthcare. It helps tackle critical challenges such as errors, biases, and weaknesses in the system. By actively supervising and guiding AI tools, humans ensure these technologies meet ethical guidelines, safeguard patient well-being, and protect sensitive data.

Healthcare AI often functions in high-pressure settings where decisions can have life-or-death consequences. Human involvement ensures responsible use of these tools, especially when dealing with complicated situations or unforeseen results. This partnership between humans and AI not only minimizes risks but also strengthens trust in these systems.

Key Points:

How do human factors influence AI safety in healthcare?

- Cognitive biases like automation bias lead clinicians to overtrust AI-generated recommendations.

- Psychological shortcuts cause security teams to overlook conflicting evidence.

- Behavioral errors such as poor authentication habits create cybersecurity vulnerabilities.

- Opaque AI models make it harder for humans to challenge incorrect outputs.

What types of cognitive bias impact AI decision-making?

- Automation bias encourages clinicians to follow AI suggestions without verification.

- Confirmation bias causes developers and security staff to dismiss conflicting alerts or test results.

- Overconfidence accelerates deployments without adequate oversight or validation.

- Black-box opacity makes bias harder to detect and correct.

How does trust affect AI performance and safety?

- Under-trust leads staff to avoid AI tools, missing benefits like risk detection.

- Over-trust causes skipped checks and false assumptions that AI will “catch everything.”

- Transparency gaps make it difficult for clinicians to validate AI outputs.

- Balanced trust positions AI as augmentation, not replacement.

How do human errors drive cybersecurity vulnerabilities?

- Weak passwords and phishing susceptibility expose AI-connected systems.

- Misconfigured devices create exploitable gaps across healthcare networks.

- Inadequate training leaves staff unprepared for evolving AI behavior.

- Delayed detection of breaches increases financial and patient safety consequences.

What frameworks and governance models support safe AI adoption?

- NIST AI RMF promotes transparency, accountability, fairness, and reliability.

- Generative AI profiles help manage model-specific risks.

- Cross-functional governance committees ensure multi-perspective oversight.

- Structured processes assign responsibility, review system performance, and update policies.

How does Censinet RiskOps™ strengthen AI risk management?

- Centralized governance routes AI risks to designated reviewers.

- Human-guided automation accelerates questionnaires and evidence summarization.

- Configurable review rules ensure human oversight stays intact.

- Real-time dashboards monitor policy compliance, risk exposure, and mitigation progress.